Key Takeaways

- Agentic AI is purpose-built for specific tasks, not general interaction. It doesn’t respond to prompts like a chatbot—it operates automatically based on system-level inputs.

- Limiting the scope of agentic AI improves its precision and reliability. Removing open-ended, user-driven input reduces variability, which makes the model easier to train, test, and trust for mission-specific outcomes.

- Agentic AI integrates into automated workflows, particularly in environments like AppSec, where fast, repeatable results are paramount.

Introduction

“AI agents” are being talked about everywhere: on panels, in keynotes, and across product pitches. But ask for a precise definition, and most answers fall short. Are these agents just chatbots with a new name? Are they meant to replace human roles entirely? At Qwiet, our take is different. We’ve deliberately built agentic AI with a narrow, focused scope, because that’s where it works best. In this article, we’ll explain what agentic AI is, how it differs from general-purpose AI, and why a tight scope makes it more reliable and effective for high-stakes tasks like security.

What Is Agentic AI?

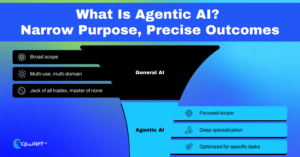

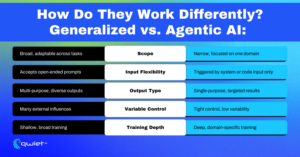

Agentic AI is built for precision. It’s designed to carry out one defined task repeatedly, within a tightly scoped set of parameters and with minimal variance. General-purpose AI models are built to be flexible and adaptive; agentic AI trades that versatility for depth. It isn’t designed to generalize across use cases but to excel within a fixed boundary. It doesn’t take open-ended prompts or natural language input. Instead, it is triggered by specific system events or machine-readable data, such as a completed scan, a new code deployment, or a configuration state change. These are what we refer to as ‘system-level inputs’.
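To make ‘system-level inputs’ more concrete, here is a minimal Python sketch of an agent that activates only on typed system events and has no code path for free-text prompts. The `EventType` values, `SystemEvent`, and `TriageAgent` are hypothetical names chosen for illustration; they are not Qwiet’s API.

```python
from dataclasses import dataclass, field
from enum import Enum


class EventType(Enum):
    """Hypothetical machine-readable triggers. Note there is no 'prompt' type."""
    SCAN_COMPLETED = "scan_completed"
    CODE_DEPLOYED = "code_deployed"
    CONFIG_CHANGED = "config_changed"


@dataclass(frozen=True)
class SystemEvent:
    """A structured event emitted by the pipeline, not typed by a person."""
    type: EventType
    payload: dict = field(default_factory=dict)


class TriageAgent:
    """Hypothetical agent scoped to exactly one kind of trigger."""

    TRIGGER = EventType.SCAN_COMPLETED

    def should_activate(self, event: SystemEvent) -> bool:
        # Activation is decided by the event type alone; nothing is interpreted.
        return event.type is self.TRIGGER


agent = TriageAgent()
print(agent.should_activate(SystemEvent(EventType.SCAN_COMPLETED, {"scan_id": "s1"})))  # True
print(agent.should_activate(SystemEvent(EventType.CONFIG_CHANGED)))  # False
```

Keeping the trigger set closed (an enum rather than arbitrary strings) is one way to make an agent’s entry points explicit and easy to test.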

Once activated, the agent doesn’t ask questions or require interpretation. It consumes structured input (machine-readable data or a specific system event), processes it according to its defined logic, and produces output that feeds into the next pipeline step. In practical terms, the agent has fewer variables to handle and is easier to optimize, and the absence of free-form human input removes a major source of ambiguity. The model can be trained on domain-specific data, tuned for edge cases, and validated against repeatable conditions. That kind of control makes it easier to improve accuracy and performance without chasing general applicability.
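The consume, process, and produce pattern can be sketched as a chain of small, deterministic stages, where each stage takes a structured record and returns a new one for the next step. This is a minimal Python illustration with assumed field names (`severity`, `needs_review`) and hypothetical stage functions; a real agent’s stages would wrap its trained model rather than hand-written rules.

```python
from typing import Callable, Iterable

Record = dict
Stage = Callable[[Record], Record]


def run_pipeline(record: Record, stages: Iterable[Stage]) -> Record:
    """Pass each stage's structured output to the next stage, in order."""
    for stage in stages:
        record = stage(record)
    return record


def normalize(record: Record) -> Record:
    # Hypothetical stage: canonicalize the severity label for later stages.
    return {**record, "severity": record["severity"].lower()}


def flag_for_review(record: Record) -> Record:
    # Hypothetical stage: a fixed rule, so the same input always yields the same output.
    return {**record, "needs_review": record["severity"] in {"high", "critical"}}


result = run_pipeline({"id": "F-1", "severity": "HIGH"}, [normalize, flag_for_review])
print(result)  # {'id': 'F-1', 'severity': 'high', 'needs_review': True}
```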

In application security, this becomes very concrete. A Qwiet agent might receive a static analysis result, isolate high-confidence vulnerabilities, cross-reference them with project metadata or known exploitability factors, and output a prioritized issue list. It’s not parsing prompts or guessing intent. It’s executing a pre-defined security task that’s been scoped, tested, and versioned for consistency. This structure allows for faster iteration and safer deployment. Because the input-output path is tightly managed, each update to the agent can be validated more thoroughly.
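The triage flow described above can be sketched in a few lines of Python. The `Finding` fields, the 0.8 confidence threshold, and the set of exploitable rule IDs are assumptions for illustration; in practice the exploitability signal would come from project metadata or other known exploitability factors, and the ranking would be whatever the agent was trained and validated to do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule_id: str
    file: str
    severity: int       # hypothetical scale: 1 (low) to 4 (critical)
    confidence: float   # analyzer confidence in [0.0, 1.0]


def prioritize(
    findings: list[Finding],
    exploitable_rules: set[str],
    min_confidence: float = 0.8,
) -> list[Finding]:
    """Keep high-confidence findings and rank them, exploitable ones first."""
    # Step 1: isolate high-confidence results from the static analysis output.
    confident = [f for f in findings if f.confidence >= min_confidence]
    # Step 2: cross-reference with known-exploitable rules, then order by
    # exploitability, severity, and confidence (all descending).
    return sorted(
        confident,
        key=lambda f: (f.rule_id in exploitable_rules, f.severity, f.confidence),
        reverse=True,
    )


findings = [
    Finding("sqli", "db.py", 4, 0.95),
    Finding("xss", "view.py", 3, 0.90),
    Finding("weak-hash", "auth.py", 2, 0.50),
    Finding("path-traversal", "files.py", 4, 0.85),
]
ranked = prioritize(findings, exploitable_rules={"xss"})
print([f.rule_id for f in ranked])  # ['xss', 'sqli', 'path-traversal']
```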

Agentic AI is a controlled tool running in a structured environment, not an open interface reacting to unpredictable user behavior. That control and predictability make it well suited to AppSec workflows, where precision, repeatability, and clarity matter more than conversational flexibility.

How Is Agentic AI Different from Generalized AI?

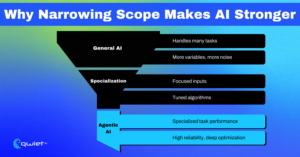

General-purpose AI is built to handle a wide range of tasks. It’s flexible, open-ended, and designed to respond to inputs, usually from humans. That flexibility comes with trade-offs: models have to balance performance across many domains, which often limits their ability to go deep in any one area. They’re tuned to be adaptive, not precise. Agentic AI takes the opposite path. It’s tightly scoped and designed to operate in a single, specific domain. The inputs are limited, the outcomes are fixed, and the focus is on precision and repeatability. This constraint isn’t a limitation; it’s a design decision that allows the system to perform better within a defined boundary.

From a technical perspective, agentic AI deals with fewer variables and avoids the unpredictability of human interaction. The input is unambiguous: structured events, not human language, trigger the system, which makes its behavior easier to manage, test, and refine. That smaller surface area also reduces the likelihood of unexpected behavior. Because the task is clearly defined, agentic models are easier to optimize. Training data can be more focused, inference paths can be narrowed, and performance baselines can be tightly controlled.

Updates to the model are simpler to validate because the intended behavior is constrained and the input domain is stable, which gives development teams more confidence in each deployment. This difference in scope makes agentic AI better suited to specific operational tasks, especially in application security. When a workflow is repeatable, its inputs are predictable, and its output must be reliable, reducing complexity in the model makes the entire system easier to reason about and trust.
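One common way to validate an update to a narrowly scoped component is a golden-file (regression) check: replay a fixed set of recorded inputs and compare the results against approved outputs before deploying. The sketch below uses a hypothetical agent that returns the IDs of findings at or above a confidence threshold; it illustrates the idea and is not a description of Qwiet’s release process.

```python
from typing import Any, Callable, Sequence, Tuple

# Each golden case pairs a fixed, recorded input with its approved output.
GoldenCase = Tuple[Any, Any]


def regressions(agent: Callable[[Any], Any], cases: Sequence[GoldenCase]) -> list:
    """Return the indices of golden cases whose output differs from the approved one."""
    return [i for i, (given, expected) in enumerate(cases) if agent(given) != expected]


def make_agent(threshold: float) -> Callable[[list], list]:
    # Hypothetical agent: report IDs of findings at or above a confidence threshold.
    def agent(scan: list) -> list:
        return sorted(f["id"] for f in scan if f["confidence"] >= threshold)
    return agent


GOLDEN = [
    ([{"id": "a", "confidence": 0.9}, {"id": "b", "confidence": 0.3}], ["a"]),
    ([], []),
]

print(regressions(make_agent(0.8), GOLDEN))  # []  (no regressions)
print(regressions(make_agent(0.2), GOLDEN))  # [0] (behavior changed on case 0)
```

This kind of check is practical mainly because the input domain is stable; for an open-ended model, a fixed set of fixtures says much less about behavior on new inputs.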

Why Did We Intentionally Limit Our Agents?

We designed our agents to work within a narrow, well-defined scope so they could be trained with greater accuracy and depth. This setup allows us to refine the models for specific tasks, test them against consistent inputs, and make small, targeted improvements that directly affect output quality. When the use case is clear, the results are easier to measure and improve.

Broad AI models tend to introduce variability that’s hard to manage. They can react in ways that aren’t tied to the input or generate results that fall outside what’s expected. Limiting what the agent accepts as input and keeping the output task-specific lowers the chances of drifting into unintended behavior. This helps keep the model focused and makes testing more straightforward. Security work doesn’t leave much room for guesswork. We need tools that respond predictably when analyzing code, identifying risks, or delivering findings.

When the input is consistent and the model is tuned to respond in one specific way, it’s easier to evaluate whether it’s working. This also helps ensure that teams trust the output and know how to act on it. That’s why we’ve limited our agents to system-level inputs like scan results or code changes. They don’t respond to broad prompts and are not designed to interpret open-ended questions. This structure keeps them connected to the task, makes them easier to maintain, and better suits them for real work inside production pipelines.
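Limiting an agent to system-level inputs can be enforced at the boundary, before any model runs. The Python sketch below accepts only a JSON scan result with an assumed set of fields (`scan_id`, `commit`, `findings`) and rejects everything else, including natural-language requests. The schema and the `RejectedInput` exception are hypothetical.

```python
import json

# Hypothetical schema for a scan-result payload.
REQUIRED_FIELDS = {"scan_id", "commit", "findings"}


class RejectedInput(ValueError):
    """Raised when input is not a recognized system-level payload."""


def parse_scan_result(raw: str) -> dict:
    """Accept only a JSON scan result with the expected fields; refuse anything else."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        # Free text such as "Can you check my code?" never reaches the model.
        raise RejectedInput("input is not machine-readable JSON") from exc
    if not isinstance(data, dict):
        raise RejectedInput("expected a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise RejectedInput(f"missing fields: {sorted(missing)}")
    if not isinstance(data["findings"], list):
        raise RejectedInput("'findings' must be a list")
    return data


scan = parse_scan_result('{"scan_id": "s1", "commit": "abc123", "findings": []}')
print(scan["scan_id"])  # s1
```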

Best Ways to Use Agentic AI (and Avoid Misuse)

What Agentic AI Is Good At

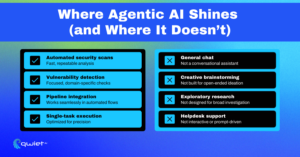

Agentic AI works best when it’s tied to a clearly defined task. It’s built to run repeatable jobs that don’t require human interaction to start or complete. When the input is consistent and the expected output is known, an agent can handle that task faster and more predictably than broader AI systems. One of the strengths of agentic AI is its ability to integrate into systems that run automatically. It can slot into pipelines triggered by code commits, configuration changes, or analysis events, places where waiting on manual intervention would slow everything down. The agent reacts to signals from the system, not instructions from a person.

It also performs well when accuracy is the priority. Since its inputs are controlled and its behavior is tightly scoped, it can be tuned for consistent results. That helps reduce false positives and makes the output easier to interpret. It doesn’t try to guess what to do; it runs what it’s been built to do. This structure is a good fit in environments like AppSec. An agent can take a security scan as input, prioritize findings based on policy, and send structured output into a ticketing or alerting system. It does this automatically, without needing a user prompt or decision point in the middle of the workflow.
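The scan-to-ticket flow can be sketched as a policy lookup that turns each finding into a structured action record for a downstream ticketing or alerting system. The `POLICY` table, severity labels, and record fields below are assumptions for illustration; a real integration would map them onto whatever schema the ticketing tool expects.

```python
import json
from dataclasses import dataclass

# Hypothetical policy: what to do with a finding at each severity level.
POLICY = {"critical": "page", "high": "ticket", "medium": "ticket", "low": "log"}
# Lower rank is more urgent; used to order the outgoing actions.
RANK = {"page": 0, "ticket": 1}


@dataclass(frozen=True)
class Finding:
    finding_id: str
    severity: str
    file: str


def to_actions(findings: list[Finding]) -> list[dict]:
    """Map findings to structured action records, most urgent first."""
    actions = []
    for f in findings:
        action = POLICY.get(f.severity, "log")  # unknown severities are only logged
        if action == "log":
            continue  # log-only findings are not sent downstream
        actions.append({"action": action, "finding_id": f.finding_id,
                        "severity": f.severity, "file": f.file})
    return sorted(actions, key=lambda a: RANK[a["action"]])


findings = [
    Finding("F1", "low", "utils.py"),
    Finding("F2", "high", "api.py"),
    Finding("F3", "critical", "auth.py"),
]
print(json.dumps(to_actions(findings), indent=2))
```

Emitting structured records rather than prose lets the downstream system parse fields directly instead of interpreting free text.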

What It’s Not Good At

Agentic AI isn’t built to be a general assistant. It doesn’t handle casual input, switch between unrelated tasks, or respond to prompts like a conversational model, and it cannot adapt in real time if the job isn’t clearly defined. There’s no back-and-forth, no clarifying questions, and no way to steer the conversation. Once triggered, it runs a fixed task and outputs the result; any interpretation or follow-up happens elsewhere in the system. If you use agentic AI for tasks that require flexible input, you’ll likely hit its limits fast. These agents aren’t meant for creative generation, research queries, or variable human interaction. That’s not a gap; it’s part of the design. They were built to solve specific problems, not to handle open-ended requests.

Good use cases include automated security analysis, data extraction from structured inputs, and decision support based on known signals. Misuse usually happens when teams expect it to respond like a broader model, something it’s not meant to do. Keeping it within its lane helps maintain performance and keeps workflows clean.

Conclusion

Agentic AI isn’t designed to mimic human behavior or respond to every question; it’s built to perform focused, automated work precisely. By limiting scope and removing unnecessary complexity, we make it easier to train, deploy, and trust in production workflows. The result is an AI that delivers results faster, with fewer unexpected outcomes. Teams using AI in AppSec should prioritize targeted performance over broad capability.

Book a demo with Qwiet to see how precision-built agents can level up your pipeline.

FAQs

What is agentic AI?

Agentic AI is artificial intelligence designed to perform a specific task within a defined scope, triggered by system events rather than user prompts. It’s optimized for automation, not conversation.

How is agentic AI different from general AI?

Agentic AI operates within strict boundaries and doesn’t respond to open-ended inputs. General AI is built for flexibility, while agentic AI focuses on precision and repeatability in a single domain.

What are some use cases for agentic AI in cybersecurity?

Agentic AI can automate tasks like vulnerability triage, policy enforcement, and risk prioritization using static or dynamic scan data, making it a strong fit for CI/CD pipelines.

Can agentic AI replace security analysts?

No. Agentic AI supports analysts by handling repeatable tasks at scale, but doesn’t replace human judgment. It works best when paired with broader review and escalation workflows.

Why is limiting the scope of agentic AI beneficial?

Keeping agentic AI tightly scoped makes it easier to tune, test, and deploy reliably. It reduces noise, improves output quality, and makes the system more predictable in production environments.