Key Takeaways

- Understanding the security risks introduced by machine learning systems beyond the model layer is crucial. These risks include outdated dependencies, weak secrets management, and architectural mismatches in legacy codebases.

- Secret sprawl is a growing concern, especially with AI agents embedded in CI/CD workflows. This trend makes runtime exposure, hardcoded credentials, and misconfigurations increasingly difficult to control, potentially leading to serious security breaches.

- The end of open source Vault is a pressing issue that forces teams to rethink their secrets management urgently. This requires weighing alternatives and ensuring new tools fit into fast-moving, developer-centric pipelines.

Introduction

AI and machine learning are standard parts of modern software stacks, from user-facing features to behind-the-scenes automation. But as adoption increases, so do the risks. Security challenges aren’t limited to model behavior; they also extend to legacy systems, CI/CD pipelines, and secrets management practices that support these workloads. In this article, you will learn how legacy codebases, mismanaged secrets, and changes like HashiCorp’s Vault license shift introduce new security concerns and what engineering teams can do to stay ahead.

Legacy Code Meets Modern Threats

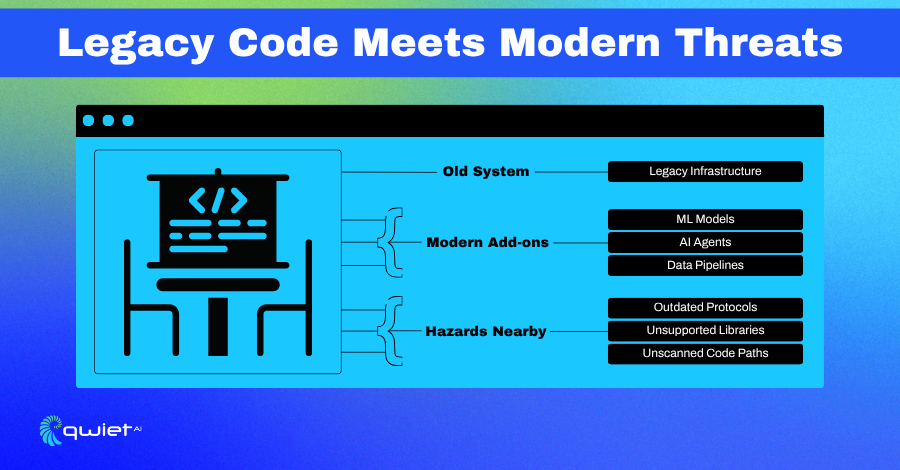

When ML Meets Outdated Systems

Integrating machine learning into older systems often means forcing new workflows into codebases that weren’t designed for it. These legacy platforms may still run on outdated runtimes, use custom frameworks, or rely on assumptions that predate current security models.

When you introduce ML workloads, which often need fast data access, external calls, and constant model updates, you’re layering dynamic behavior onto brittle infrastructure.

This creates unstable boundaries between components. For example, you might call a cloud API from a codebase that still uses TLS 1.0 or rely on Python environments that haven’t been patched in years. These systems often lack proper service segmentation or runtime isolation.

That makes it harder to control what data flows where, and nearly impossible to enforce fine-grained security policies. Before evaluating the ML layer, developers often debug race conditions, dependency conflicts, and access issues.

Blind Spots Born from Technical Debt

Older stacks typically have unresolved security debt: no longer maintained libraries, implicit trust between services, and logging mechanisms that miss essential signals. These gaps become more dangerous when machine learning systems are added.

ML components often require broader access to data, computation, and external APIs, which amplifies the exposure of already weak parts of the stack.

Security tooling often hits a wall in these environments. Static scanners may not accurately interpret custom loaders or older programming languages. Dynamic tools like IAST or instrumentation agents frequently break or give incomplete data because the stack doesn’t support modern introspection hooks.

Even basic detection, such as hardcoded secrets or exposed credentials, becomes more challenging when the tooling can’t fully comprehend the execution model or dependency tree. The result is vulnerable code paths that go unflagged, not due to lack of effort but because the architecture actively obscures them.

The New Danger of Secrets Sprawl

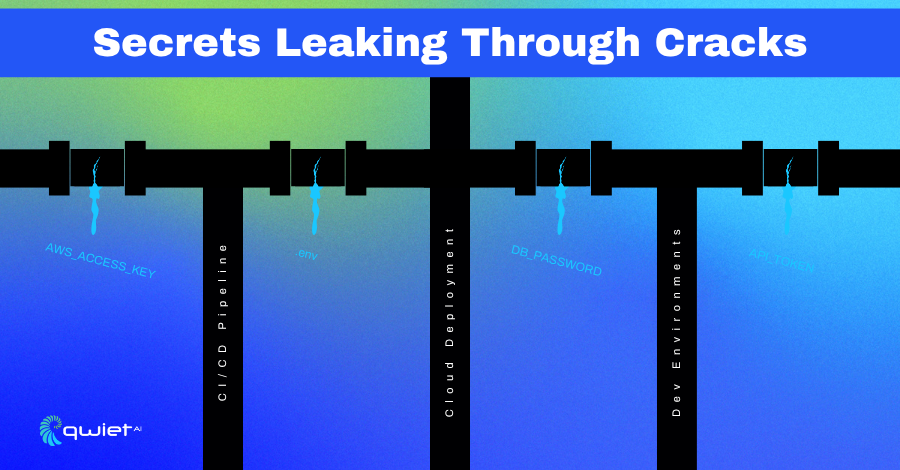

Secrets Everywhere, Access Always On

Machine learning systems depend highly on various services, including remote models, external APIs, feature stores, and data warehouses.

Each touchpoint requires authentication, which means managing secrets, including API tokens, keys, service credentials, and configuration files. These secrets are often embedded directly into code, test files, or configuration scripts in fast-moving environments to avoid blocking deployment or experimentation.

That quick-fix habit spreads fast. It’s common to find credentials left in notebooks, old environment files, or tucked into CI/CD pipeline variables without proper access control. The more distributed the infrastructure becomes, the harder it is to keep track of who has access to what, and whether those secrets have been rotated, scoped, or even logged.

They can still surface at runtime even when secrets are technically stored outside the repository, such as in cloud-managed vaults or secrets managers. Without strict policies and continuous monitoring, these runtime exposures can become attack vectors, especially when logs, crash dumps, or memory snapshots inadvertently leak them.

More Automation, More Exposure

The risk of secret exposure increases as AI agents and ML-driven automation are integrated into CI/CD workflows. These agents often operate across environments that span build, test, and deployment, requiring access to multiple credentials.

The default configs that make automation easy usually skip granular access control. That means over-permissioned tokens, long-lived credentials, and reused secrets across environments.

In high-velocity pipelines, secrets are frequently passed as environment variables or injected into containers. If you’re not scrubbing logs or locking down access controls, it’s easy to leak credentials into build artifacts or deployment metadata. And once something leaks into version control, the blast radius can be hard to contain, especially in larger organizations with blurry access boundaries.

Secrets scanners help catch leaks, but they’re only as effective as the context they’re given. A token might not look dangerous unless the tool can tie it to a real privilege. Environmental misconfigurations, such as permissive IAM roles or overly exposed network scopes, often fall outside the scope of basic scanning. What’s needed are tools that understand how secrets are used, not just where they appear.

HashiCorp Vault Winds Down Open Source – What Now?

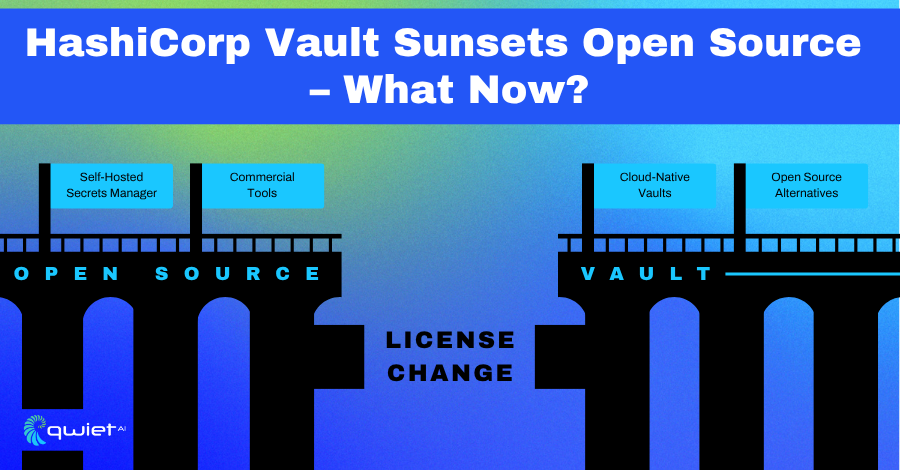

Understanding the Licensing Shift

HashiCorp’s move to the Business Source License (B.S.L.) for Vault marked a clear break from its previous open-source model. While the code remains publicly viewable, restrictions on commercial use have caused a ripple effect for teams that built their secrets management strategy around open access and self-hosted flexibility. Projects that relied on community contributions, integrations, or downstream forks are now left with uncertainty.

For security teams, this change isn’t just philosophical; it introduces real questions about long-term support, operational freedom, and integration reliability. If Vault is embedded into pipelines, infrastructure provisioning, or authentication flows, a licensing change touches everything from compliance reviews to how updates are handled. Teams now need to assess whether their usage still aligns with the new terms, and what it would take to migrate if not.

Rethinking Your Secrets Strategy

The Vault licensing shift has prompted many teams to explore alternatives, including open-source and commercial options. Projects like Infisical, Doppler, and Akeyless are seeing renewed attention.

Some offer similar feature sets, including secret rotation, policy-based access, and audit logging, while others focus on integration depth or ease of use. What matters most is whether the replacement can be integrated into your stack without disrupting your deployment patterns or increasing operational load.

Before switching, teams should evaluate a few non-negotiables: first-class API support, zero-downtime secret rotation, fine-grained access control, and compatibility with infrastructure-as-code workflows.

If you’re deeply involved in Terraform, Kubernetes, or cloud-native automation, these factors matter more than branding or UI. The right tool supports your delivery model without getting in the way, especially when managing high-velocity pipelines or working across multiple teams and environments.

How Security Teams Can Stay Ahead

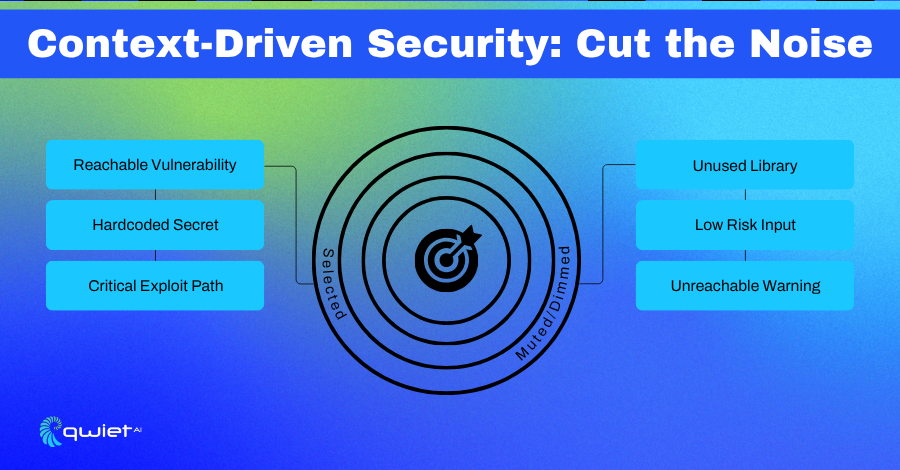

Contextual Detection That Developers Can Act On

Security tools that flag every potential issue without understanding the execution context aren’t helping anyone. Findings that don’t tell you if data can reach a vulnerable point in the code become noise.

Contextual detection, as provided by Qwiet AI, solves this by tracing actual paths through the application. It evaluates how inputs flow, whether a vulnerability is exposed, and under what conditions it’s exploitable.

This matters in real workflows; if you’re examining a tainted data flow, you want to know whether it passes through sanitization, triggers an authentication check, or reaches a potentially dangerous sink, such as exec or eval.

That level of depth helps you decide what to fix now versus what can be logged and monitored. It saves time during triage, providing developers with cleaner, focused, testable, and actionable guidance.

Practices That Support Both Speed and Safety

You don’t need a perfect system to start making security more manageable; you need repeatable workflows that work with how you ship code. Rotate secrets as part of your deployment pipeline.

Add secrets scanning to CI, with clear ownership for handling leaks. If you’re stuck with legacy code, isolate the high-risk areas first. Even basic modularization or version pinning can lower risk while you plan longer-term refactors.

The goal isn’t to lock things down; it’s to make secure choices part of the typical workflow. Tools that surface context-aware findings directly in pull requests or fail builds only when real risk is involved help strike that balance. Developers stay in flow, but security still has guardrails. You move fast, fix what matters, and avoid the burnout of reacting to alerts that don’t go anywhere.

Conclusion

Machine learning pushes boundaries and expands the attack surface in subtle, complex ways. Staying secure means looking beyond the models to everything they touch, from legacy systems to runtime environments and the secrets that tie them together. Teams that prioritize contextual detection, secret hygiene, and scalable tooling will be better equipped to handle these changes. Book a demo and look closely to see how Qwiet AI helps teams achieve this.

FAQs

What are the main security risks in machine learning environments?

Beyond model attacks, risks include legacy code integration, secrets exposure, misconfigured CI/CD pipelines, and unpatched dependencies that create blind spots in traditional security tools.

Why is secret sprawl a concern in ML and AI systems?

ML systems need access to APIs, models, and databases, all requiring secrets. These are often hardcoded or mismanaged across environments, increasing the risk of leaks and unauthorized access.

What happened to HashiCorp Vault’s open source license?

HashiCorp moved Vault to a Business Source License (BSL), which restricts commercial use and raises concerns for teams that rely on Vault for secrets management within open-source stacks.

What are the alternatives to HashiCorp Vault for secret management?

Alternatives include Infisical, Doppler, Akeyless, and other tools that support secret rotation, access control, and integrations with modern infrastructure-as-code and CI/CD workflows.

How can security teams better support ML in legacy environments?

Focus on contextual vulnerability detection, integrate secrets scanning into pipelines, and gradually modernize legacy systems to reduce exposure without disrupting delivery speed.