Key Takeaways

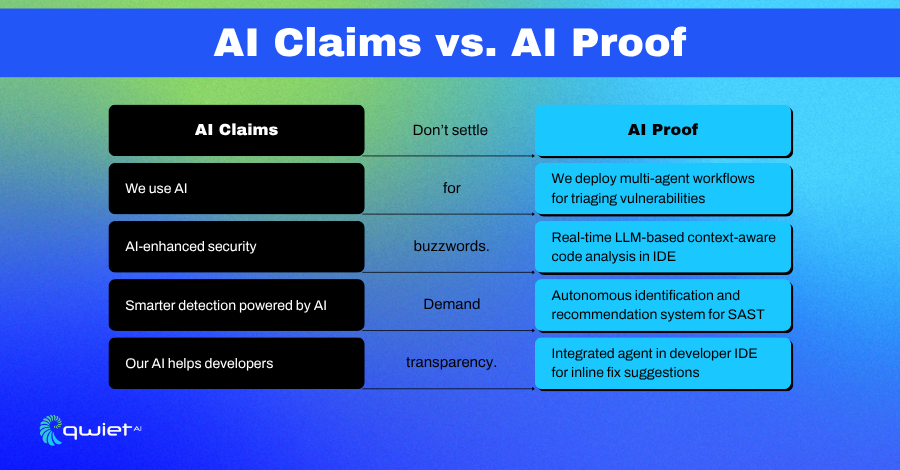

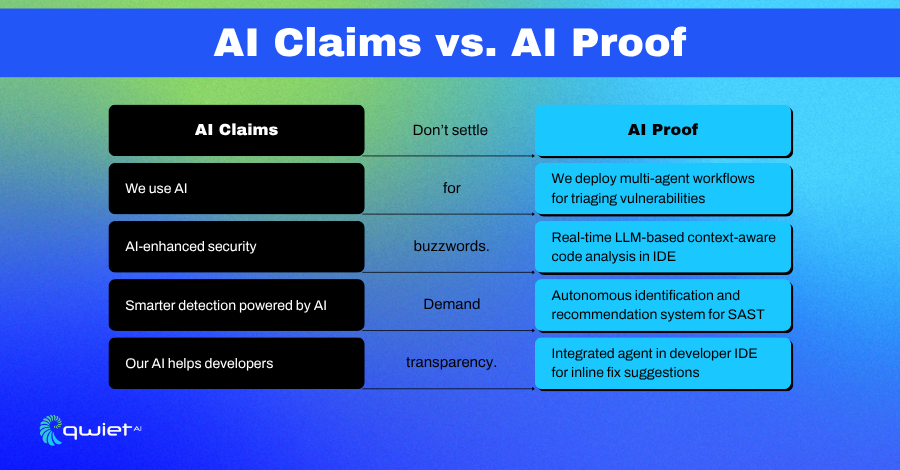

- Claiming AI isn’t proof. Real value comes from demonstrating how the AI actually functions, not merely stating that it exists.

- AI-washing erodes trust. Vague claims or superficial integrations damage credibility across the AppSec space.

- Agentic, transparent systems win. Teams should look for tools that integrate AI deeply, explain outputs, and adapt to developer workflows.

Introduction

LLMs are everywhere; nearly every vendor claims to have AI in their platform. But most of those claims don’t hold up under scrutiny. We’ve seen this before: buzzword hype with little substance. In AppSec, where real outcomes matter, what separates noise from signal is proof. The companies that will stand out can demonstrate what their AI does, where it fits, and how it helps rather than just claiming to have it.

Welcome to the AI Tsunami

Standing on the Beach, Watching the Water Pull Back

It feels like everyone hit the AI button at the same time. In the span of months, nearly every product announcement, roadmap, or investor pitch now includes some kind of AI story. The sudden acceleration has left most teams scrambling to either bolt something on or reframe what they already have.

You can see the panic in the way companies are reacting. Some are acquiring anything with a model and a few use cases. Others are pivoting entire platforms without a clear strategy, just to stay in the conversation. It’s reactive, not deliberate.

There’s also a growing fear of being left behind. That fear drives decisions made in haste, without a thorough understanding of what is needed to make AI work in production. It’s the kind of noise that creates short-term wins and long-term problems.

Where Hype Outpaces Readiness

AI doesn’t work just because a company says it does. But that hasn’t stopped vendors from rushing to stamp “AI-powered” on anything that even touches an LLM. Very few teams are prepared to build, maintain, or explain real AI systems at scale.

This wave differs from past technological shifts. It’s not just a new feature; it changes how systems process information, interact, and learn. That requires actual thought, not just wrappers around someone else’s API.

What’s coming next won’t be about who said “AI” first. It’ll be about who did the work, made something real, integrated it properly, and showed how it helps users today. That’s the bar. The ones who hit it will stand out. The rest will get washed out.

AI Washing Is the New Vaporware

We Use AI, But What Does That Even Mean?

“AI” is quickly becoming the new check-the-box phrase. It’s in pitch decks, product one-pagers, and conference booths. However, more often than not, there is no explanation behind it, no clarity on what the AI does or how it fits into the product.

It’s easy to claim AI powers your platform when that means calling a public API and wrapping the response in a UI. Buyers are starting to see through this, and when they dig in, they often find very little behind the curtain.

This isn’t just a branding issue; it erodes trust. Teams with genuine AI talent that solve real-world problems often get lumped in with vendors making empty claims. As buyers are burned, the entire category becomes harder to sell with confidence.

The Cost of Lazy Claims

There’s a reason you hear jokes like, “Our AI does AI things.” It’s satire, but it’s also a fair callout. When companies promote AI without providing details, examples, or explainability, it signals a lack of depth. It’s not leadership, it’s hand-waving.

Engineering and security leaders quickly spot gaps. A platform that can’t explain how its AI makes decisions, where the models are used, or how the outputs are validated raises red flags, and that scrutiny will only increase.

Teams don’t need slogans. They need transparency. And in a crowded space, those who can speak clearly about how their AI works, what it does, where it resides, and why it matters are the ones people will take seriously.

Real AI vs. API Wrappers

If Your AI Is Just an API Call, You’re Not an AI Company

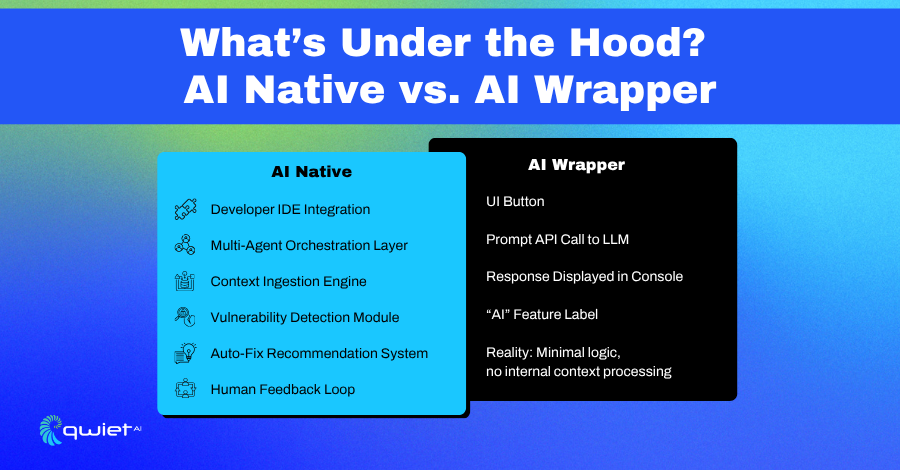

We’ve reached the stage where simply routing data to ChatGPT qualifies as an AI strategy for some. Many vendors are taking this shortcut and calling it innovation. It’s not. It’s outsourcing the intelligence to someone else’s system and slapping a label on the response.

There’s nothing wrong with calling an external model when it helps. But when that call is the entire solution, with no meaningful orchestration, logic, or workflow control around it, you’re not building AI. You’re relabeling someone else’s work.

The difference shows up quickly in practice. Tools that rely only on external calls don’t learn from the environment they operate in. They can’t apply domain knowledge, correlate across codebases, or adapt to the context of a specific workflow. That limits what they can deliver.

Building AI That Works With You

Real AI-native systems don’t just call models; they manage state, reason across inputs, and assist through structured decisions. They know when and how to act based on signals from the environment. That turns a tool into a workflow participant, not just a reactive output generator.
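To make the contrast concrete, here is a minimal sketch of the two patterns. It is an illustration only, not any vendor’s implementation: `thin_wrapper`, `TriageAgent`, the `reachable` signal, and `fake_model` are all hypothetical names, and the model is a stub rather than a real API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical stand-in for any model call; no real API is used here.
ModelFn = Callable[[str], str]


def thin_wrapper(model: ModelFn, snippet: str) -> str:
    """The wrapper pattern: forward the input, return the output, keep nothing."""
    return model(f"Review this code: {snippet}")


@dataclass
class TriageAgent:
    """Illustrative agent: keeps state and turns model output into a decision."""
    model: ModelFn
    seen: Dict[str, int] = field(default_factory=dict)  # finding -> times seen

    def review(self, snippet: str, reachable: bool) -> Dict[str, str]:
        finding = self.model(f"Review this code: {snippet}")
        self.seen[finding] = self.seen.get(finding, 0) + 1
        # The decision depends on signals from the environment (assumed here:
        # reachability and history), not only on the model's text.
        if not reachable:
            action, reason = "suppress", "code path is not reachable"
        elif self.seen[finding] > 1:
            action, reason = "escalate", f"seen {self.seen[finding]} times"
        else:
            action, reason = "report", "first occurrence on a reachable path"
        return {"finding": finding, "action": action, "reason": reason}


def fake_model(prompt: str) -> str:
    """Stub model so the sketch runs without network access."""
    return "possible SQL injection"


agent = TriageAgent(fake_model)
print(thin_wrapper(fake_model, "query(user_input)"))
print(agent.review("query(user_input)", reachable=True))
print(agent.review("query(other_input)", reachable=True))
```

The wrapper returns the same text no matter how often it is called. The agent’s second call produces a different action because it remembers the first, and every result carries a stated reason.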

At Qwiet, we’ve built agentic workflows from the start. That means our AI doesn’t just generate responses; it assesses, correlates, prioritizes, and learns from the developer environment it’s embedded in. It can explain what it’s doing and why.

We don’t need to exaggerate what our platform does; we understand how each agent works, its contribution to the outcome, and the boundaries it respects. That transparency matters because it makes the tool predictable, reliable, and safe in production pipelines.

Preparing for the Wave, Not Just Posing for It

Building for What’s Coming, Not Just What’s Trending

The AI wave isn’t coming; it’s already here, but not all platforms are ready. Many are still scrambling to bolt AI onto legacy systems without understanding how it should work or where it adds value. Those efforts might look good in a demo, but won’t hold up under real-world pressure.

In AppSec, that pressure manifests in fast-moving CI/CD pipelines, massive codebases, and development teams that need clarity, not more noise. The tools that will make it through this shift are those built to support these realities with explainable systems, seamless integration, and consistent performance.

You can’t fake context. If a tool doesn’t understand where it sits in the workflow, what developers are doing, or how security teams prioritize risk, it won’t last. That’s why depth matters: surface-level AI doesn’t solve anything. It just adds layers to the problem.

Real AI Needs to Show Up Where the Work Happens

AppSec platforms that support both security practitioners and developers throughout the workflow are the ones moving forward. That doesn’t mean pushing every alert earlier or adding more checks; it means offering help that lands at the right moment, in the right format, with the right context.

Specificity is what distinguishes real tools from imitations. When AI can pinpoint an issue, explain why it matters, and suggest a fix that respects the codebase, it earns its place in the stack. Generalized responses or vague suggestions aren’t helpful; they create more review cycles, more questions, and less trust.
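One way to picture that level of specificity is as a structured finding that must carry a location, a rationale, and a fix before it reaches a developer. The sketch below is a hypothetical example; the `Finding` fields and the `missing_details` check are assumptions made for illustration, not a description of any product’s schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Finding:
    """Hypothetical shape of a specific, reviewable security finding."""
    file: str
    line: int
    issue: str
    why_it_matters: str
    suggested_fix: str


def missing_details(finding: Finding) -> List[str]:
    """List what a reviewer would still have to ask about."""
    gaps = []
    if not finding.file or finding.line < 1:
        gaps.append("location")
    if not finding.why_it_matters.strip():
        gaps.append("why_it_matters")
    if not finding.suggested_fix.strip():
        gaps.append("suggested_fix")
    return gaps


specific = Finding(
    file="app/db.py",
    line=42,
    issue="SQL built by string concatenation",
    why_it_matters="User input reaches the query unescaped",
    suggested_fix="Use a parameterized query",
)
vague = Finding(file="", line=0, issue="Code may be insecure",
                why_it_matters="", suggested_fix="")

print(missing_details(specific))
print(missing_details(vague))
```

A finding with no gaps can be acted on directly. One that is missing all three details is the kind of vague suggestion that sends the team back for another review cycle.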

We’ve built our AI to deliver that specificity. From agent-driven triage to context-aware recommendations, everything is designed to reduce guesswork. When teams can rely on the signals coming from their security tools, they move faster and make better decisions. That’s the baseline now, not the future.

Conclusion

The AI flood has arrived, but not everyone has built something real. Don’t settle for a label; look for what’s under the hood. The platforms that can explain their AI, apply it responsibly, and deliver results will be the ones that last. We’re building AI that delivers results, not just talks a big game. If you’re curious how that looks in practice, we’d gladly show you. Book a demo and see it in action.

FAQs

What is AI-washing in cybersecurity?

AI-washing refers to vendors labeling their tools as “AI-powered” without meaningful integration, detail, or value behind the claim.

How can buyers identify real AI tools in AppSec?

Look for platforms that explain what their AI does, where it operates, and how it supports users—transparency is a good litmus test.

Why is transparency in AI use important for AppSec?

Without visibility into how AI makes decisions, teams can’t trust outputs. In security, that lack of trust leads to friction and slower response times.

What separates AI-native tools from API wrappers?

AI-native tools handle decisions internally, with context and state-awareness. Wrappers simply relay data to external models, such as ChatGPT.

How does Qwiet use AI differently from other vendors?

Qwiet builds agentic workflows that evaluate context, prioritize risk, and integrate directly into developer environments for clear, valuable insights.