Key Takeaways

- AI has transformed both software development and the threat landscape. Code is being generated faster than ever, often without the context or security awareness needed to keep it safe. This demands a new approach to how we think about application security.

- Traditional AppSec tools fall short in modern, AI-assisted workflows. Legacy scanners struggle to interpret the structure and behavior of AI-generated code, resulting in excessive noise and missed risks that matter.

- Context-aware, developer-first security tools are the path forward. Tools like Qwiet AI analyze code structure, prioritize based on exploitability, and integrate directly into developer workflows, helping teams secure code more quickly and accurately.

Introduction

AI has changed the way we write software. It’s accelerated development to a pace that would’ve been hard to imagine just a few years ago. We’re seeing entire features scaffolded in minutes, code refactored in seconds, and pull requests populated by machines instead of teammates. But that speed comes with trade-offs. More code means more potential vulnerabilities, and AI doesn’t always understand the security implications of what it generates. In this article, you’ll learn how the application security landscape has shifted in response to AI, what risks are emerging from this transition, and how AppSec needs to evolve to stay relevant.

AppSec Then vs. Now

AppSec a Decade Ago

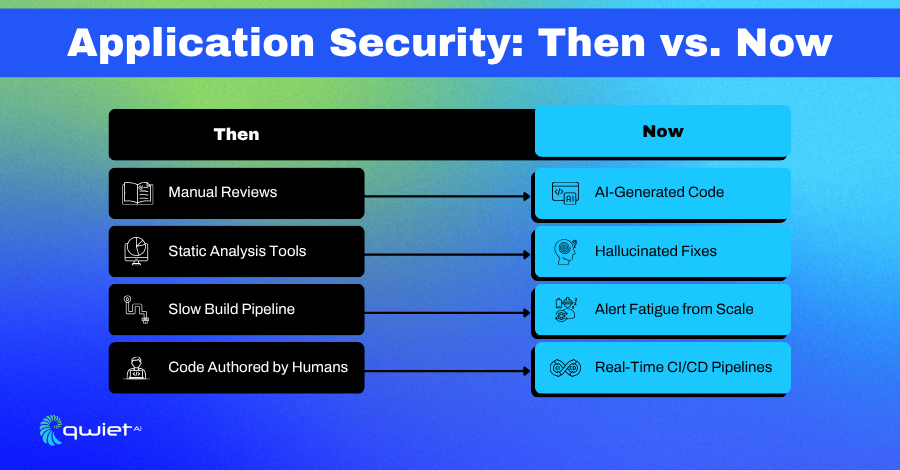

Ten years ago, application security was slower, more hands-on, and tightly scoped. Security teams had time to dive into codebases, talk to developers, and reason through design decisions.

Reviews were mostly manual, which made them more meaningful. When static analysis was used, it was applied to smaller, better-understood codebases. Dynamic testing simulated attacks too, but in well-defined, predictable environments.

Tooling wasn’t perfect, but the signal-to-noise ratio was manageable. A finding usually meant something worth looking into. When a vulnerability was flagged, it typically led to a conversation with someone who wrote the code and could explain what it was supposed to do.

That environment made it easier to build trust between the security and engineering teams. You could spend time figuring out not just whether a piece of code was exploitable, but why it was written that way in the first place. That context made it possible to fix problems at the root instead of chasing symptoms.

AppSec in the AI Era

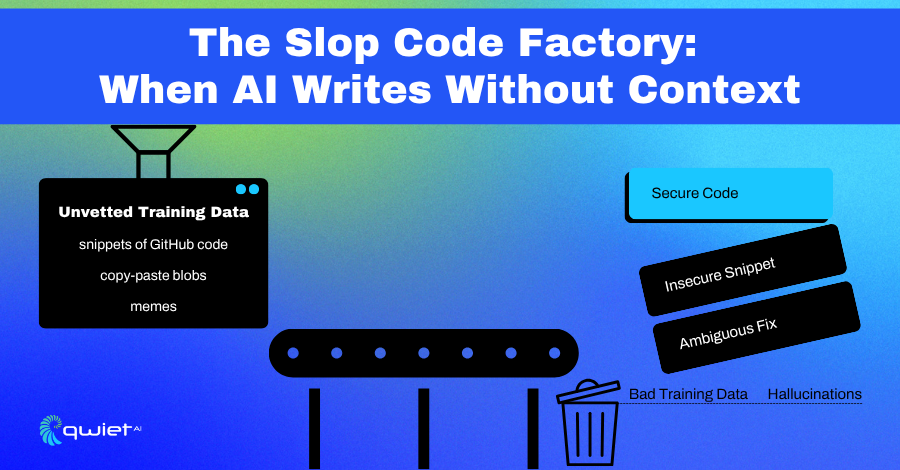

That model no longer holds up today; code is generated faster than it can be reviewed. Developers aren’t always the authors. AI tools generate large volumes of code with little to no traceability, often skipping documentation and tests altogether. Repositories are filling up with logic that works well enough to compile or run, but lacks the intent, reasoning, or context behind its construction.

Security teams are now dealing with a different class of risk: code that appears syntactically correct but is semantically weak or misleading. We’re seeing a rise in fragments that follow surface-level best practices but fail in deeper, structural ways. This is the new reality of “slop code”: output that’s functional but hollow, with security implications that aren’t obvious until runtime or post-deployment.

It’s no longer just about finding vulnerabilities; it’s about understanding what this code was intended to do, whether it belongs in the application at all, and whether a human has reviewed it. AppSec now needs to fill the gaps left by the speed and opacity of AI-assisted development.

The Risks of AI-Generated Code

Lack of Context and Security Awareness

AI-generated code often misses the deeper context that experienced developers build into their work. It can replicate syntax, match patterns, and mirror the shape of a solution, but that’s not the same as understanding the problem being solved.

When the input prompt is vague or the training data lacks nuance, the output tends to be shallow. It might pass a basic test, but it doesn’t usually account for edge cases, security constraints, or operational realities.

Without awareness of the application’s architecture or the data it handles, this lack of depth becomes a security risk: AI-generated code can introduce vulnerabilities simply through what it leaves out. Safe defaults, proper input validation, and access control logic aren’t guaranteed unless they’re explicitly requested. And even then, they’re often applied generically, without alignment to how the system actually works.
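As a concrete illustration, here is a minimal Python sketch using the standard-library `sqlite3` module. The `users` table and both helper functions are hypothetical. The first helper has the shape an assistant might produce from a prompt like “look up a user by name”: it works on ordinary input but builds SQL from the raw string. The second binds the value as a parameter, which is the input handling the prompt never asked for.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """In-memory database with two illustrative users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Works for normal names, but the input becomes part of the SQL text.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: sqlite3 binds `name` as data, never as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
    print(find_user_safe(conn, payload))         # []
```

Both functions pass a happy-path test with `"alice"`, which is exactly why the difference is easy to miss in a quick review.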

Hallucinated Fixes and Feedback Loops

There’s also a growing issue with AI-generated fixes and suggestions that look correct but fall apart under scrutiny. These “hallucinated fixes” appear as patches that silence a warning or pass a test, but don’t address the underlying problem. They can create a false sense of security, especially in environments where code is reviewed quickly or merged automatically.
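Here is a hypothetical example of such a patch, again as a Python sketch with an illustrative `orders` table. Stripping quotes from the input looks like sanitization and breaks the classic `' OR '1'='1` payload (it now causes a syntax error instead of leaking rows), but the value is still concatenated into SQL. In a numeric context an attacker doesn’t need quotes at all, so `1 OR 1=1` still returns every row. The root-cause fix validates the type and uses a bound parameter.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """In-memory database with two illustrative orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, owner TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "alice"), (2, "bob")])
    return conn


def get_order_patched(conn: sqlite3.Connection, order_id: str) -> list:
    # "Hallucinated fix": removing quotes looks like sanitization, but the
    # value is still concatenated into the SQL string.
    cleaned = order_id.replace("'", "")
    return conn.execute(f"SELECT id, owner FROM orders WHERE id = {cleaned}").fetchall()


def get_order_fixed(conn: sqlite3.Connection, order_id: str) -> list:
    # Root-cause fix: enforce the expected type, then bind it as a parameter.
    numeric_id = int(order_id)  # raises ValueError for input like "1 OR 1=1"
    return conn.execute(
        "SELECT id, owner FROM orders WHERE id = ?", (numeric_id,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    print(len(get_order_patched(conn, "1 OR 1=1")))  # 2: still injectable
```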

The bigger concern is how this compounds over time. Flawed AI-generated code that lands in public repositories can end up in the training data for future models, which then learn from that flawed output.

We end up with a cycle where weak code quality becomes normalized. The ecosystem starts generating and learning from its own mistakes at scale. That’s not theoretical anymore. It’s happening in real-world systems, and the impact is already evident in security findings.

Security Tools Can’t Keep Up

Static and Dynamic Tools Fall Short

Legacy security tools were built around a different kind of software. Static Application Security Testing (SAST) engines look for known patterns in human-written code. Dynamic Application Security Testing (DAST) simulates inputs to identify behavioral flaws in running applications. Both methods still have value, but they struggle when applied to AI-generated code that doesn’t follow conventional logic or structure.

The assumptions baked into these tools (how data flows, how functions are composed, how developers typically write code) no longer apply cleanly. AI doesn’t write with intent the way humans do; it stitches together statistically likely fragments.

That makes traditional pattern matching unreliable and incomplete, and gaps begin to appear between what tools flag and what truly matters.
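To see why, consider a deliberately naive, hypothetical Python rule that flags `execute(` calls built with string concatenation or an f-string on the same line. Real SAST engines are far more sophisticated than this, but the failure mode is similar in kind: the rule catches the conventional shape of a SQL injection and misses the identical flaw when the query is assembled in an unfamiliar way across several lines.

```python
import re

# Deliberately naive rule: execute( followed by an f-string, or by a
# string literal concatenated with "+", on the same line.
RULE = re.compile(r"""execute\(\s*(f["']|["'][^"']*["']\s*\+)""")


def scan(source: str) -> list[int]:
    """Return the 1-based line numbers where the rule matches."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if RULE.search(line)
    ]


# The same injection flaw written in two shapes.
CONVENTIONAL = 'cur.execute("SELECT * FROM t WHERE id = " + user_id)\n'
UNFAMILIAR = (
    'parts = ["SELECT * FROM t WHERE id =", user_id]\n'
    'query = " ".join(parts)\n'
    "cur.execute(query)\n"
)

if __name__ == "__main__":
    print(scan(CONVENTIONAL))  # [1]
    print(scan(UNFAMILIAR))    # []: same flaw, no match
```

Adding more patterns helps only until the next unfamiliar shape appears, which is why following the data flow matters more than matching syntax.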

Signal vs. Noise Without Context

When scanners lack context, they compensate with volume. That’s where developers get buried in false positives: warnings that look urgent but aren’t actionable.

Findings that trigger alerts but don’t affect runtime behavior drain time and energy from teams trying to keep up with fast-moving development cycles.

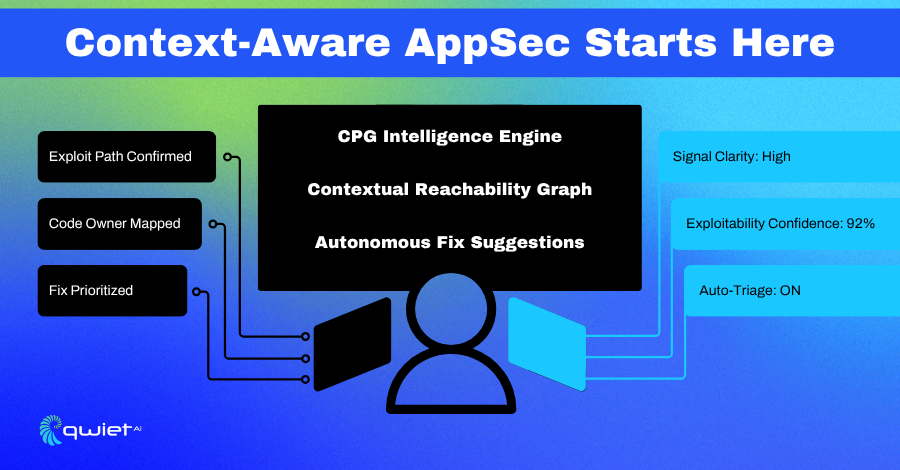

What’s missing is depth. Without code property graphs (CPGs) and real reachability analysis, there’s no clear view into whether a flaw is exploitable. That means the noise drowns out the signal, and the risks that matter most go unnoticed.

AppSec teams need to see not just that something might go wrong, but where it fits in the bigger picture and whether anyone can reach it in the first place.
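Reachability is the core of that question. The Python sketch below assumes a hypothetical call graph (all function names are illustrative) and runs a breadth-first search from the application’s entry points to decide which flagged sinks are actually callable. Anything outside that set is a candidate for deprioritizing rather than an emergency.

```python
from collections import deque

# Hypothetical call graph: caller -> callees.
CALL_GRAPH: dict[str, list[str]] = {
    "http_handler": ["parse_request", "lookup_user"],
    "parse_request": [],
    "lookup_user": ["run_sql"],
    "legacy_export": ["run_shell"],  # never called from an entry point
    "run_sql": [],
    "run_shell": [],
}


def reachable_from(entry_points: list[str], graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first search: every function an entry point can transitively call."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        for callee in graph.get(fn, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def triage(findings: dict[str, str], entry_points: list[str],
           graph: dict[str, list[str]]) -> dict[str, bool]:
    """Map each finding (id -> function containing the sink) to reachability."""
    live = reachable_from(entry_points, graph)
    return {fid: fn in live for fid, fn in findings.items()}


if __name__ == "__main__":
    findings = {"SQLI-1": "run_sql", "CMDI-1": "run_shell"}
    print(triage(findings, ["http_handler"], CALL_GRAPH))
    # {'SQLI-1': True, 'CMDI-1': False}
```

A static call graph like this ignores dynamic dispatch, reflection, and framework-registered handlers, so a real analysis has to model those before concluding that code is unreachable.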

A Smarter Path Forward

Built with Context, Tuned for Developers

When both humans and machines write code, security tools need to recognize more than syntax. Qwiet AI is designed to understand how that code functions. It doesn’t just look for insecure patterns; it maps behavior, data flow, and intent. That means fewer false positives, less wasted time, and more confidence in the results you’re acting on.

Security tools that treat every finding the same don’t scale. Qwiet AI focuses on code context: how user inputs move through functions, where they intersect with sensitive operations, and whether those interactions are reachable along real execution paths.

That extra layer of depth changes how you triage findings. You’re no longer chasing theoretical risks but working with ones that matter to your stack.
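Tracking how user input moves through functions is a form of taint analysis. Here is a minimal Python sketch over straight-line code only (no branches or loops), with hypothetical source, sanitizer, and sink names: a value read from a source is tainted, taint spreads through assignments, a sanitizer clears it, and a sink that receives tainted data is reported. It illustrates the general technique, not how Qwiet AI implements it.

```python
from dataclasses import dataclass

# Hypothetical names standing in for real framework APIs.
SOURCES = {"get_param"}       # returns attacker-controlled input
SANITIZERS = {"escape_html"}  # output is treated as safe
SINKS = {"render_raw"}        # sensitive operation


@dataclass
class Stmt:
    target: str            # variable assigned ("" for a bare call)
    call: str              # function producing the value
    args: tuple[str, ...]  # variables read by the call


def tainted_sinks(body: list[Stmt]) -> list[int]:
    """Forward taint propagation over straight-line code.
    Returns the indices of sink calls that receive tainted data."""
    tainted: set[str] = set()
    hits: list[int] = []
    for i, stmt in enumerate(body):
        incoming = any(arg in tainted for arg in stmt.args)
        if stmt.call in SINKS and incoming:
            hits.append(i)
        if not stmt.target:
            continue
        if stmt.call in SOURCES or (incoming and stmt.call not in SANITIZERS):
            tainted.add(stmt.target)
        else:
            tainted.discard(stmt.target)  # overwritten with clean data
    return hits


vulnerable = [
    Stmt("name", "get_param", ()),
    Stmt("greeting", "concat", ("name",)),
    Stmt("", "render_raw", ("greeting",)),
]
sanitized = [
    Stmt("name", "get_param", ()),
    Stmt("safe", "escape_html", ("name",)),
    Stmt("", "render_raw", ("safe",)),
]

if __name__ == "__main__":
    print(tainted_sinks(vulnerable))  # [2]
    print(tainted_sinks(sanitized))   # []
```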

The way issues are presented also matters. Instead of generic alerts, Qwiet AI links findings directly to the parts of the codebase developers are working on.

You can see precisely how a vulnerability propagates, what data it touches, and what conditions lead to exposure. It’s actionable in the way a well-documented PR comment is actionable: fast to understand, and clear in what needs to be done.

Graph-Based Analysis and Agent-Led Triage

Qwiet AI uses code property graphs (CPGs) to unify the abstract syntax tree (AST), control flow, and data flow into a single structure. That lets it reason about your application the way a senior engineer might: tracing execution paths, understanding conditional logic, and mapping how data passes between modules. This isn’t signature matching. It’s structural reasoning.
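A toy Python illustration of why one unified graph helps: the hypothetical `MiniCPG` class below stores AST, control-flow (CFG), and data-flow (DFG) edges in a single structure. It is not Qwiet AI’s data model or any real CPG schema. One query asks whether request data flows into a database call; a second asks whether control can reach that call without passing through an authorization check. The handler being modeled is described in the comments.

```python
from collections import defaultdict, deque


class MiniCPG:
    """Toy graph with typed edges: AST, CFG, and DFG edges share one store."""

    def __init__(self) -> None:
        self.edges: defaultdict = defaultdict(list)  # (node, kind) -> successors

    def add(self, src: str, kind: str, dst: str) -> None:
        self.edges[(src, kind)].append(dst)

    def reach(self, start: str, kind: str, avoid: frozenset = frozenset()) -> set:
        """Nodes reachable from `start` along `kind` edges, never entering `avoid`."""
        seen, queue = {start}, deque([start])
        while queue:
            node = queue.popleft()
            for nxt in self.edges[(node, kind)]:
                if nxt not in seen and nxt not in avoid:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen


# Hypothetical handler being modeled:
#   def handler(request):
#       uid = request.arg("id")                         # n1: user input
#       if request.path == "/admin":                    # n2: branch
#           require_admin()                             # n3: auth check
#       db.query("SELECT * FROM users WHERE id = " + uid)  # n4: sink
cpg = MiniCPG()
for stmt in ("n1", "n2", "n3", "n4"):
    cpg.add("handler", "AST", stmt)  # syntactic containment
for src, dst in [("handler", "n1"), ("n1", "n2"), ("n2", "n3"), ("n3", "n4"), ("n2", "n4")]:
    cpg.add(src, "CFG", dst)         # possible execution order
cpg.add("n1", "DFG", "n4")           # uid flows into the query

tainted = "n4" in cpg.reach("n1", "DFG")
unguarded = "n4" in cpg.reach("handler", "CFG", avoid=frozenset({"n3"}))
print(tainted and unguarded)  # True: tainted data reaches the sink with no auth check
```

Neither edge type answers the question alone: data flow shows the query is tainted, and control flow shows the `else` branch skips the check.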

The system also applies agentic AI to sort through what it finds. Rather than simply flagging CVEs or known vulnerability patterns, it assesses exploitability based on reachability and privilege levels.

It determines whether a tainted variable is passed to a potentially hazardous function, whether authentication checks protect it, and whether it’s part of a legitimate user interaction. That drastically narrows the surface area of things you need to fix.

This type of analysis alters the feedback loop; you’re not sifting through hundreds of alerts to find something actionable. You’re working from a short list of high-confidence, high-impact findings ranked by how much risk they introduce in your environment. It saves hours of guesswork.
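How those factors combine is product-specific, so the weights in this Python sketch are purely hypothetical. It shows the shape of the idea: unreachable findings drop out, an authentication check lowers the score, the privilege level of the sink raises it, and what remains is sorted into a short list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str
    reachable: bool      # tainted input actually reaches the sink
    behind_auth: bool    # an authentication check guards the path
    sink_privilege: int  # hypothetical scale: 1 = low ... 3 = runs as admin/root


def risk_score(f: Finding) -> float:
    """Hypothetical heuristic: unreachable scores 0; auth halves the score."""
    if not f.reachable:
        return 0.0
    score = float(f.sink_privilege)
    if f.behind_auth:
        score *= 0.5
    return score


def shortlist(findings: list[Finding], limit: int = 5) -> list[Finding]:
    """Drop zero-risk findings and rank the rest, highest risk first."""
    ranked = sorted(
        (f for f in findings if risk_score(f) > 0),
        key=risk_score,
        reverse=True,
    )
    return ranked[:limit]


if __name__ == "__main__":
    findings = [
        Finding("xss-admin-panel", reachable=True, behind_auth=True, sink_privilege=2),
        Finding("sqli-search", reachable=True, behind_auth=False, sink_privilege=3),
        Finding("cmdi-dead-code", reachable=False, behind_auth=False, sink_privilege=3),
    ]
    for f in shortlist(findings):
        print(f.rule, risk_score(f))  # sqli-search 3.0, then xss-admin-panel 1.0
```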

Securing Code at Dev Speed

Release cycles aren’t slowing down; teams are shipping multiple times daily, often with code generated or heavily assisted by AI. Security needs to keep up with that pace without dragging engineering into review loops that don’t produce results.

That starts with embedding analysis directly into the workflows developers already use, such as PRs, IDEs, and CI pipelines, without blocking the path to production unless something hazardous is being introduced. The goal is to fit security into existing workflows, not force new ones.
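One way to express “block only when something hazardous is introduced” is a small gate script that sets the pipeline’s exit code. In this Python sketch the JSON report format, severity names, and threshold are hypothetical assumptions, not any scanner’s actual output: the gate fails the build only for reachable findings at or above the chosen severity and lets everything else through for normal review.

```python
import json
import sys

# Hypothetical report format: a JSON list of objects with
# "rule", "severity", and "reachable" keys.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


def gate(report_json: str, block_at: str = "high") -> int:
    """Return an exit code: 1 only if a reachable finding meets the threshold."""
    threshold = SEVERITY_ORDER[block_at]
    blocking = [
        f
        for f in json.loads(report_json)
        if f.get("reachable", False) and SEVERITY_ORDER[f["severity"]] >= threshold
    ]
    for f in blocking:
        print(f"BLOCKING: {f['rule']} ({f['severity']})", file=sys.stderr)
    return 1 if blocking else 0
```

In a CI job, a wrapper along the lines of `sys.exit(gate(open(report_path).read()))` would turn the return value into a pass or fail step, where `report_path` is a placeholder for wherever your scanner writes its report.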

Qwiet AI makes that integration smoother; it gives you a real-time view into whether a piece of code introduces risk, how exploitable it is, and what needs to change. You don’t need to be a security expert to understand the finding. The tool meets you at your level, provides clear signals over noise, and respects your time. That’s what scaling security in modern development looks like.

Conclusion

AI hasn’t just changed development; it’s reshaped the threat model. We build, test, and release faster than ever, and security must evolve at the same pace. AppSec teams can’t rely on workflows built for slower cycles. They must operate where code lives and help developers make informed decisions quickly. That means using context, identifying what’s truly reachable, and cutting through noise. To maintain stability without increasing risk, security must scale with development. Qwiet AI is built for that shift. If you’re ready to see how it fits into your environment, book a demo and take a closer look.

FAQs

How has AI changed application security?

AI has accelerated software delivery, but it’s also introduced new risks. AI-generated code often lacks context, architectural alignment, and adherence to security best practices. This shift forces AppSec teams to rethink how they detect and prioritize threats in high-velocity environments.

What are the risks of using AI-generated code in software development?

AI-generated code can appear functional but lack critical security measures, such as input validation, access control, or error handling. These gaps may not be evident during testing but can create real vulnerabilities in production systems.

Why do traditional AppSec tools struggle with AI-assisted development?

Conventional tools like SAST and DAST often rely on pattern recognition and static rules. AI-generated code may not follow predictable patterns, leading to a flood of irrelevant alerts or overlooked risks. This misalignment wastes developer time and reduces trust in the tools.

How does Qwiet AI improve code security in modern development environments?

Qwiet AI combines code property graphs and AI-driven prioritization to analyze code based on structure and data flow. It focuses on what’s exploitable and relevant, offering clear, contextual insights that help developers act more quickly and confidently.

What should AppSec teams do to adapt to AI-influenced development workflows?

Teams need to shift security everywhere, integrating tools into CI/CD pipelines and developer environments. They should focus on tools that reduce noise, highlight real risks, and scale with the pace of code generation. Qwiet AI is built with this in mind.