Introduction

As AI becomes more embedded in our applications, it creates new security challenges. Adversarial AI is a growing threat, with attackers using AI to exploit vulnerabilities and bypass defenses. In this blog, we’ll break down how adversarial AI works, the different types of attacks it can launch, and practical strategies for defending your applications. By reading this, you’ll gain insight into the risks adversarial AI poses and the steps you can take to strengthen your security.

How Adversarial AI Works

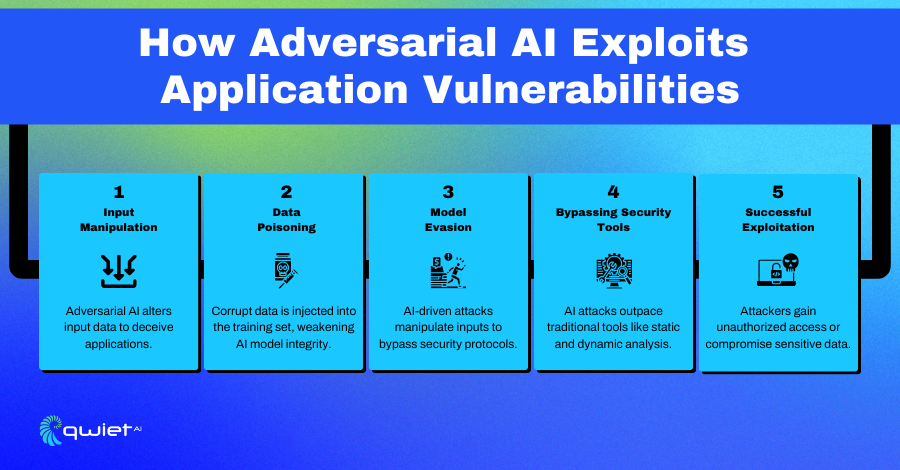

Adversarial AI involves deliberately manipulating machine learning models to exploit their weaknesses and get around security measures. In application security (AppSec), this could mean targeting the AI models that handle authentication or fraud detection, making them behave in ways they’re not supposed to. Attackers might manipulate inputs or the environment to trick the AI into making wrong decisions, like giving access to someone who shouldn’t have it or missing a security threat. That is why any solid AppSec strategy needs to account for AI vulnerabilities.

A real-world example of adversarial AI in action is the set of attacks demonstrated against Tesla Autopilot. In these demonstrations, security researchers modified road signs or placed small markings on the road to fool the car’s AI into misinterpreting what it “saw.” These subtle manipulations, easy for a human driver to overlook, caused the car to make dangerous decisions, like speeding up or steering into the wrong lane. It’s a striking reminder of how adversarial attacks can target AI in ways that directly impact safety.

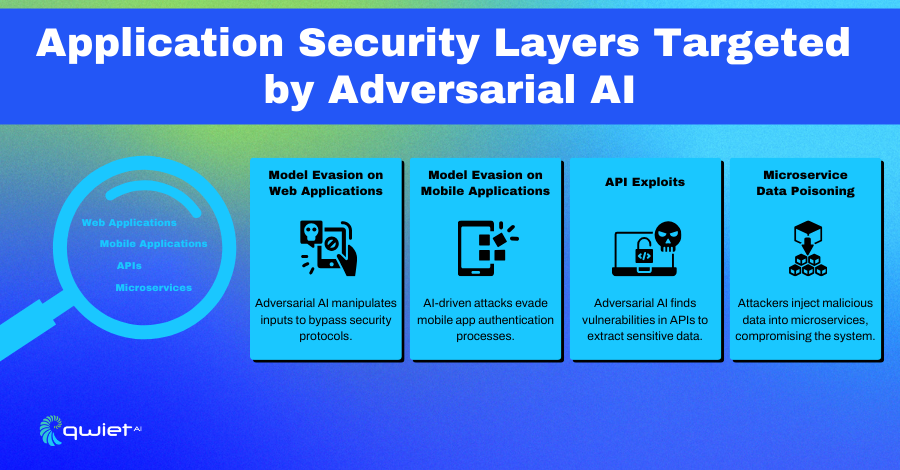

AI threats are becoming more advanced, and application security is one of the areas where this is most visible. Attackers are now using AI to automate vulnerability scanning, enabling them to find weaknesses in web apps, APIs, and microservices faster than ever. This rapid development makes adversarial AI a growing concern, pushing security teams to look more closely at how they defend against these increasingly sophisticated attacks.

Types of Adversarial AI Attacks in AppSec

In application security (AppSec), adversarial AI attacks take several forms, each designed to exploit weaknesses in AI systems.

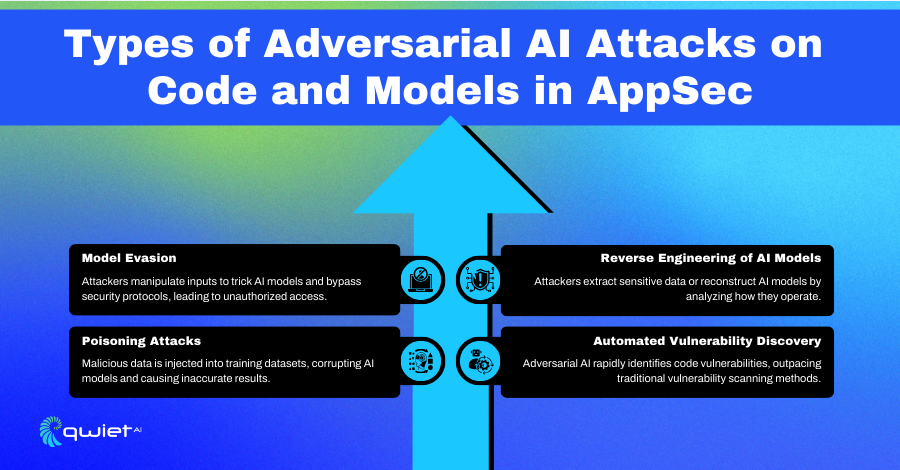

- Model Evasion: Attackers manipulate inputs to trick AI models into bypassing security defenses. They craft inputs designed to confuse the model, allowing malicious activity to go undetected.

- Poisoning Attacks: This happens when attackers sneak malicious data into the training sets of AI models. It messes up the model’s learning process, causing it to make bad decisions or predictions down the line.

- Reverse Engineering of AI Models: Attackers study the outputs of AI models to reverse-engineer them. This lets them extract sensitive information or determine how to exploit the model for unauthorized access.

- Automated Vulnerability Discovery: Attackers use AI to quickly scan applications and uncover security weaknesses faster than traditional methods. This gives them a head start on finding and exploiting vulnerabilities before they can be fixed.
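To make the first item in this list concrete, here is a minimal sketch of model evasion against a toy linear scorer. The weights, bias, feature values, and function names are all hypothetical and chosen for illustration; real models are far more complex, but the idea of nudging each input feature in the direction that lowers the model's score is the same.

```python
# Hypothetical linear fraud scorer: score = w . x + b, flagged when score > 0.
# WEIGHTS and BIAS are illustrative values, not taken from any real model.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5


def score(x):
    """Return the raw score the toy model assigns to feature vector x."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def is_flagged(x):
    """The toy model flags an input as malicious when its score is positive."""
    return score(x) > 0


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def evade(x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to x is simply
    WEIGHTS, so stepping against sign(WEIGHTS) is the most effective bounded change.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


original = [1.0, 0.5, 1.0]
adversarial = evade(original, epsilon=0.5)

print(is_flagged(original))     # the unmodified input is flagged
print(is_flagged(adversarial))  # the slightly shifted input is not
```

No single feature moves by more than `epsilon`, yet the decision flips. That is the property that makes evasion hard to spot from the input alone.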

Impact of Adversarial AI on Application Security

Adversarial AI is creating significant new risks for web apps, mobile apps, APIs, and microservices. These are essential parts of modern application ecosystems, and adversarial AI gives attackers more advanced ways to target them. By manipulating inputs or injecting malicious data, adversarial AI can bypass traditional security layers and exploit often missed vulnerabilities.

This increases the chance of data breaches or unauthorized access. Solutions like Qwiet AI’s Autofix help by automatically detecting and fixing these vulnerabilities, giving organizations a proactive way to address security issues before they get worse.

One of the reasons adversarial AI is so dangerous is that it can evade traditional security tools that many organizations rely on. Tools like static analysis (which scans code for vulnerabilities before it’s run), dynamic analysis (which tests apps while they’re running), and runtime application self-protection (RASP, which detects and blocks threats during operation) are designed to catch known vulnerabilities and patterns.

However, adversarial AI works differently; it can manipulate inputs to make malicious behavior look normal, slipping past these defenses. It exploits weaknesses right in the code, often through complex interactions that these tools can’t detect, which makes it harder to block or prevent the attacks.

With adversarial AI becoming more advanced, there’s a real need for AI-driven security solutions to fight back. Traditional methods alone just can’t keep pace with the speed and sophistication of these threats. AI-powered security systems can learn from these evolving attack methods and adapt faster than manual processes. By using AI to combat AI, security teams can better defend applications, identify new attack patterns, and respond faster to protect against the next wave of threats.

Defensive Strategies Against Adversarial AI in AppSec

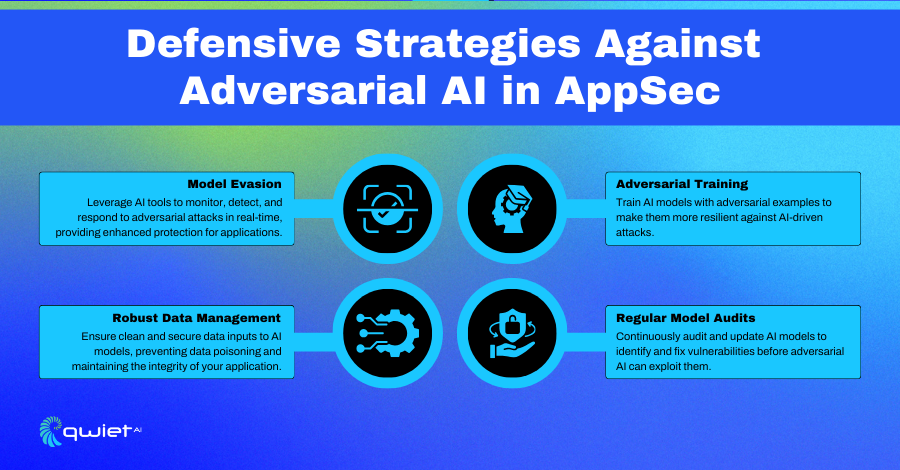

AI-Powered Security Solutions

More companies are turning to AI-powered security solutions to help detect and defend against adversarial attacks. These tools can analyze vast amounts of data quickly, identifying unusual patterns that may indicate an attack.

The advantage of AI-based security is that it continuously learns from emerging threats, becoming better at detecting sophisticated adversarial techniques over time. This enables organizations to respond faster and more accurately to potential breaches, strengthening their security posture.
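As a rough illustration of "identifying unusual patterns," the sketch below flags observations that sit far from a known-good baseline. It is a simple statistical stand-in (a z-score check), not the learned models a commercial AI security tool would use, and the traffic numbers, function name, and threshold are assumptions made for the example.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return the observed values that lie more than `threshold` standard
    deviations from the mean of the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is unusual.
        return [v for v in observed if v != mu]
    return [v for v in observed if abs(v - mu) / sigma > threshold]


# Hypothetical requests-per-minute figures for one API endpoint.
baseline_requests = [98, 102, 100, 97, 103, 101, 99, 100]
observed_requests = [101, 96, 450, 104]

suspicious = find_anomalies(baseline_requests, observed_requests)
print(suspicious)  # [450]
```

A fixed threshold like this is easy for an adaptive attacker to stay under, which is part of why the text argues for systems that keep learning from new attack patterns.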

Robust Data Management

Proper data management is crucial for preventing adversarial attacks, especially since many adversarial techniques rely on feeding manipulated or malicious data into AI models. To defend against this, companies must tightly control the data used in training and production environments.

This includes verifying the integrity of data sources, filtering out suspicious or abnormal data inputs, and securing data pipelines. The more secure the data, the harder it is for attackers to corrupt AI models through data manipulation.
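One way to verify the integrity of training data is to record a cryptographic digest of each approved batch and reject anything that does not match before training. The sketch below assumes a simple in-memory manifest and made-up batch names and contents; in practice the manifest would be stored and signed separately from the data it describes.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def verify_records(records, manifest):
    """Split records into (trusted, rejected) names.

    A record is trusted only if it appears in the manifest and its digest
    matches the digest recorded when the data was approved.
    """
    trusted, rejected = [], []
    for name, payload in records.items():
        expected = manifest.get(name)
        if expected is not None and sha256_of(payload) == expected:
            trusted.append(name)
        else:
            rejected.append(name)
    return trusted, rejected


# Hypothetical approved training batches and the manifest built from them.
clean = {
    "batch_a": b"amount=12.50,label=ok",
    "batch_b": b"amount=9800.00,label=fraud",
}
manifest = {name: sha256_of(payload) for name, payload in clean.items()}

# What arrives at training time: one label flipped, one unknown batch added.
incoming = dict(clean)
incoming["batch_b"] = b"amount=9800.00,label=ok"
incoming["batch_c"] = b"amount=1.00,label=ok"

trusted, rejected = verify_records(incoming, manifest)
print(trusted)   # ['batch_a']
print(rejected)  # ['batch_b', 'batch_c']
```

Digest checks only catch tampering after approval. They do not tell you whether the data was clean when it was approved, so they complement input filtering rather than replace it.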

Adversarial Training

Adversarial training is a technique in which AI models are intentionally exposed to adversarial examples during training. These inputs are crafted specifically to trick the model, and by learning from them, the model becomes more resistant to future attacks.

This process strengthens the model’s ability to identify and reject malicious inputs in real-world scenarios. By incorporating adversarial training into the development cycle, companies can improve their AI models’ resilience against sophisticated attack strategies.
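The following is a minimal sketch of adversarial training on a tiny logistic regression model, written in plain Python so it runs without extra dependencies. The adversarial examples are generated with a fast-gradient-sign style perturbation, which is one common choice and not necessarily what any particular product uses. The dataset, `epsilon`, learning rate, and function names are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability that x belongs to class 1 under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Perturb x by epsilon per feature in the direction that increases the loss.

    For logistic loss, d(loss)/dx_i = (p - y) * w_i.
    """
    err = predict(w, b, x) - y
    return [xi + epsilon * sign(err * wi) for wi, xi in zip(w, x)]


def train(data, epsilon, epochs=200, lr=0.1):
    """Stochastic gradient descent on each clean point and its adversarial twin."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            for sample in (x, fgsm(w, b, x, y, epsilon)):
                err = predict(w, b, sample) - y
                w = [wi - lr * err * si for wi, si in zip(w, sample)]
                b -= lr * err
    return w, b


def accuracy(w, b, data, epsilon=0.0):
    """Accuracy on the data, optionally after attacking each point with fgsm."""
    hits = 0
    for x, y in data:
        attacked = fgsm(w, b, x, y, epsilon) if epsilon else x
        hits += int((predict(w, b, attacked) > 0.5) == bool(y))
    return hits / len(data)


# Hypothetical two-feature dataset: class 0 in the lower left, class 1 in the upper right.
data = [
    ([-2.0, -1.5], 0), ([2.0, 1.5], 1),
    ([-1.5, -2.0], 0), ([1.5, 2.0], 1),
    ([-2.5, -2.0], 0), ([2.5, 2.0], 1),
    ([-2.0, -2.5], 0), ([2.0, 2.5], 1),
]

w, b = train(data, epsilon=0.5)
print(accuracy(w, b, data))               # accuracy on clean inputs
print(accuracy(w, b, data, epsilon=0.5))  # accuracy on attacked inputs
```

On real models the trade-off is less comfortable than in this toy case: adversarial training usually costs extra compute and can reduce accuracy on clean inputs, so `epsilon` needs to reflect the perturbations you actually expect.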

Regular Model Audits

Frequent audits of AI models are essential to maintaining security against adversarial threats. These audits involve reviewing the model’s performance, detecting potential vulnerabilities, and updating the model to adapt to new attack methods.

Since adversarial techniques evolve, regular evaluation helps ensure that AI models are continuously fortified against new threats. This ongoing assessment is key to ensuring that the AI systems remain secure and effective in the face of increasingly complex adversarial attacks.
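A recurring audit can be as simple as comparing a model's accuracy on clean inputs with its accuracy when every input is nudged within a small budget, then failing the audit if the gap is too large. The sketch below assumes a hypothetical rule-based model, made-up samples, and illustrative values for `epsilon` and `max_drop`. Checking only the corner perturbations is exact for linear decision rules like this one but is only a heuristic for nonlinear models.

```python
from itertools import product


def accuracy(model, samples):
    """Fraction of samples the model labels correctly."""
    return sum(model(x) == y for x, y in samples) / len(samples)


def worst_case_accuracy(model, samples, epsilon):
    """Count a sample as correct only if the model is right for every
    perturbation that moves each feature by exactly +epsilon or -epsilon."""
    correct = 0
    for x, y in samples:
        corners = product((-epsilon, epsilon), repeat=len(x))
        if all(model([xi + d for xi, d in zip(x, delta)]) == y for delta in corners):
            correct += 1
    return correct / len(samples)


def audit(model, samples, epsilon, max_drop):
    """Return an audit report; the audit passes when robustness loss is within max_drop."""
    clean = accuracy(model, samples)
    robust = worst_case_accuracy(model, samples, epsilon)
    return {
        "clean_accuracy": clean,
        "robust_accuracy": robust,
        "passed": clean - robust <= max_drop,
    }


def toy_model(x):
    """Hypothetical rule: flag (1) when the two risk features sum to more than 10."""
    return int(x[0] + x[1] > 10)


samples = [
    ([2.0, 3.0], 0),
    ([9.0, 4.0], 1),
    ([5.0, 5.5], 1),  # sits close to the decision boundary
    ([4.0, 5.0], 0),
]

report = audit(toy_model, samples, epsilon=0.5, max_drop=0.1)
print(report)
# {'clean_accuracy': 1.0, 'robust_accuracy': 0.75, 'passed': False}
```

Here the model is perfect on clean data but fails the audit because one sample near the boundary can be flipped. Clean accuracy alone would not reveal that gap.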

Building a Resilient AI Defense in Application Security

A proactive approach to AI-driven security is essential for staying ahead of adversarial AI threats. One key measure is integrating AI-based security solutions early in your application lifecycle, allowing for real-time monitoring and threat detection.

Regular updates to these systems are just as important. Attackers continuously evolve their techniques, so your AI defenses should evolve, too. Implement continuous testing and retraining of AI models to ensure they are resilient against adversarial inputs. Routine stress testing with adversarial examples can expose weak points before attackers find them.

Collaboration between security teams, developers, and AI experts is a practical way to close security gaps. Security teams can outline the specific threats and requirements, while developers ensure the secure integration of AI systems within applications.

AI experts bring deep insights into how models can be hardened against adversarial manipulation. You should also establish shared workflows where these teams continuously evaluate and fine-tune AI models as part of the CI/CD pipeline. Cross-team threat modeling sessions can also help anticipate adversarial attacks that target different parts of your application.

Government policies and industry standards provide important guidelines for securing AI in a structured way. Organizations should actively align with established frameworks like NIST’s AI Risk Management Framework or ISO/IEC standards, which offer detailed practices for developing and deploying AI securely.

Conclusion

Adversarial AI is reshaping the application security landscape with threats like model evasion, data poisoning, and automated vulnerability discovery. We’ve explored how AI-powered tools, strong data practices, adversarial training, and regular audits can help defend against these risks. As attacks grow more sophisticated, staying proactive is key. If you’re looking to boost your defenses, get in touch with Qwiet AI to see how we can help you detect and fix vulnerabilities before they’re exploited.

About Qwiet AI

Qwiet AI empowers developers and AppSec teams to dramatically reduce risk by quickly finding and fixing the vulnerabilities most likely to reach their applications and ignoring reported vulnerabilities that pose little risk. Industry-leading accuracy allows developers to focus on security fixes that matter and improve code velocity while enabling AppSec engineers to shift security left.

A unified code security platform, Qwiet AI scans for attack context across custom code, APIs, OSS, containers, internal microservices, and first-party business logic by combining results of the company’s and Intelligent Software Composition Analysis (SCA). Using its unique graph database that combines code attributes and analyzes actual attack paths based on real application architecture, Qwiet AI then provides detailed guidance on risk remediation within existing development workflows and tooling. Teams that use Qwiet AI ship more secure code, faster. Backed by SYN Ventures, Bain Capital Ventures, Blackstone, Mayfield, Thomvest Ventures, and SineWave Ventures, Qwiet AI is based in Santa Clara, California. For information, visit: https://qwietdev.wpengine.com