Key Takeaways

- AI-generated code introduces security risks – Vulnerabilities, hardcoded credentials, and improper validation can be embedded in AI-assisted development.

- Training data biases affect security – AI models inherit flaws from open-source repositories, leading to insecure code recommendations.

- Proactive security measures are necessary – Human review, AI-driven scanning, and real-time threat detection help mitigate risks in AI-generated code.

Introduction

AI-powered coding tools like GitHub Copilot, OpenAI Codex, and Tabnine accelerate software development by automating code generation. While they improve productivity, they also introduce security risks, including vulnerabilities, biases, and maintainability issues. Studies show that AI-generated code can include exploitable flaws that spread across projects if left unchecked. This article will teach you about these risks, their impact on software security, and best practices for mitigating them.

Insecure Code Generation

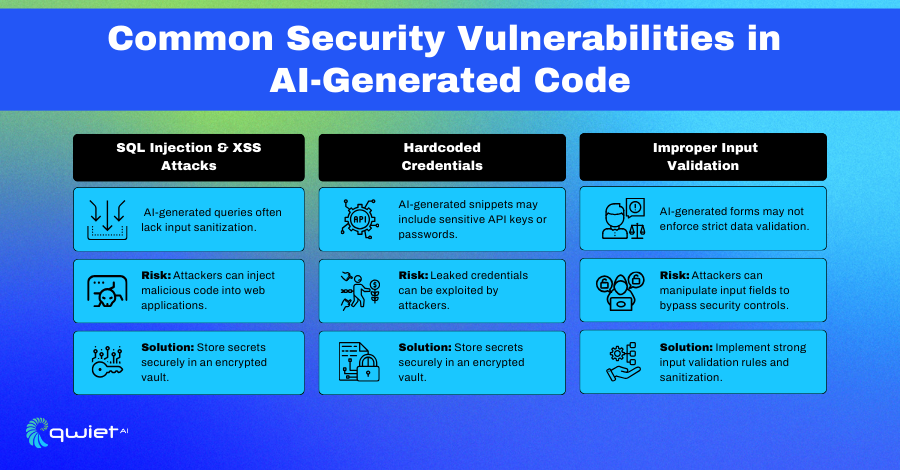

Common Security Vulnerabilities in AI-Generated Code

AI-generated code often introduces security vulnerabilities that can be exploited if left unchecked. Studies show that without proper validation, AI-assisted development leads to insecure coding practices that increase applications’ attack surface. These risks are amplified when developers assume AI-generated code is always secure without manual review.

- SQL Injection & XSS Attacks – AI-generated queries may lack proper sanitization, making applications vulnerable to injection-based attacks.

- Hardcoded Credentials – Some AI-generated snippets embed sensitive information directly in the code, increasing the risk of credential leaks.

- Improper Input Validation – AI models may not enforce strict data validation, allowing attackers to manipulate input fields and trigger unexpected behaviors.
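The three weaknesses above each have a well-known counterpart in safe code. The following is a minimal sketch using only Python's standard library, not a complete defense. The `users` table, the username policy in `USERNAME_RE`, and the `EXAMPLE_API_KEY` variable name are assumptions made for illustration.

```python
import html
import os
import re
import sqlite3

# Hypothetical allow-list policy: 3 to 32 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(value: str) -> str:
    """Reject anything outside the allow-list instead of trying to clean it."""
    if not USERNAME_RE.fullmatch(value):
        raise ValueError("invalid username")
    return value


def find_user_unsafe(conn, username):
    # Pattern to avoid: user input is concatenated into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Parameterized query: the value is bound separately from the SQL text.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


def render_comment(text: str) -> str:
    # Escape HTML metacharacters before placing user text in a page (XSS).
    return f"<p>{html.escape(text)}</p>"


def load_api_key(env_var: str = "EXAMPLE_API_KEY") -> str:
    # Read the secret from the environment instead of hardcoding it.
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return key


def demo():
    """Run the same injection payload through both query functions."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "x' OR '1'='1"
    return find_user_unsafe(conn, payload), find_user_safe(conn, payload)
```

In `demo()`, the concatenated query returns every row for the injection payload, while the parameterized query treats the payload as an ordinary string and matches nothing.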

Academic Insights & Empirical Evidence

AI-generated code introduces security risks because models learn from public repositories, many of which contain insecure patterns. Unlike human developers who apply security awareness, AI generates code based on probability, not intent, increasing the risk of vulnerabilities.

A 2022 study by Georgetown’s Center for Security and Emerging Technology (CSET) found that 40% of AI-generated code failed secure coding guidelines, frequently lacking input sanitization, proper authentication, and secure error handling. This shows that AI models replicate secure and insecure patterns without distinction, making unreviewed AI-generated code a potential security liability.

MIT’s 2023 research further revealed that developers using AI-assisted tools often overlook security flaws in generated code, assuming it follows best practices. Without manual review, vulnerabilities such as weak access controls and poor validation persist, increasing attack surfaces in production environments.

A joint study by Microsoft and OpenAI highlighted that AI models struggle with context-aware security, generating functionally correct but insecure code. Since AI does not inherently understand security implications, relying on it without verification can propagate vulnerabilities at scale, making oversight essential to prevent systemic security failures.

Vulnerabilities in AI Models & Training Data Risks

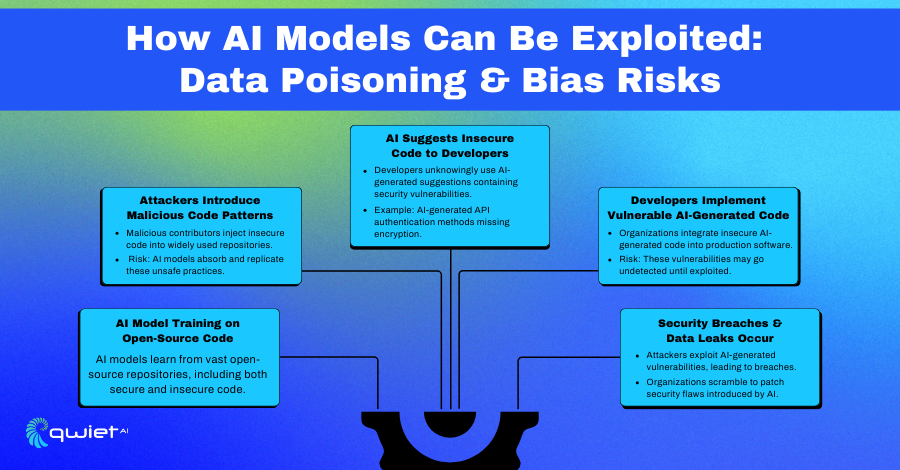

Data Poisoning & Model Manipulation

AI models trained on open-source codebases inherit both secure and insecure patterns. Attackers exploit this by injecting malicious code into widely used repositories, influencing AI-generated outputs. This technique, known as data poisoning, allows attackers to introduce insecure coding patterns that AI models recommend to developers.

An example is the introduction of insecure authentication methods into popular libraries. If trained on this tainted data, an AI model may generate code replicating these vulnerabilities. Over time, this propagates insecure code across multiple applications, making them easier targets for exploitation.

Biases in AI-Generated Code

AI-generated code reflects the biases present in its training data. If a dataset includes a history of insecure coding practices, the AI will treat these patterns as acceptable and continue suggesting them. This leads to reliance on outdated or vulnerable libraries, increasing the risk of security flaws.

Inconsistencies in coding styles also impact maintainability. AI-generated code often lacks uniform structure, making it harder to audit for security flaws. Since AI models do not inherently understand security best practices, they can unknowingly reinforce legacy vulnerabilities, passing them to new projects.

Microsoft’s research further highlighted how large language models (LLMs) struggle with context-aware security. Their study found that AI often produces functionally correct but insecure code, failing to enforce secure authentication, encryption, and access controls. Since AI cannot distinguish between secure and insecure patterns without additional validation, developers must actively review and refine AI-generated suggestions to avoid introducing security risks.

Downstream Cybersecurity Impacts

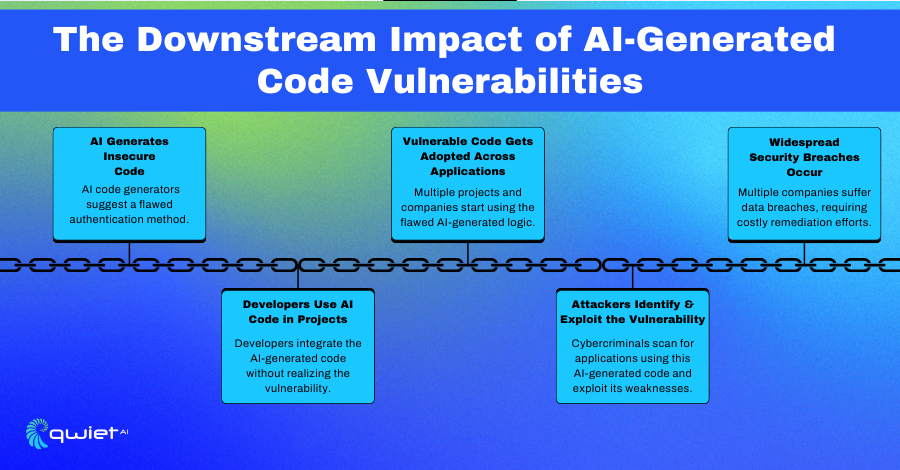

Propagation of AI-Generated Vulnerabilities

AI-generated code is widely integrated into software development, influencing security at every level. When vulnerabilities are introduced through AI-generated snippets, they don’t remain isolated; they spread as developers unknowingly adopt them. This can make insecure coding patterns standard across multiple projects, compounding security risks.

One of the biggest concerns is the creation of vulnerability chains, where AI-generated components with security flaws are integrated into mission-critical applications. A single insecure function recommended by an AI coding assistant can find its way into production environments and be reused in various systems. Without strict oversight, these flaws persist, making applications more susceptible to exploitation at scale.

Feedback Loops & Reinforced Insecurity

AI models learn from existing repositories, absorbing patterns from both secure and insecure code. If vulnerabilities exist in the training data, AI will generate code that follows those same flawed practices. Over time, as AI-generated code becomes a larger part of software development, models may reinforce bad security habits by continuously training on past mistakes.

This issue is already evident in real-world testing. A 2023 study found that AI-generated boilerplate code for API authentication contained security weaknesses in 30% of cases. When widely adopted, insecure code creates a self-reinforcing loop where vulnerabilities become embedded in AI training datasets. This increases the difficulty of correcting systemic security flaws, making manual review and proactive security enforcement necessary to break the cycle.

Mitigation Strategies & Best Practices

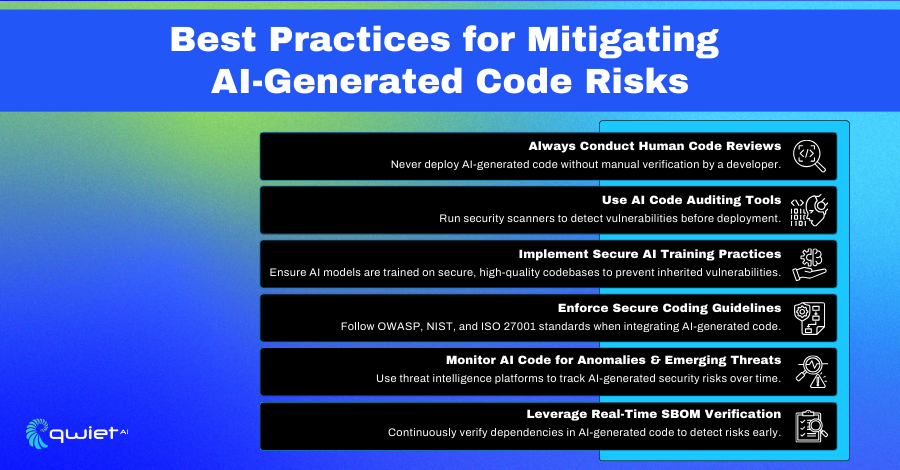

Improving AI Code Security

AI-generated code needs direct oversight to avoid introducing security risks into applications. Developers must review and verify AI-generated code before deployment to ensure that security flaws are not unintentionally introduced. While AI can accelerate development, it does not replace the need for human judgment, especially when handling authentication logic, input validation, and access controls.

Security scanning tools designed for AI-generated code help detect vulnerabilities before they become a threat. AI code auditing tools analyze generated snippets against known security patterns, identifying risks such as insecure dependencies, hardcoded credentials, or improper cryptographic implementations.

These tools act as safeguards, catching security flaws that might be overlooked in manual reviews. AI models should also be trained using secure coding principles, requiring vendors to integrate industry best practices into their training datasets. Models prioritizing security-aware training can reduce the likelihood of generating unsafe code, making AI-assisted development more reliable.
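As a rough illustration of the pattern matching that such auditing tools perform, the sketch below checks a snippet line by line against two rules. The rule names and regular expressions are hypothetical and deliberately small. Production scanners use far larger rule sets and deeper analysis.

```python
import re

# Hypothetical, deliberately small rule set for illustration only.
RULES = {
    "hardcoded-secret": re.compile(
        r"(?i)\b(password|passwd|secret|api_key|token)\b\s*=\s*['\"][^'\"]+['\"]"
    ),
    "weak-hash": re.compile(r"\bhashlib\.(md5|sha1)\s*\("),
}


def scan_snippet(source: str):
    """Return (line_number, rule_name) pairs for every rule that matches."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


SNIPPET = "\n".join([
    "import hashlib",
    'password = "hunter2"',
    "digest = hashlib.md5(data).hexdigest()",
    'key = os.environ["API_KEY"]',
])
```

Calling `scan_snippet(SNIPPET)` flags the literal password on line 2 and the MD5 call on line 3, and leaves the environment lookup on line 4 alone.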

Proactive Risk Management in AI-Generated Code

Static security checks are not enough to manage AI-generated code at scale. Real-time SBOM (Software Bill of Materials) verification continuously monitors dependencies, ensuring that AI-generated software does not include outdated or vulnerable components. AI-generated code often pulls from open-source libraries, some of which may contain unpatched security flaws. Real-time verification tracks these dependencies, helping teams mitigate supply chain risks.
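The core of an SBOM check can be sketched in a few lines. This example assumes a CycloneDX-style JSON document with a top-level `components` array of `name` and `version` entries. The `ADVISORIES` mapping and the package names in it are invented for illustration. A real pipeline would pull advisories from a vulnerability feed and rerun the check whenever either side changes.

```python
import json

# Hypothetical advisory data: package name -> versions known to be vulnerable.
ADVISORIES = {
    "examplelib": {"1.0.0", "1.0.1"},
    "oldcrypto": {"0.9.2"},
}


def vulnerable_components(sbom_json: str, advisories=ADVISORIES):
    """Return (name, version) for each SBOM component listed in the advisories."""
    sbom = json.loads(sbom_json)
    hits = []
    for component in sbom.get("components", []):
        name = component.get("name")
        version = component.get("version")
        if version in advisories.get(name, set()):
            hits.append((name, version))
    return hits


SBOM_DOC = json.dumps({
    "bomFormat": "CycloneDX",
    "components": [
        {"name": "examplelib", "version": "1.0.1"},
        {"name": "examplelib-utils", "version": "2.3.0"},
        {"name": "oldcrypto", "version": "1.0.0"},
    ],
})
```

Here `vulnerable_components(SBOM_DOC)` reports only `examplelib` 1.0.1. The exact-version match keeps the sketch dependency-free. Range-based advisories would need a proper version parser.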

Secure coding frameworks provide a structured approach to reducing vulnerabilities in AI-assisted development. Guidance from OWASP and NIST, along with standards such as ISO 27001, sets out secure software practices and reduces the likelihood that AI-generated code introduces security gaps. Developers should enforce this guidance consistently and integrate it into DevSecOps workflows to maintain a high security standard across AI-assisted projects.

AI-based security tools offer deeper insights into the behavior of AI-generated code. Code Property Graphs (CPGs) and AI-powered threat detection analyze real-time data flow, control structures, and dependencies. This method goes beyond traditional static analysis by identifying exploit paths that might not be obvious in conventional scans. Integrating these advanced detection techniques into security workflows allows teams to dynamically detect and remediate vulnerabilities in AI-generated code, reducing the risk of deploying insecure applications.
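A full code property graph merges syntax, control flow, and data flow into one queryable structure. The sketch below is a much simpler stand-in built on Python's `ast` module, meant only to show why following data flow catches what line-by-line matching misses. It flags `.execute()` calls whose first argument is a dynamically built string, including one assigned to a variable earlier. The function names are hypothetical and the analysis is flow-insensitive, so it is a heuristic rather than a real taint tracker.

```python
import ast


def _is_dynamic_string(node: ast.AST) -> bool:
    # f-strings, "a" + b, and "a %s" % b all build text from runtime values.
    # Heuristic: any + or % expression counts, so non-string math can match too.
    if isinstance(node, ast.JoinedStr):
        return True
    return isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod))


def find_dynamic_sql(source: str):
    """Return line numbers of .execute() calls fed by dynamically built strings."""
    tree = ast.parse(source)
    # Pass 1: names assigned from a dynamically built string anywhere in the file.
    tainted = {
        target.id
        for node in ast.walk(tree)
        if isinstance(node, ast.Assign) and _is_dynamic_string(node.value)
        for target in node.targets
        if isinstance(target, ast.Name)
    }
    # Pass 2: .execute(...) calls whose first argument is dynamic or tainted.
    findings = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and node.args
        ):
            arg = node.args[0]
            if _is_dynamic_string(arg) or (
                isinstance(arg, ast.Name) and arg.id in tainted
            ):
                findings.append(node.lineno)
    return sorted(findings)


SAMPLE = "\n".join([
    "def lookup(cur, name):",
    "    query = f\"SELECT * FROM users WHERE name = '{name}'\"",
    "    cur.execute(query)",
    "    cur.execute(\"SELECT * FROM users WHERE name = ?\", (name,))",
])
```

For `SAMPLE`, `find_dynamic_sql` reports line 3, where the f-string reaches `execute` through the `query` variable, and ignores the parameterized call on line 4.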

Conclusion

AI-generated code enhances development speed but also introduces security challenges. Without proper oversight, vulnerabilities and biases can spread across applications, increasing long-term risks. Security teams can reduce these risks by incorporating human review, AI-driven security scanning, and context-aware verification. Staying ahead of these challenges requires proactive security measures. Book a demo with Qwiet today to see how advanced threat detection can help secure AI-generated code.

FAQs

What are the security risks of AI-generated code?

AI-generated code can introduce vulnerabilities like SQL injection, cross-site scripting (XSS), hardcoded credentials, and weak input validation. Since AI models generate code based on patterns from existing repositories, they may replicate insecure coding practices. Attackers can exploit these flaws to gain unauthorized access, inject malicious payloads, or escalate privileges within the application.

How can AI-generated code lead to security flaws?

AI models are trained on publicly available code, including both secure and insecure examples. If these models are not trained with security best practices, they may recommend outdated libraries, suggest weak authentication mechanisms, or fail to enforce input validation. Additionally, AI-generated code has not been tested under real-world conditions and may bypass security constraints that human developers typically implement.

How can developers secure AI-generated code?

Developers should manually review AI-generated code before integrating it into production, focusing on authentication mechanisms, data validation, and secure API interactions. Security auditing tools like static application security testing (SAST) and AI-specific vulnerability scanners can help detect risks. Adopting secure coding guidance from OWASP and NIST, along with standards such as ISO 27001, helps keep AI-assisted development aligned with industry security standards.

Can AI-generated code affect compliance?

Yes. AI-generated code can introduce flaws that put an organization out of compliance with regulations and standards such as GDPR, PCI-DSS, and ISO 27001, or that fall into OWASP Top 10 risk categories. Organizations may face regulatory penalties if AI-generated code exposes sensitive data, uses non-compliant cryptographic methods, or includes insecure dependencies. Continuous monitoring and real-time Software Bill of Materials (SBOM) verification help maintain compliance when using AI-assisted development.

About Qwiet AI

Qwiet AI empowers developers and AppSec teams to dramatically reduce risk by quickly finding and fixing the vulnerabilities most likely to reach their applications and ignoring reported vulnerabilities that pose little risk. Industry-leading accuracy allows developers to focus on security fixes that matter and improve code velocity while enabling AppSec engineers to shift security left.

A unified code security platform, Qwiet AI scans for attack context across custom code, APIs, OSS, containers, internal microservices, and first-party business logic by combining results of the company’s and Intelligent Software Composition Analysis (SCA). Using its unique graph database that combines code attributes and analyzes actual attack paths based on real application architecture, Qwiet AI then provides detailed guidance on risk remediation within existing development workflows and tooling. Teams that use Qwiet AI ship more secure code, faster. Backed by SYN Ventures, Bain Capital Ventures, Blackstone, Mayfield, Thomvest Ventures, and SineWave Ventures, Qwiet AI is based in Santa Clara, California. For information, visit: https://qwietdev.wpengine.com