Introduction

AI is playing an increasingly significant role in code security, with 83% of organizations already using AI to generate code. However, this rapid adoption comes with challenges: 66% of security teams report struggling to keep up with AI-driven development speeds, which increases security risk. Moreover, 63% of security leaders believe it is almost impossible to fully govern AI use in development environments. This guide explores how AI can improve security efforts and offers actionable steps for integrating AI into your strategy while managing the associated risks.

Who Should Use Code Security AI Assistance?

Identifying the right stakeholders for AI integration

Involving the right people from the beginning is important to make AI work in code security. Different teams play specific roles, and getting everyone on board early makes a huge difference.

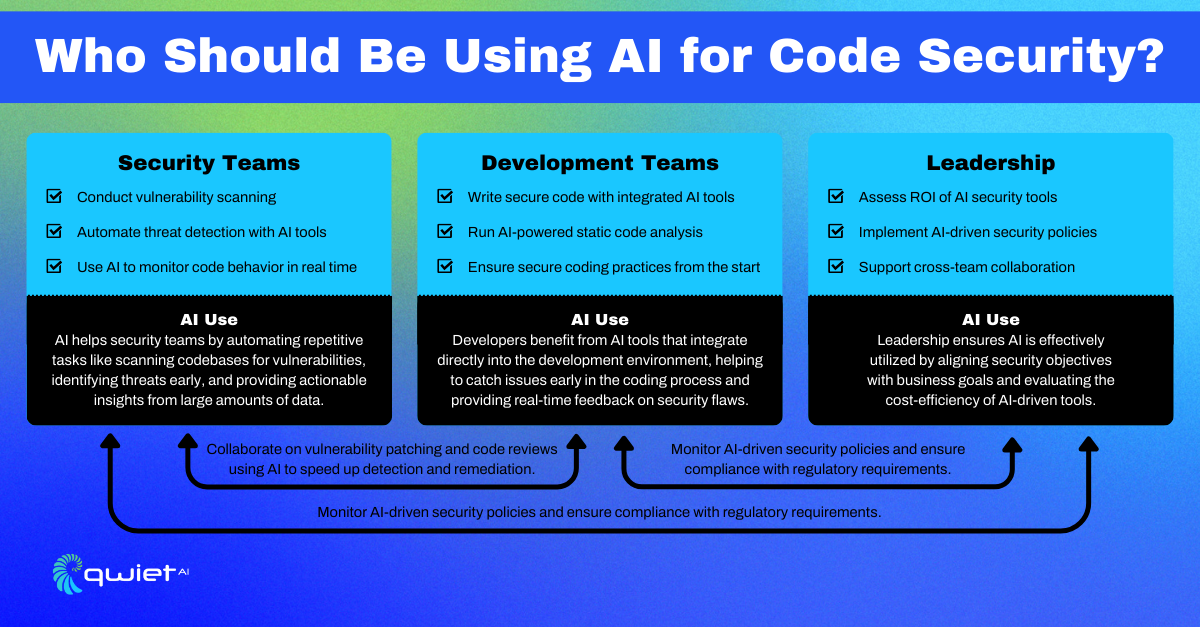

- Security teams: They can save time by automating tasks like vulnerability scanning, code audits, and policy enforcement. This lets them focus on higher-priority security challenges.

- Development teams: AI can be integrated into the Software Development Life Cycle (SDLC) to catch security flaws during coding, instead of waiting until later when fixes are more costly and time-consuming.

- C-suite and leadership: They’ll want to understand how AI can reduce security costs, speed up workflows, and scale security across large projects, helping the business achieve more secure code without breaking the budget.

Collaboration Across Teams

How AI Can Act as a Bridge Between Developers and Security Specialists

AI can be a game-changer in improving collaboration between developers and security teams. Developers often focus on shipping features quickly, while security teams focus on ensuring safe code. AI bridges this gap by automating repetitive security checks and providing real-time feedback during development.

For example, an AI tool can flag potential vulnerabilities as code is written, allowing developers to fix issues immediately without waiting for a later security review. This speeds up the process and reduces team friction, ensuring security is considered without slowing development.

Role of Cross-Functional Teams to Maximize AI Benefits

The key to getting the most out of AI in code security is building cross-functional teams that bring together developers and security professionals. These teams can work together to align how AI tools are set up and used. For example, they can agree on which vulnerabilities AI tools should prioritize or how AI insights should be integrated into the CI/CD pipelines.

Regular meetings between these groups allow for quick feedback and adjustments, ensuring AI is not just another tool but actively helps both teams achieve their goals. This collaboration also keeps AI tools tuned to the challenges the teams actually face, making them more effective in practice.

When Should You Use AI for Code Security?

Proactive vs. Reactive Uses

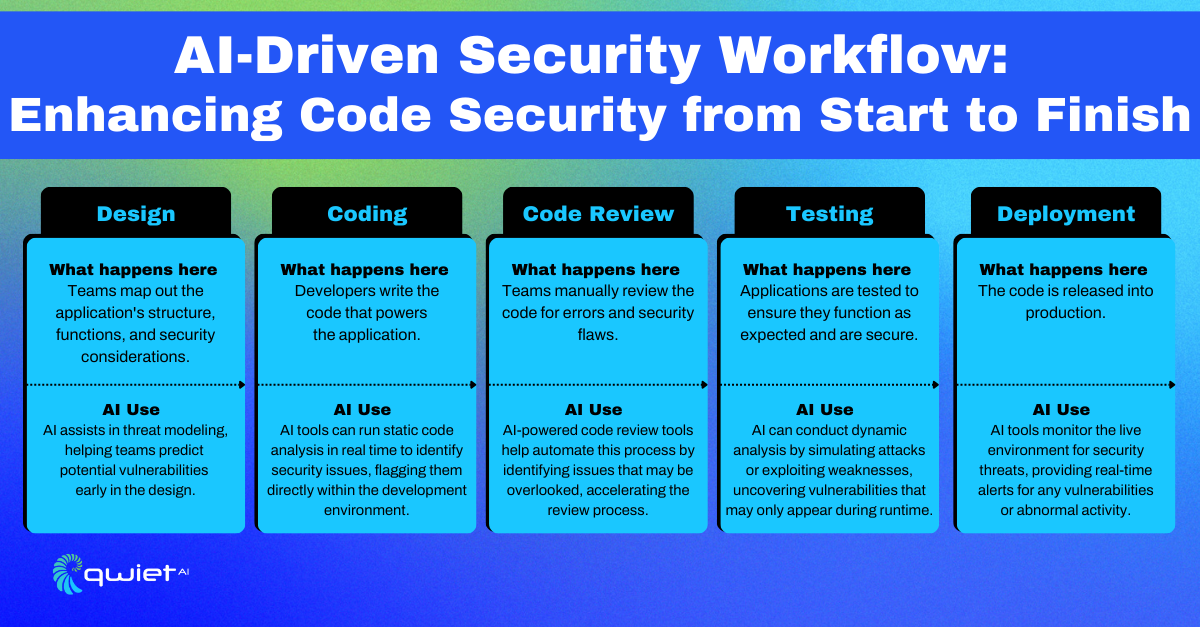

AI can be a powerful tool when integrated early in the development process, during the design and coding stages. This is often referred to as shift-left security, where security is considered right from the start rather than waiting until later stages. Using AI at these early stages can identify potential vulnerabilities as code is being written, making it easier and faster to address issues before they become bigger problems.

AI also plays a valuable role after an incident has occurred. In incident response and post-breach analysis, AI can quickly sift through logs and system data to identify the source of an attack, how it unfolded, and what vulnerabilities were exploited. This speeds up the investigation process and helps teams respond more effectively, minimizing the damage and helping to prevent similar issues in the future.
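As a rough illustration of the log triage described above, the sketch below counts failed logins per source address and reports the noisiest sources. It is a plain counting heuristic, not an AI model, and the log format (`FAILED_LOGIN ip=...`) and the function name `suspicious_sources` are assumptions made for this example.

```python
import re
from collections import Counter

# Hypothetical log format: "<timestamp> FAILED_LOGIN ip=<address> user=<name>"
FAILED_LOGIN = re.compile(r"FAILED_LOGIN ip=(\S+)")


def suspicious_sources(log_lines, threshold=3):
    """Return {ip: count} for sources with at least `threshold` failed logins."""
    counts = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}
```

An AI-assisted tool would typically go further and correlate such signals across many log sources, but the output has the same shape: a short list of sources worth investigating first.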

Where AI Can Help in AppSec

Code Reviews

AI can significantly speed up code reviews by automating much of the manual work. It can scan for known vulnerabilities and insecure coding practices, and it can even detect logic errors.

This accelerates the review process and increases accuracy, allowing teams to catch issues that might be missed in a traditional manual review. With AI assisting in code reviews, developers can spend less time reviewing line-by-line and focus more on refining and improving their code.
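To make the idea of an automated check concrete, here is a minimal rule-based sketch that walks a Python syntax tree and flags two well-known risky patterns: calls to `eval`/`exec` and calls made with `shell=True`. This is not how any particular AI product works; the rule set and the function name `find_risky_calls` are illustrative assumptions, and a real reviewer would cover far more patterns.

```python
import ast

# Illustrative rule set only; not exhaustive.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source):
    """Return sorted (line, message) pairs for risky calls in Python source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Plain names (eval) have .id; attribute calls (obj.method) have .attr.
        name = func.id if isinstance(func, ast.Name) else getattr(func, "attr", "")
        if name in RISKY_CALLS:
            findings.append((node.lineno, f"call to {name}()"))
        for kw in node.keywords:
            if (
                kw.arg == "shell"
                and isinstance(kw.value, ast.Constant)
                and kw.value.value is True
            ):
                findings.append((node.lineno, "shell=True in call"))
    return sorted(findings)
```

Checks like this are cheap to run on every change, which is what lets reviewers spend their attention on design and logic instead of pattern matching.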

Threat Modeling

Threat modeling is critical in understanding where your code might be vulnerable. AI enhances this process by analyzing large datasets, previous attack patterns, and system architecture to predict potential threats.

It can help identify weaknesses that might not be immediately obvious to human analysts, allowing teams to prioritize security measures more effectively and anticipate risks before they are exploited.

Penetration Testing

Penetration testing is often labor-intensive and requires specialized expertise. AI can assist by automating parts of the testing process, running simulations, and providing insights based on real-world attack data.

While AI won’t replace human-driven pen tests, it enhances them by offering additional insights and helping to identify weak points faster. This combination allows for more thorough testing and ensures potential vulnerabilities are caught before being exploited.

Real-Time Monitoring

AI excels in real-time monitoring by continuously scanning systems for unusual behavior, anomalies, or signs that a previously unknown (zero-day) vulnerability is being exploited. This is particularly valuable in detecting threats that traditional security measures might miss.

AI tools can quickly identify and alert teams to suspicious activity, allowing them to respond before a full-blown attack occurs. This kind of proactive monitoring strengthens security without requiring constant manual oversight.
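One simple way to picture anomaly detection is a z-score check: compare a new measurement against a baseline of recent values and flag it when it sits too many standard deviations from the mean. The sketch below assumes a numeric metric such as requests per minute; the function name `is_anomalous` and the default threshold of 3.0 are assumptions for illustration, and production systems generally use richer models.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Return True if `value` is more than `threshold` standard deviations
    away from the mean of `history` (the recent baseline)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu  # flat baseline: any change stands out
    return abs(value - mu) / sigma > threshold
```

For example, with a baseline of `[100, 102, 98, 101, 99, 100, 103, 97]` requests per minute, a reading of 500 is flagged while a reading of 103 is not.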

Post-Release Updates

Even after code has been deployed, AI plays a crucial role in ongoing security. AI-powered tools can continuously scan production systems for vulnerabilities that might emerge post-release. This helps teams stay ahead of new threats and quickly patch any security gaps.

By integrating AI into post-release scanning, organizations can maintain a higher level of security throughout the software lifecycle, ensuring that updates are safe and reliable.

How Should You Govern AI Use in Code Security?

Defining Governance Frameworks for AI

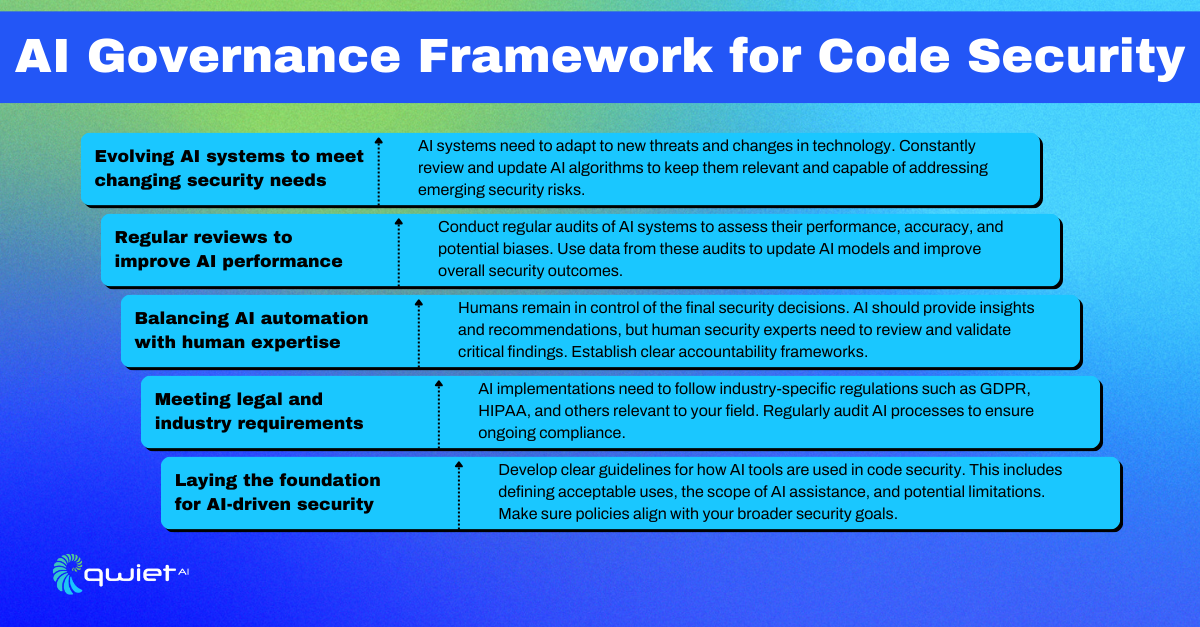

When introducing AI into code security, clear policies and standards must be established. These guidelines should define how AI will be deployed and managed in a way that aligns with your organization’s ethical principles and security needs.

This means setting clear rules on data usage and decision-making processes and maintaining fairness in AI-generated outcomes. Having these policies in place helps set expectations and keeps AI use consistent and controlled.

AI-driven security tools need to work within the bounds of legal and industry-specific regulations like GDPR, HIPAA, or other relevant frameworks. This requires aligning your AI security practices with these regulations to protect sensitive data and avoid legal risks.

Regularly reviewing AI tools to confirm they meet these requirements is essential, as non-compliance can lead to serious consequences for the organization, both financially and reputationally.

To maintain trust in AI systems, it’s important to have audit trails and accountability mechanisms in place. This involves tracking AI decisions and documenting how AI models make recommendations or flag vulnerabilities.

Regular audits help you stay aware of what the AI is doing and provide transparency in case something goes wrong. It also helps validate that the AI continues performing effectively and fairly.
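An audit trail can be as simple as one structured record per AI decision, appended to a log. The sketch below shows one possible record shape serialized as a JSON line; every field name here (`tool`, `model_version`, `action`, and so on) is a hypothetical choice for illustration, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditRecord:
    tool: str                 # which AI tool produced the decision
    model_version: str        # lets auditors tie a decision to a model build
    file: str                 # file the finding refers to
    finding: str              # what was flagged or recommended
    confidence: float         # the tool's reported confidence, 0.0 to 1.0
    action: str               # e.g. "flagged", "auto_fixed", "escalated"
    reviewed_by: Optional[str] = None  # human reviewer, if any
    timestamp: str = ""       # filled in at write time when empty


def to_audit_line(record):
    """Serialize a record as one JSON line, stamping the time if missing."""
    data = asdict(record)
    if not data["timestamp"]:
        data["timestamp"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(data, sort_keys=True)
```

Recording the model version and the human reviewer alongside each decision is what makes later questions such as "why was this flagged, and who approved the fix?" answerable.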

AI Decision-Making Limits and Human Oversight

One of the key governance decisions is understanding when AI can act independently and when human intervention is necessary. AI can handle tasks like detecting common vulnerabilities or automating routine code reviews efficiently on its own.

However, more complex decisions—like evaluating high-risk security incidents or deciding on major code changes—still require human oversight. Striking the right balance helps maintain control while leveraging AI’s speed and precision.
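The split between autonomous handling and human review can be written down as an explicit routing rule. The sketch below is one possible policy, assuming each finding carries a severity label and a confidence score; the labels, the 0.9 cutoff, and the function name `route_finding` are illustrative assumptions that each organization would set for itself.

```python
# Severities that always require a human decision (assumed policy).
ALWAYS_HUMAN = {"high", "critical"}


def route_finding(severity, confidence, auto_confidence=0.9):
    """Decide whether a finding is handled automatically or sent to a person.

    High-risk findings always go to a human; lower-risk findings are handled
    automatically only when the tool is confident enough.
    """
    if severity.lower() in ALWAYS_HUMAN:
        return "human_review"
    if confidence >= auto_confidence:
        return "auto_handle"
    return "human_review"
```

Writing the policy as code keeps it reviewable and auditable, and changing the boundary becomes a deliberate, visible decision.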

While powerful, AI is imperfect and can sometimes introduce biases or errors into the security process. Over-reliance on AI without regular human review can result in overlooked vulnerabilities or skewed recommendations. Regularly assessing AI outputs and combining them with human expertise helps catch these potential pitfalls and minimizes the risk of AI missteps. This approach ensures AI remains a helpful tool, not a crutch.

Training and Upskilling Teams

To get the most out of AI in code security, it’s important that both security and development teams fully understand how to use AI tools effectively. This means providing them with training on how the AI works, what data it uses, and how to interpret its outputs. Practical, hands-on learning is key here, allowing teams to get comfortable with integrating AI into their daily workflows.

AI tools are constantly evolving, and so should your teams. Regular training and upskilling are necessary as AI capabilities advance. Keeping teams up-to-date on the latest AI features and improvements helps them use these tools effectively. This ongoing learning process also allows for better collaboration between teams as they learn to trust and refine the AI systems they work with, keeping security efforts sharp and adaptable.

Implementing AI in Code Security

Step 1: Assessment and Strategy

Before diving into AI adoption for code security, take a step back and assess your organization’s current readiness. This includes evaluating your existing security processes, the team’s familiarity with AI, and the maturity of your development and security pipelines.

It’s important to understand where AI can fill gaps and where it might meet resistance. From there, AI use should be aligned with business goals and security priorities. AI should complement your existing strategies and make your security efforts more efficient and scalable.

Step 2: Choosing the Right AI Tools

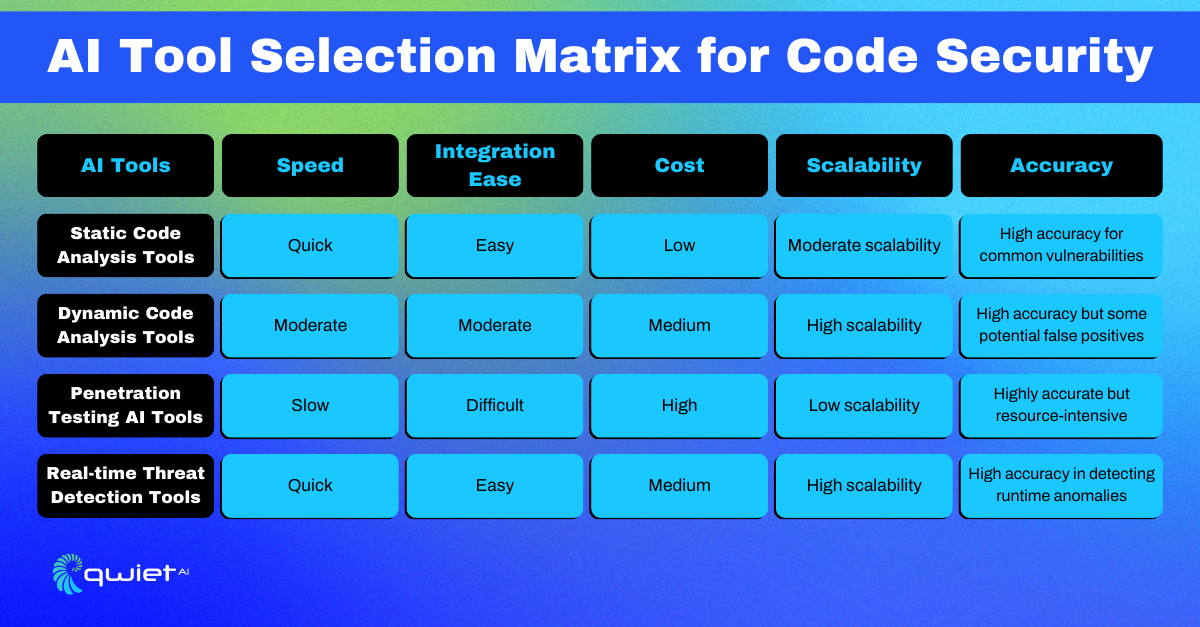

Several factors need to be considered when selecting AI tools. Based on budget, flexibility, and available support, decide whether open-source or commercial tools better suit your organization’s needs.

Scalability is another key aspect: your AI tools should be able to grow with your business. It's also crucial that these tools integrate seamlessly with your current systems, such as CI/CD pipelines, DevOps, or SecOps platforms. Popular AI tools for code security often include static analysis tools, which scan code before it runs, and dynamic analysis tools, which test applications while they run.

Step 3: Integration into Development Pipelines

Embedding AI-driven security checks into your CI/CD pipeline is a practical way to streamline security without adding friction to the development process. This can be done by incorporating AI tools directly into the build and test phases, where they can automatically scan for vulnerabilities and potential issues in real time.

The goal is to make security checks part of the natural development flow so that neither developers nor security teams are slowed down. To achieve this, focus on integrating AI with your existing DevOps and SecOps workflows, allowing seamless communication between development and security teams.
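In practice, a pipeline integration often comes down to a gate: the scanner produces findings, and a small script decides whether the build may proceed. The sketch below assumes the findings arrive as a list of dictionaries with `id` and `severity` keys; that format, the blocking severities, and the function name `gate` are assumptions for illustration, not the output format of any specific tool.

```python
# Severities that should stop the build (assumed policy).
BLOCKING = {"high", "critical"}


def gate(findings):
    """Return a process exit code: 1 if any blocking finding exists, else 0."""
    blocking = [
        f for f in findings if f.get("severity", "").lower() in BLOCKING
    ]
    for f in blocking:
        print(f"BLOCKING: {f.get('id', '?')} ({f['severity']})")
    return 1 if blocking else 0
```

A CI step could parse the scanner's report, call `gate()` on it, and pass the result to `sys.exit()`, so that blocking findings fail the build while lower-severity ones are reported without stopping it.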

Step 4: Monitoring and Continuous Improvement

Once AI is in place, it’s important to measure its performance regularly. Set clear KPIs to track the number of false positives, detection speed, and the accuracy of remediation recommendations.
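These KPIs can be computed from triage counts. Assuming each alert is eventually labeled as a true positive (a real issue that was flagged), a false positive (a non-issue that was flagged), or a false negative (a real issue that was missed), the sketch below derives precision, recall, and the share of alerts that were false. The function name `detection_kpis` is hypothetical.

```python
def detection_kpis(tp, fp, fn):
    """Compute basic detection KPIs from triage counts.

    tp: real issues the tool flagged
    fp: non-issues the tool flagged
    fn: real issues the tool missed
    """
    flagged = tp + fp
    real = tp + fn
    return {
        # Of everything flagged, how much was real?
        "precision": tp / flagged if flagged else 0.0,
        # Of everything real, how much was caught?
        "recall": tp / real if real else 0.0,
        # Share of alerts that wasted reviewer time.
        "false_alert_share": fp / flagged if flagged else 0.0,
    }
```

For instance, 40 true positives, 10 false positives, and 10 false negatives give a precision of 0.8, a recall of 0.8, and a false alert share of 0.2.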

Audit AI models regularly to ensure they stay relevant as the codebase and threats evolve. Gathering feedback from both development and security teams helps you refine and update AI tools to better meet your needs. Continuous improvement keeps the AI-driven security process aligned with your goals and evolving risks.

AI Challenges and Pitfalls in Code Security

Managing False Positives and False Negatives

One of the main challenges with AI in code security is finding the right balance between precision and productivity. If the AI generates too many false positives, developers will waste time reviewing non-issues, leading to frustration and slowing down their workflow.

On the other hand, false negatives—missed vulnerabilities—can be even more dangerous as they expose your system. The key is to fine-tune AI models to minimize both so security teams can trust the alerts and act efficiently without unnecessary distractions.
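The trade-off becomes visible when you sweep the alert threshold over a labeled sample. The sketch below assumes each past finding has a model score between 0 and 1 and a label saying whether it turned out to be a real issue; the function name `count_errors` and the sample data in the note that follows are made up for illustration.

```python
def count_errors(scored, threshold):
    """Count false positives and false negatives at a given alert threshold.

    scored: iterable of (score, is_real) pairs from past, labeled findings.
    A finding raises an alert when score >= threshold.
    """
    fp = sum(1 for score, is_real in scored if score >= threshold and not is_real)
    fn = sum(1 for score, is_real in scored if score < threshold and is_real)
    return fp, fn
```

With the sample `[(0.95, True), (0.80, True), (0.70, False), (0.60, True), (0.40, False), (0.20, False)]`, a threshold of 0.5 yields one false positive and no false negatives, while 0.75 yields no false positives and one false negative. Raising the threshold trades noise for missed issues, so the right setting depends on how costly each kind of error is for your team.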

AI Bias and Ethical Considerations

AI algorithms can sometimes introduce biases that may overlook certain vulnerabilities or, conversely, flag others too aggressively. This could happen due to how the models are trained or the data they rely on.

It’s important to regularly evaluate the AI’s performance to ensure it’s not favoring certain patterns over others. Regular testing, diverse training data, and periodic updates help mitigate these biases, ensuring a fair and balanced approach to identifying risks across all areas of the codebase.

Over-Reliance on AI

AI is a powerful tool, but it should not be seen as a replacement for human expertise. While AI can handle routine tasks quickly and efficiently, complex security decisions still require the judgment of experienced security professionals.

Human oversight is crucial in areas where AI might struggle with nuance, such as assessing the full context of a potential vulnerability. AI should complement, not replace, the skills and insights that security teams bring.

Ensuring Human-AI Collaboration

For AI to be truly effective, collaboration between humans and AI needs to be smooth and well-coordinated. Developers and security teams should view AI as a helpful assistant that streamlines workflows but still requires their input and decision-making.

Regular feedback loops between humans and AI tools are important, as this helps the AI system improve while allowing the teams to adjust based on real-world use. This partnership maximizes the benefits of AI while keeping the human touch where it’s needed most.

Conclusion

AI reshapes code security by automating tasks such as code reviews, real-time monitoring, and vulnerability scanning. It helps teams work faster and smarter, but balancing AI's capabilities with human oversight is important. By building strong governance and aligning teams, you can make the most of AI while staying flexible as threats evolve. If you're ready to explore how AI can fit into your security strategy, book a call with Qwiet to get started.

About Qwiet AI

Qwiet AI empowers developers and AppSec teams to dramatically reduce risk by quickly finding and fixing the vulnerabilities most likely to reach their applications and ignoring reported vulnerabilities that pose little risk. Industry-leading accuracy allows developers to focus on security fixes that matter and improve code velocity while enabling AppSec engineers to shift security left.

A unified code security platform, Qwiet AI scans for attack context across custom code, APIs, OSS, containers, internal microservices, and first-party business logic by combining results of the company’s and Intelligent Software Composition Analysis (SCA). Using its unique graph database that combines code attributes and analyzes actual attack paths based on real application architecture, Qwiet AI then provides detailed guidance on risk remediation within existing development workflows and tooling. Teams that use Qwiet AI ship more secure code, faster. Backed by SYN Ventures, Bain Capital Ventures, Blackstone, Mayfield, Thomvest Ventures, and SineWave Ventures, Qwiet AI is based in Santa Clara, California. For information, visit: https://qwietdev.wpengine.com