Introduction

As AI and automation reshape industries, application security (AppSec) is rapidly evolving from tools that support analysts to systems that can operate independently. This post walks you through the stages of autonomous AppSec, showing how AI-driven systems change the way security is managed. You’ll discover how the technology works at each level of automation and what to expect as we move toward fully autonomous security solutions.

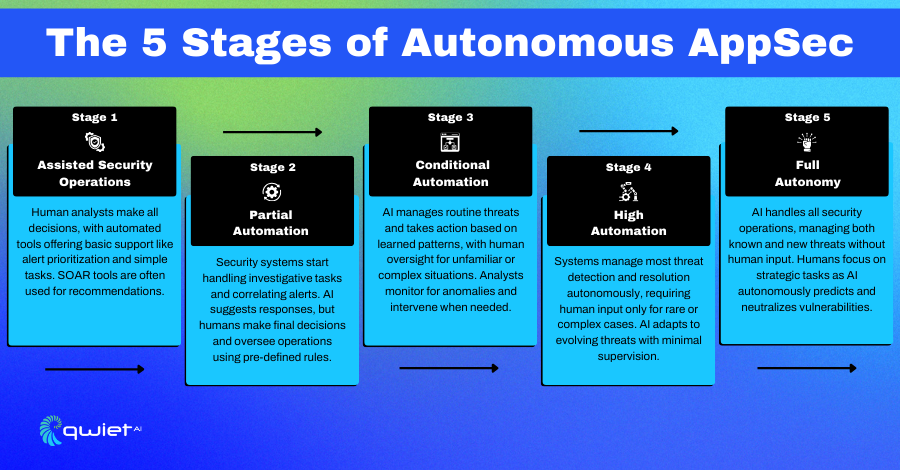

Stage 1: Manual and Assisted Security Operations

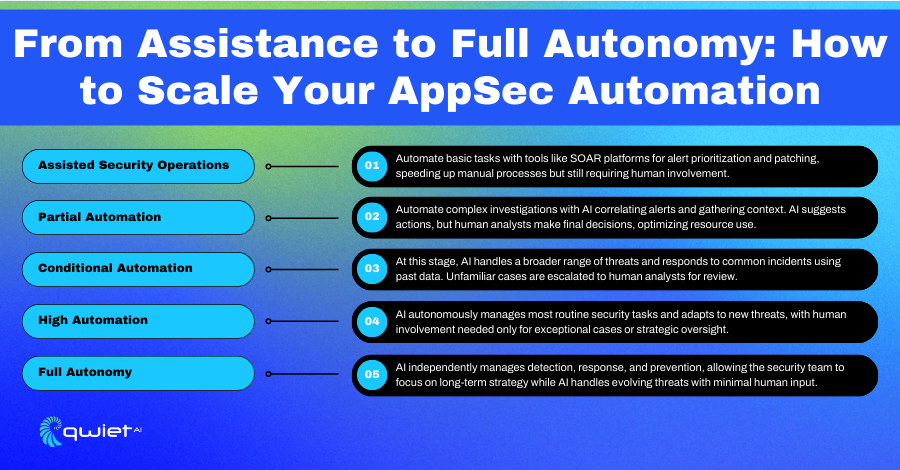

In the early days of security automation, tools were built to make analysts’ work easier by handling some of the repetitive tasks. These tools sort alerts, organize threat data, and simplify daily routines. They’re useful but not independent: the analyst is still in charge, using the tools to speed things up and make better-informed decisions.

One example is SOAR (Security Orchestration, Automation, and Response) platforms. These systems can automatically gather threat intelligence, manage patches, and enrich data without constant input. They reduce busywork, giving analysts more time for complex tasks that need human judgment. Helpful as they are, however, they can’t operate without human oversight.

At this stage, the technology is fairly basic: mostly scripts, automation tools, and AI-supported threat detection. These tools can flag problems and suggest solutions, but someone still needs to step in and confirm what to do next. Human judgment is critical here, especially when new or unexpected threats appear.
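To make the assisted model concrete, here is a minimal Python sketch of Stage 1 triage. It assumes a hypothetical `Alert` record and a small in-memory blocklist standing in for a threat-intelligence feed. The script sorts alerts by severity and attaches a suggested action, but it executes nothing: every item stays unapproved until an analyst signs off.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    # Hypothetical alert shape; real SOAR platforms define their own schemas.
    source: str
    severity: int  # assumed scale: 1 (low) to 5 (critical)
    indicator: str

# Hypothetical local blocklist standing in for a threat-intelligence feed.
KNOWN_BAD = {"198.51.100.7", "evil.example.com"}

def triage(alerts):
    """Sort alerts by severity and attach a suggested action.
    Nothing is executed: every suggestion waits for an analyst."""
    queue = []
    for alert in sorted(alerts, key=lambda a: a.severity, reverse=True):
        if alert.indicator in KNOWN_BAD:
            suggestion = "block indicator"
        elif alert.severity >= 4:
            suggestion = "investigate"
        else:
            suggestion = "review when time allows"
        queue.append({"alert": alert, "suggestion": suggestion, "approved": False})
    return queue

alerts = [
    Alert("ids", 2, "203.0.113.5"),
    Alert("email", 3, "evil.example.com"),
    Alert("edr", 5, "host-42"),
]
for item in triage(alerts):
    print(item["alert"].source, "->", item["suggestion"])
```

The `approved` flag is the point of the design: the tool prepares the work, and the analyst makes the call.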

Take something as simple as autofill features. These tools can quickly fill out forms or suggest the next step in a security process, saving time. Analysts still make the final call; the tools aren’t running the show. It’s like having a helpful assistant, not a replacement. The tools take care of the routine, freeing up time and headspace for the important decisions only a person can make.

Stage 2: Partial Automation

At this stage, AI takes on more responsibility by automating tasks such as aggregating alerts from different sources and spotting possible threats. This helps considerably with the day-to-day workload, but human oversight remains important for key decisions. The AI can handle much of the routine work, while analysts stay in charge when things get tricky.

The AI works by suggesting responses based on predefined workflows. It analyzes the situation, spots patterns, and recommends actions. This speeds things up, but the final call still belongs to human analysts, who review the AI’s suggestions and decide what to do based on the specific context.
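A minimal sketch of this suggest-then-approve loop follows. It assumes hypothetical playbooks stored in a plain dictionary and an `approve` callback standing in for the analyst’s review (in practice, a ticket or chat prompt). No step runs unless the callback returns `True`.

```python
# Hypothetical predefined workflows (playbooks), keyed by alert category.
PLAYBOOKS = {
    "malware": ["isolate host", "collect memory image", "open ticket"],
    "brute_force": ["lock account", "reset credentials", "notify user"],
}

def recommend(category):
    """Return a copy of the predefined steps for a category (empty if none)."""
    return list(PLAYBOOKS.get(category, []))

def run_with_approval(category, approve):
    """Run recommended steps only if the analyst callback approves them."""
    steps = recommend(category)
    if not steps or not approve(category, steps):
        return []  # no playbook, or the analyst declined: nothing runs
    executed = []
    for step in steps:
        # Placeholder: a real system would call an orchestration API here.
        executed.append(step)
    return executed

# Usage: the analyst approves the malware playbook but rejects the other one.
print(run_with_approval("malware", lambda category, steps: True))
print(run_with_approval("brute_force", lambda category, steps: False))
```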

The main technologies at this stage include AI-driven threat correlation, which connects separate pieces of data to identify risks. AI also gathers relevant context to help analysts understand the full picture. Predefined response plans standardize how certain threats are handled, making the process faster and more efficient.
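Threat correlation can be illustrated with a simple rule: treat a host as a likely incident when at least two different sources raise alerts about it within a short time window. The sketch below assumes events arrive as hypothetical `(timestamp, source, host)` tuples and uses an illustrative 10-minute window; production correlation engines weigh many more signals, often with learned models rather than a fixed rule.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def correlate(events, window=timedelta(minutes=10), min_sources=2):
    """Group events by host and flag hosts where at least `min_sources`
    distinct sources fired within `window` of one another."""
    by_host = defaultdict(list)
    for ts, source, host in events:
        by_host[host].append((ts, source))
    incidents = {}
    for host, items in by_host.items():
        items.sort()  # chronological order
        for i, (start, _) in enumerate(items):
            # Distinct sources seen from this event up to `window` later.
            sources = {src for ts, src in items[i:] if ts - start <= window}
            if len(sources) >= min_sources:
                incidents[host] = sorted(sources)
                break
    return incidents

t0 = datetime(2024, 1, 1, 12, 0)
events = [
    (t0, "waf", "web-01"),
    (t0 + timedelta(minutes=3), "edr", "web-01"),
    (t0 + timedelta(minutes=30), "ids", "db-01"),
]
print(correlate(events))  # {'web-01': ['edr', 'waf']}
```

The single `ids` alert on `db-01` stays uncorrelated, which is what lets analysts focus on the host where independent signals agree.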

For example, when a threat is detected, the AI might suggest steps to respond based on what it’s learned from previous incidents. This can save time, but the human team still steps in when the situation is more complex or needs a different approach. It’s a team effort between the AI and the analysts, helping them manage more threats without sacrificing accuracy or control.

Stage 3: Conditional Automation

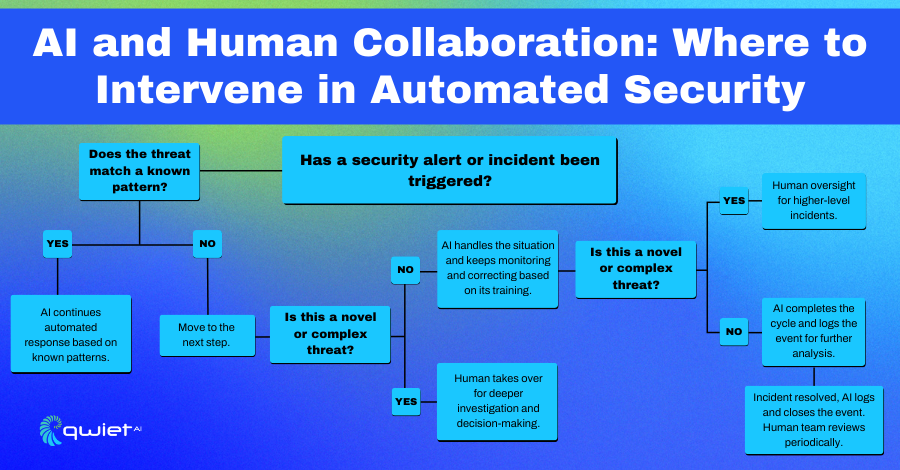

In this phase, AI takes on even more responsibility by handling the full threat detection and response cycle, but only under specific conditions. The system is designed to manage routine threats independently, from identifying them to taking the necessary actions. However, when a new or unrecognized threat falls outside its programmed parameters, it will pass control back to human analysts for deeper investigation.

The key technologies driving this level of automation are deep learning, large language models (LLMs), and generative AI. These tools allow the system to continuously learn and improve from vast amounts of data, helping it recognize and manage familiar threats more efficiently without human intervention. For routine tasks like phishing detection, these systems can run independently, so analysts don’t have to deal with the same repetitive issues over and over.

In practice, the AI might handle common phishing attacks automatically, blocking malicious emails and taking preventive actions. However, it escalates the issue to a human analyst when it encounters something more complex or unknown, such as a new type of malware or a sophisticated cyberattack. This way, the AI handles the predictable, freeing humans to focus on the unexpected or more challenging threats.
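The hand-off condition can be sketched as a guard around each automated action. This example assumes a hypothetical classifier that returns a category and a confidence score. The system acts alone only for categories inside its approved envelope and above a confidence threshold (both values here are illustrative), and it escalates everything else.

```python
from dataclasses import dataclass

@dataclass
class Verdict:
    category: str      # label from a hypothetical classifier
    confidence: float  # assumed range 0.0 to 1.0

# Illustrative operating envelope: categories the system may act on alone.
AUTOMATED_CATEGORIES = {"phishing"}
CONFIDENCE_THRESHOLD = 0.9  # hypothetical cut-off

def handle(message_id, verdict):
    """Act autonomously only inside the defined conditions; otherwise hand off."""
    if verdict.category in AUTOMATED_CATEGORIES and verdict.confidence >= CONFIDENCE_THRESHOLD:
        # Placeholder for a real mail-gateway quarantine call.
        return f"quarantined {message_id}"
    return f"escalated {message_id} to analyst"

print(handle("msg-1", Verdict("phishing", 0.97)))       # quarantined msg-1
print(handle("msg-2", Verdict("phishing", 0.62)))       # escalated msg-2 to analyst
print(handle("msg-3", Verdict("novel_malware", 0.99)))  # escalated msg-3 to analyst
```

Note that high confidence alone is not enough: an unfamiliar category is escalated regardless of score, mirroring the idea that anything outside the programmed parameters goes back to a human.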

Stage 4: High Automation

In this stage, AI handles almost all security tasks independently, needing human help only in rare, unexpected situations. The system handles everything from detecting threats to resolving incidents, so security teams can focus on unusual or complex problems.

The technology behind this includes advanced AI platforms that work in real time. These systems constantly monitor for threats, fix vulnerabilities, and respond to issues with little human input. They take on tasks like threat detection, patching, and incident resolution, making the whole process smoother and faster.

With AI running nearly the entire security process, routine tasks like scanning for vulnerabilities, applying patches, and managing threats happen automatically. Security teams step in only when something unusual comes up.
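One way to picture this loop is scan, patch, verify, and escalate only on failure. In the sketch below, `apply_patch` and `verify` are hypothetical callbacks standing in for real package-manager and scanner integrations, and the in-memory inventory simulates one patch that fails to apply.

```python
def auto_remediate(findings, apply_patch, verify):
    """Patch and verify each vulnerable component automatically.
    `findings` maps component -> fixed version (None if no fix exists).
    Only failures are returned for human follow-up."""
    escalations = []
    for component, fixed_version in findings.items():
        if fixed_version is None:
            escalations.append((component, "no fix available"))
            continue
        apply_patch(component, fixed_version)
        if not verify(component, fixed_version):
            escalations.append((component, "verification failed"))
    return escalations

# Hypothetical inventory and scan results.
installed = {"libfoo": "1.2.0", "libbar": "3.1.4", "libbaz": "0.9.0"}
findings = {"libfoo": "1.2.1", "libbar": None, "libbaz": "0.9.5"}

def apply_patch(component, version):
    if component != "libbaz":  # simulate a patch that silently fails to apply
        installed[component] = version

def verify(component, version):
    return installed[component] == version

print(auto_remediate(findings, apply_patch, verify))
# [('libbar', 'no fix available'), ('libbaz', 'verification failed')]
```

The verification step matters: the loop trusts the result of a re-check, not the fact that a patch command was issued.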

Stage 5: Full Autonomy in Security Operations

AppSec systems are fully autonomous at this stage, handling security across applications without human input. These systems can automatically detect, respond to, and resolve security vulnerabilities and attacks across all applications and environments. Human involvement is no longer needed for routine operations, as the AI manages everything independently.

The technology behind this includes advanced AI systems that continuously learn from new security data and adapt to emerging threats. These AI-driven AppSec platforms don’t rely solely on predefined rules; they evolve by analyzing patterns in real time, allowing them to counter novel vulnerabilities and attacks without updates from human analysts. The system gets smarter over time, refining its ability to protect applications from new threats.

In the future, fully autonomous AppSec systems will be able to predict security risks before they occur, preemptively identifying and neutralizing vulnerabilities in code or application configurations. They would manage the entire security lifecycle, from vulnerability detection to patching and threat mitigation, freeing security teams to focus on high-level strategy rather than reacting to ongoing threats.

Challenges and Ethical Considerations

One of the major concerns with relying heavily on AI in security is the risk of undetected biases within the systems. AI models learn from data, and if that data contains biases, it can affect how the AI responds to certain threats.

There’s also the issue of adversarial attacks, where malicious actors manipulate AI models to exploit weaknesses. While AI can handle many tasks, relying on it too heavily without regular human oversight could lead to missed threats or unintended security gaps.

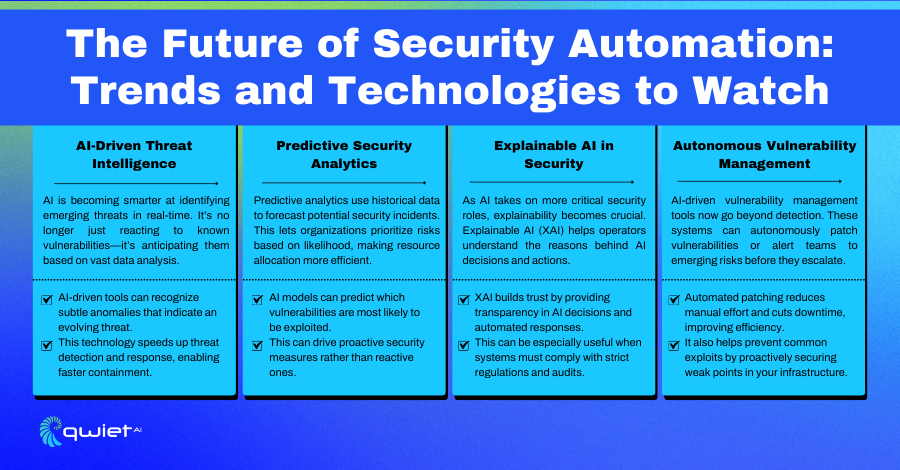

Transparency and explainability are crucial when using autonomous systems. Security teams need to understand how AI makes decisions, especially regarding threat detection and response. Trust in these systems grows when analysts see what the AI is doing and why it makes certain decisions. This kind of visibility is vital for maintaining confidence in automated security systems and for being able to intervene when necessary.
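One lightweight way to support explainability is to have every automated decision carry the evidence behind it, so analysts can audit or override it. The sketch assumes a hypothetical rule-based `decide` function and hypothetical event fields (`ip`, `failed_logins`); a model-driven system would attach feature attributions or similar evidence instead of rule text.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class Decision:
    action: str
    target: str
    reasons: list = field(default_factory=list)  # human-readable evidence

BLOCKLIST = {"198.51.100.7"}  # hypothetical threat-intel entries
MAX_FAILED_LOGINS = 20        # hypothetical threshold

def decide(event):
    """Hypothetical rule-based decision that records why it acted."""
    decision = Decision(action="allow", target=event["ip"])
    if event["failed_logins"] > MAX_FAILED_LOGINS:
        decision.reasons.append(
            f"{event['failed_logins']} failed logins (threshold {MAX_FAILED_LOGINS})"
        )
    if event["ip"] in BLOCKLIST:
        decision.reasons.append("IP found on threat-intel blocklist")
    if decision.reasons:
        decision.action = "block"
    return decision

record = decide({"ip": "198.51.100.7", "failed_logins": 35})
# Serialize the decision with its reasons, e.g. for an audit log or dashboard.
print(json.dumps(asdict(record), indent=2))
```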

As AI advances in security, we must consider regulatory frameworks similar to those used in other sectors, such as autonomous driving. Such regulations would guide how AI is implemented in security, focusing on safety, accountability, and ethical use.

Standards and compliance frameworks will likely emerge regarding the proper testing of AI models and the requirements for monitoring and adjusting them to prevent misuse or unintended outcomes.

The Road Ahead for Autonomous AppSec

The future of AppSec is moving toward fully autonomous systems that go beyond assisting human analysts. Right now, AI tools help manage tasks and reduce the manual workload.

Still, as technology evolves, we can expect a shift where AI handles the entire security process from detection to response without human intervention. This would free up security teams to focus on strategy and more complex, critical issues while AI takes care of routine and even some advanced operations.

One of the most exciting developments on the horizon is AI that can learn and adapt in real time, making decisions based on new data without constant human updates. These systems would be able to stay ahead of threats, evolving as new vulnerabilities emerge.

At the same time, building explainability into AI systems will be key to maintaining trust. As AI handles more security tasks, these systems will need to show clear reasoning behind their decisions so teams can better understand and trust the automation they rely on.

Conclusion

We’ve explored the journey from manual to fully autonomous AppSec, looking at how AI can take over tasks like threat detection and response with minimal human input. As security becomes more automated, transparency and trust in AI systems will be essential. If you’re ready to explore how Qwiet AI can help you embrace AI in AppSec, book a call with us today.
