Key Takeaways

- Agentic AI brings context and reasoning to security, helping tools detect vulnerabilities and determine whether they’re exploitable based on code behavior.

- Contextual reachability filters out noise by mapping real data and control flow, giving developers clear and actionable findings.

- Qwiet AI’s CPG and agentic model support precision and automation, aligning AppSec with the realities of fast-moving development environments.

Introduction

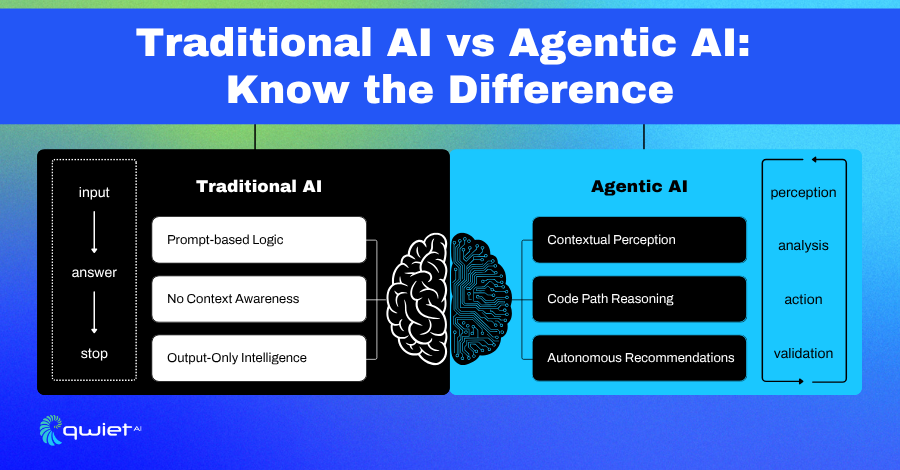

AI in security tooling has moved far beyond simple detection. While traditional methods like static analysis, signature-based scanning, and prompt-driven LLMs have helped surface known risks, they often miss context, over-report noise, and fail to connect findings to actual application behavior. Agentic AI changes that. It brings reasoning, path analysis, and autonomy to security, mapping out how vulnerabilities could realistically be exploited in your environment. Contextual reachability is the part of that model where findings are linked to actual data flow and execution paths. In this article, you will learn how this model reshapes detection, reduces noise, and gives developers actionable, prioritized results.

What Is Contextual Reachability?

Focused Detection with Real-World Relevance

Contextual reachability involves determining whether a vulnerability can be triggered based on how your code is structured and executed. It goes beyond just detecting insecure functions or patterns. It maps how data flows, which branches are reachable, and whether untrusted input can reach a sensitive operation under real-world conditions.
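To make the idea concrete, here is a minimal Python sketch. It assumes the application has already been reduced to a small directed graph of data-flow edges. The node names and the `is_reachable` helper are hypothetical and are not part of any Qwiet AI API. Under that assumption, reachability becomes a graph search from an untrusted source to a sensitive sink:

```python
from collections import deque

# Hypothetical data-flow edges: each key passes data to the nodes in its list.
FLOW = {
    "http_param": ["parse_request"],
    "parse_request": ["build_query"],
    "build_query": ["db.execute"],
    "legacy_import": ["os.system"],  # never fed by user input
}

def is_reachable(graph, source, sink):
    """Breadth-first search: can data from `source` arrive at `sink`?"""
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        if node == sink:
            return True
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False

print(is_reachable(FLOW, "http_param", "db.execute"))  # True
print(is_reachable(FLOW, "http_param", "os.system"))   # False
```

A pattern-based scanner would likely flag both `db.execute` and `os.system`. In this toy graph, only the first can receive user input, so only the first would be worth surfacing.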

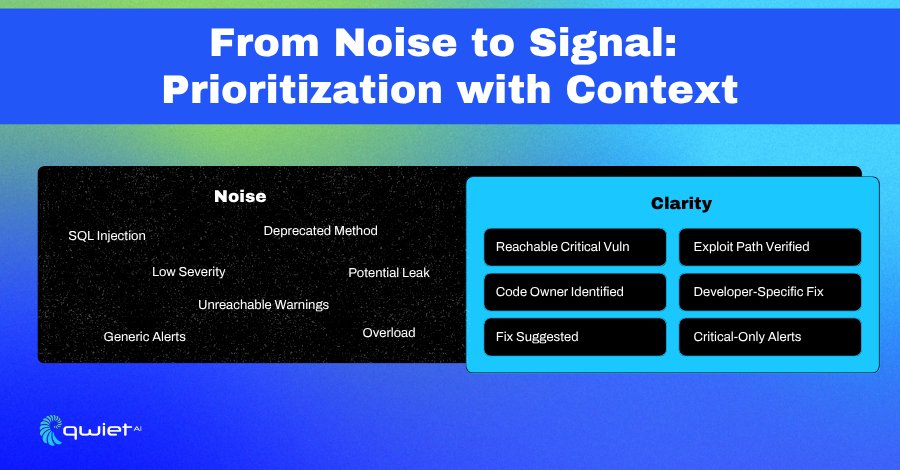

This kind of analysis brings focus to security findings. It filters out results that exist in unreachable code paths or are gated by logic that makes exploitation impossible. That matters because time spent chasing theoretical problems distracts from addressing the ones that could directly impact users or systems.

The findings shift from generic warnings to meaningful insight when reachability is factored into detection. Fewer alerts are generated, and those alerts carry more weight because they reflect exploitable paths, not just potential concerns.

Precision Over Pattern Matching

Static scanners are good at identifying syntax-level risks but rarely offer context. They report potential issues without indicating whether they are likely to occur. Contextual reachability is different. It traces the actual execution flow and data movement through your application to determine if the risk is real.

This requires more than looking at a single file or method in isolation. It means analyzing the relationships between functions, the conditions under which they run, and whether they’re tied to user input that hasn’t been sanitized or validated. It’s about connecting the pieces, not just spotting them.

When a tool understands execution paths, it can highlight a vulnerability and also show exactly how the exploit would work: where the data enters, how it moves, and where it lands. That clarity helps you fix the right things, faster.
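As a rough illustration of what "showing the path" can look like, the sketch below records parent pointers during a breadth-first search and rebuilds the route from entry point to sink. The `find_path` helper and the flow graph are assumptions made up for this example, not output from a real tool:

```python
from collections import deque

def find_path(graph, source, sink):
    """Return the shortest source-to-sink path as a list, or None."""
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == sink:
            # Walk the parent pointers back to the source, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None

# Hypothetical flow: a request parameter ends up in a shell command.
flow = {
    "request.args['file']": ["normalize_name"],
    "normalize_name": ["build_command"],
    "build_command": ["subprocess.run"],
}
path = find_path(flow, "request.args['file']", "subprocess.run")
print(" -> ".join(path))
# request.args['file'] -> normalize_name -> build_command -> subprocess.run
```

Each hop in the printed path is a place where a developer could add validation, which is what makes a path more useful than a bare line number.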

Less Guesswork, More Clarity

Unprioritized security findings lead to noise, and noise slows everything down. Developers have limited cycles to review and address issues, and most security tools inundate them with alerts that lack context. That kind of output burns time and erodes trust in the tools that produce it.

Contextual reachability addresses this by filtering out unreachable or low-impact findings. It surfaces the exposed and actionable vulnerabilities based on how the code is wired. This shifts security from static review to dynamic understanding.

When your security tooling shows where the vulnerability is and how it can be triggered, the response becomes sharper. Developers get clear signals, not loose suggestions. That difference is what helps engineering teams move quickly without ignoring real risk.

Why Agentic AI Matters

AI That Understands Code Behavior

Agentic AI is designed to function like an autonomous system that parses code and analyzes its structure and behavior. It doesn’t just return results; it maintains a state, evaluates context, and determines whether something is worth surfacing. This means tracking how input flows through functions, how conditions impact execution, and whether specific code paths are reachable under runtime logic.

Most LLMs are reactive. You give them a prompt, and they generate a response. On their own, however, they don’t maintain a persistent state, map relationships between functions, or make real-time decisions based on control and data flow.

Agentic AI operates differently. It builds a model of the application as a whole, linking data inputs to outputs, understanding scope, and identifying which code regions are isolated versus exposed. That depth makes it possible to detect insecure code and understand its relevance in a real-world context.

How Qwiet AI Applies It

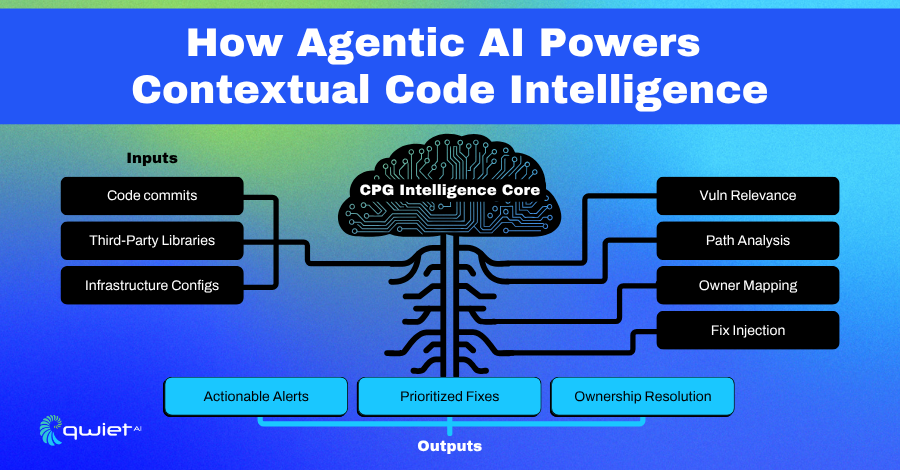

Qwiet AI’s agentic model goes deeper than surface-level scanning. It builds and queries a Code Property Graph (CPG), combining abstract syntax trees, control flow, and data flow into a unified application view. This lets the system reason across codebases, even when vulnerabilities span multiple files, modules, or microservices. It can trace whether a user-supplied input ever reaches a sensitive function, and if so, under what conditions.

It also tracks code ownership and responsibility. When a finding is discovered, the model can correlate it to the service or team most likely to maintain that part of the codebase.

This isn’t just about pointing out a problem; it connects that problem to the right people, providing enough context for them to act on it. Rather than asking developers to sift through raw scan output, Qwiet AI delivers focused, high-confidence findings that reflect how the system behaves, not just how it looks in a static snapshot.

The Developer Benefit: Less Noise, More Signal

Making Security Actionable

Most developers want to ship secure code, but it becomes harder when the tools throw every possible issue into the mix without indicating what’s dangerous. When everything looks like a potential problem, it’s hard to know where to start. This leads to stalls, missed priorities, and a growing backlog of alerts that may or may not be important.

Contextual reachability changes that. Instead of reporting every theoretical vulnerability, it focuses on what is exploitable. It cuts through static matches and surfaces what can be triggered in real code paths. That difference reduces the mental load during triage. It allows engineers to focus on the things that present real risk, not hypothetical edge cases that live behind dead branches or unreachable conditions.

Less Friction, More Clarity

Security tools that flood teams with raw findings slow everything down. When results aren’t prioritized, teams waste hours investigating or ignore the output entirely. Over time, that adds to alert fatigue and builds friction between development and security teams; nobody wins in that model.

Qwiet AI is designed to reduce that friction. Its agentic model doesn’t just identify the issue; it explains why it matters, where it can be reached, and how it fits into the overall execution path. The goal is clarity: fewer false positives, clearer impact, and a direct path to action. Instead of giving you a list of bugs, it tells you what to fix first and provides the context to fix it correctly the first time.

Operationalizing CPG and Agentic AI

How Contextual Reachability Runs Inside the Graph

Qwiet AI’s analysis starts by converting your application into a Code Property Graph (CPG), a unified model that merges abstract syntax, control flow, and data flow. This graph captures how different parts of the codebase interact, including across files, classes, and services. Every variable assignment, function call, and branching decision becomes part of a larger queryable structure.
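The toy structure below sketches how one graph can carry several kinds of relationships at once. It is a deliberate simplification: the `CPG` class, the node ids, and the three edge labels (`AST`, `CFG`, `DFG`) are assumptions for illustration and do not reflect Qwiet AI’s actual schema.

```python
from dataclasses import dataclass, field

@dataclass
class CPG:
    """Toy code property graph: one node set, several labeled edge kinds."""
    nodes: dict = field(default_factory=dict)  # node id -> source snippet
    edges: list = field(default_factory=list)  # (src, dst, kind) triples

    def add_node(self, node_id, snippet):
        self.nodes[node_id] = snippet

    def add_edge(self, src, dst, kind):
        self.edges.append((src, dst, kind))

    def successors(self, node_id, kind):
        """Follow only edges of one kind, e.g. just data flow."""
        return [d for s, d, k in self.edges if s == node_id and k == kind]

# Graph for the two-line program:  x = read_input();  run_query(x)
cpg = CPG()
cpg.add_node("assign", "x = read_input()")
cpg.add_node("call_read", "read_input()")
cpg.add_node("call_query", "run_query(x)")
cpg.add_node("arg_x", "x")

cpg.add_edge("assign", "call_read", "AST")   # syntax: assignment contains the call
cpg.add_edge("call_query", "arg_x", "AST")   # syntax: call contains its argument
cpg.add_edge("assign", "call_query", "CFG")  # control: statement 1 runs before 2
cpg.add_edge("call_read", "arg_x", "DFG")    # data: the input value reaches x

print(cpg.successors("assign", "CFG"))     # ['call_query']
print(cpg.successors("call_read", "DFG"))  # ['arg_x']
```

Keeping every edge kind in one structure means a single query can mix them, for example "follow data flow from this call, but only along statements that control flow can actually reach."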

From there, agentic AI analyzes these relationships to identify paths that matter from a security standpoint. It tracks untrusted input sources, observes how data flows through sanitization or control checks, and determines whether that data can reach known dangerous sinks. It doesn’t stop at finding the issue; it connects conditions, ownership, and context to help explain why it matters.

The output is a graph-backed result with proof of exploitability. Each finding is supported by a path showing how data flows from entry to sink, what filters (if any) are applied, and where a developer needs to intervene. That data is precise and tied to real execution logic, not speculation or heuristics.
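A simplified way to picture that evidence: enumerate the paths from entry to sink and note which known filters sit on each one. The sketch below assumes that passing through a sanitizer node is enough to neutralize the data, which real analysis cannot take for granted. The function names and the flow graph are hypothetical.

```python
def all_paths(graph, node, sink, path=()):
    """Yield every simple path from `node` to `sink` (depth-first)."""
    path = path + (node,)
    if node == sink:
        yield path
        return
    for nxt in graph.get(node, []):
        if nxt not in path:  # skip nodes already on this path to avoid cycles
            yield from all_paths(graph, nxt, sink, path)

def evidence(graph, source, sink, sanitizers):
    """Describe each path and the filters applied along it."""
    findings = []
    for path in all_paths(graph, source, sink):
        filters = [n for n in path if n in sanitizers]
        findings.append({
            "path": list(path),
            "filters": filters,
            "unfiltered": not filters,  # True when no sanitizer is on the path
        })
    return findings

# Hypothetical flow: one route escapes the input, the other does not.
flow = {
    "form_field": ["escape_html", "raw_template"],
    "escape_html": ["render"],
    "raw_template": ["render"],
}
for finding in evidence(flow, "form_field", "render", {"escape_html"}):
    print(finding)
```

Here the route through `raw_template` comes back with `"unfiltered": True`, which points at the exact hop where a developer needs to intervene.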

Applying Intelligence Across the Lifecycle

Once this intelligence is integrated into the pipeline, it reshapes how scanning, triage, and remediation are conducted. Instead of running flat scans that dump unfiltered results, Qwiet prioritizes based on reachability. It knows which vulnerabilities are just noise and which pose an actual threat, and that distinction makes all the difference during reviews and CI/CD gates.

When a finding appears, the AI engine can trace it back to the responsible code owner, highlight the part of the graph where data validation is missing, and surface it with a severity score grounded in context.
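The sketch below shows one way ownership lookup and context-aware scoring could fit together. Everything in it is an assumption for illustration: the `OWNERS` mapping, the longest-prefix rule, and the weighting in `contextual_score` are invented here and are not Qwiet AI’s scoring method.

```python
# Hypothetical mapping from path prefix to the team that maintains it.
OWNERS = {
    "services/payments/": "payments-team",
    "services/": "platform-team",
}

def owner_for(file_path, owners=OWNERS):
    """Pick the owner whose prefix matches the file; longest prefix wins."""
    matches = [prefix for prefix in owners if file_path.startswith(prefix)]
    return owners[max(matches, key=len)] if matches else "unassigned"

def contextual_score(base, reachable, validated):
    """Illustrative weighting only: scale a base severity by context."""
    if not reachable:
        return 0.0  # nothing can trigger it, so it drops out of the queue
    return base * (0.5 if validated else 1.0)

finding = {
    "file": "services/payments/charge.py",
    "base": 9.0,
    "reachable": True,
    "validated": False,
}
owner = owner_for(finding["file"])
score = contextual_score(finding["base"], finding["reachable"], finding["validated"])
print(owner, score)  # payments-team 9.0
```

The same base severity would score 4.5 behind validation and 0.0 on an unreachable path, which is the kind of separation that makes a triage queue easier to work through.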

Because each result is based on actual flow analysis, developers can see not only what to fix but also why that fix matters. That clarity speeds up decision-making and reduces the need for rework.

Recommendations aren’t static either. The system adjusts according to the application’s evolution. If a code path is refactored or new logic blocks data flow, the finding gets reprioritized or dropped. This constant recalibration helps teams stay aligned with real risk, not stale scan output.
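One simple way to picture this recalibration is to re-run reachability against the updated graph and sort existing findings into "still live" and "dropped." The graphs, finding ids, and the `recalibrate` helper below are hypothetical:

```python
def reachable_from(graph, source):
    """Set of every node reachable from `source` (iterative depth-first search)."""
    seen, stack = set(), [source]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph.get(node, []))
    return seen

def recalibrate(findings, graph, source):
    """Keep findings whose sink is still reachable; report the rest as dropped."""
    live = reachable_from(graph, source)
    kept = [f for f in findings if f["sink"] in live]
    dropped = [f for f in findings if f["sink"] not in live]
    return kept, dropped

findings = [
    {"id": "F1", "sink": "db.execute"},
    {"id": "F2", "sink": "os.system"},
]

before = {"user_input": ["handler"], "handler": ["db.execute", "os.system"]}
# After a refactor, the handler no longer passes input to the shell call.
after = {"user_input": ["handler"], "handler": ["db.execute"]}

print(recalibrate(findings, before, "user_input"))
print(recalibrate(findings, after, "user_input"))
```

Against the `before` graph both findings stay live. Against the `after` graph, `F2` moves to the dropped list without anyone having to close it by hand.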

What’s Next: Intelligence That Closes the Loop

Looking ahead, the fusion of CPG, agentic reasoning, and developer context unlocks automation that not only detects issues but also closes them out.

With a detailed understanding of data flow, control logic, and the code’s ownership structure, the model can begin to suggest where the problem lies and how to fix it, tailored to the specific project’s style and architecture.

There’s a clear path toward automated remediation that doesn’t operate in a vacuum. It accounts for reachability, avoids regressions, and proposes changes that align with the codebase’s development approach. These aren’t generic patches; they’re grounded in real structural analysis.

That kind of forward movement is how security can finally keep pace with modern development. As engineering velocity grows, having intelligent systems that adapt in real time, surface meaningful work, and streamline fixes gives teams more space to build without leaving risk behind.

Conclusion

Agentic AI and contextual reachability aren’t theoretical concepts; they’re working models that provide real insight into how modern applications can be secured. They move security forward by surfacing the vulnerabilities that matter and showing exactly how they can be reached. Teams using this approach can skip the noise, reduce wasted triage, and shift security left without slowing down development. If you’re ready to see what that looks like in your environment, book a demo and explore how Qwiet AI can help your team focus on signal, not static.

FAQs

What is agentic AI in application security?

Agentic AI is an autonomous system that can reason through code structure, track data flow, and make context-based decisions. In application security, it goes beyond simple detection and actively evaluates whether vulnerabilities are exploitable based on how the code behaves at runtime.

How is contextual reachability different from static scanning?

Contextual reachability analyzes whether a vulnerability is truly accessible during execution. Unlike static scanners that flag patterns without assessing control or data flow, this approach identifies actual exploit paths, reducing false positives and improving prioritization.

Why do developers prefer tools with contextual reachability?

Tools that support contextual reachability reduce alert fatigue by showing developers only the reachable and exploitable issues. This leads to faster triage, better focus, and fewer wasted cycles chasing non-issues.

How does Qwiet AI use agentic models for better detection?

Qwiet AI builds an application’s Code Property Graph (CPG), combining syntax, control, and data flow. Its agentic engine analyzes this graph to find vulnerabilities with real-world impact, helping teams focus on the findings that matter most.

Can agentic AI support automated remediation in the future?

Yes. With a deep structural understanding and ownership mapping, agentic AI can propose context-aware fixes tailored to the code’s structure, laying the foundation for automated, risk-aware remediation that aligns with development speed.