Key Takeaways

- False negatives pose a significant hidden risk by allowing real vulnerabilities to slip through security scans undetected, leaving systems exposed without raising alerts.

- Technical limitations, changing environments, and tool trade-offs are the main reasons false negatives persist, even after decades of AppSec progress.

- Reducing false negatives requires a comprehensive and layered strategy that blends static and dynamic analysis, real-world testing, and continuous tuning across integrated CI/CD pipelines. This approach ensures that vulnerabilities are identified, tested, and monitored throughout development and deployment.

Introduction

False negatives are one of the most dangerous problems in application security. They happen when scanners fail to detect real vulnerabilities, giving teams the impression that everything is secure. That gap exposes systems without anyone knowing there’s a problem until it’s too late. Even after decades of improvements in AppSec tooling, these misses create risk. So why does this keep happening, and what can teams do about it? In this article, we’ll explain how false negatives occur, why they persist, and what can realistically be done to reduce them.

What Are False Negatives in AppSec?

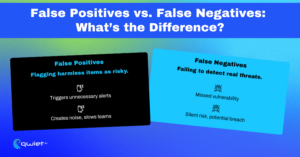

In application security, a false negative occurs when a tool scans your code, infrastructure, or system and reports that everything is safe when it’s not. It’s the opposite of a false positive, where the tool flags something as a problem even though it’s fine. With a false positive, you might waste time investigating a non-issue. With a false negative, you do not know that a real vulnerability is in your environment. False negatives are particularly dangerous because they give teams a false sense of security. If a scanner misses something critical, that vulnerability gets pushed forward in the pipeline, likely into production, with no alerts. The code passes review, no red flags are raised, and nobody is watching that door. Meanwhile, attackers who know how to exploit it can do so without resistance.

There are several technical reasons for false negatives. Many tools rely on static rules or signatures to identify risky code, and those can’t always account for real-world variation. Some miss vulnerabilities in dynamically typed languages, or only work well within specific frameworks. Others depend on heuristic models that struggle with complex or novel code patterns. When code deviates from expected behavior, the scanner might skip over it.

Environmental gaps also matter. Scanners often run in isolated test environments that don’t match real deployment conditions. They may lack access to runtime data, secrets, or infrastructure state. Add in complex code paths, conditional logic, third-party dependencies, and the increasing sophistication of attacker evasion tactics, and it becomes clear why even well-tuned tools can still miss something important.

What Is the Impact and Risk of False Negatives?

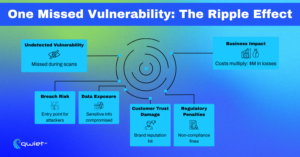

When a false negative occurs, a real vulnerability is missed and often goes unnoticed in production. This issue bypasses all the automated safeguards and gets deployed as if everything checked out. Once it’s live, the risk isn’t theoretical; it’s exposed and active, with no defenses because the system thinks it’s secure. This makes exploitation more likely and gives attackers more time to operate.

If no alerts are triggered, the vulnerability might remain open for days, weeks, or even longer if no alerts are triggered. That extended exposure increases the window for reconnaissance, privilege escalation, lateral movement, and data extraction. The longer the issue goes undetected, the more damage it can cause. This makes exploitation more likely and gives attackers more time to operate. If no alerts are triggered, the vulnerability might remain open for days, weeks, or even longer if no alerts are triggered. That extended exposure increases the window for reconnaissance, privilege escalation, lateral movement, and data extraction. The longer the issue goes undetected, the more damage it can cause.

False negatives can also lead to downstream problems beyond the original bug. Once exploited, these weaknesses can result in data leaks, infrastructure compromise, or full system breaches. Public exposure often leads to loss of customer trust, negative press, and significant financial fallout. In regulated industries, they can trigger compliance violations and penalties, mainly if teams rely on automated tools that falsely report everything as secure.

The impact isn’t isolated to systems; there’s also an internal effect on how teams operate. Security teams lose confidence in their tools, and developers question the value of checks that don’t surface the real problems. And in some cases, leadership may mistakenly assume the issues result from user error instead of detection failure. One missed vulnerability can multiply quickly, especially in distributed systems where code changes ripple through interconnected workloads. The fallout grows much faster than the original oversight.

Why Are We Still Struggling With False Negatives?

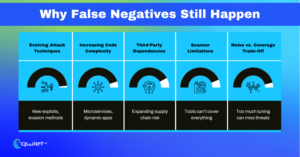

False negatives aren’t a new issue in application security. Teams have been aware of the problem for decades, and the tools to solve it have evolved significantly. However, despite the progress, false negatives still appear in every environment, from early-stage startups to mature enterprise pipelines. The question isn’t whether scanners are better than they used to be. They are. The problem is that the attack surface keeps shifting faster than the tools can fully adapt. Modern applications change constantly. Codebases evolve every day, often across multiple contributors and repositories. New frameworks, APIs, and cloud-native patterns introduce variables that weren’t even relevant a year ago.

Static rulesets and pattern-based scans have difficulty keeping up with that kind of movement, especially when the tool has to work across languages, build systems, and architectures. Third-party and open-source components add another layer of complexity. Today, most projects rely heavily on external packages, and those dependencies often have their risks. Vulnerabilities in these packages might not surface through standard static analysis, and some scanners don’t track indirect dependencies well. That leaves gaps in visibility, primarily when relying on older or less dynamic scanning tools. Scanner models themselves have limitations. Some tools prioritize speed, sacrificing depth. Others are tuned tightly to avoid overwhelming users with false positives, but that same tuning often causes them to miss edge cases.

There’s always a balance between detecting as much as possible and keeping the signal clean. Pushing too far in either direction creates blind spots or noise that does not help. That tension between ideal detection and practical usability keeps false negatives on the table. No tool can catch everything without creating trade-offs elsewhere. Even the most advanced platforms must choose what to scan, how deeply to scan it, and how much context they need to be accurate. So we’re not just dealing with a technology problem; we’re dealing with the limits of what any static or dynamic scanner can reliably deliver in a constantly changing environment.

How Can We Reduce False Negatives and Why Can’t We Fully Eliminate Them?

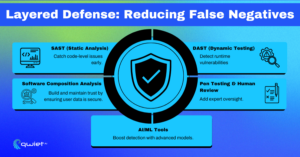

Reducing false negatives starts with layering multiple types of security tools. Static analysis tools can flag known coding flaws, dynamic testing can observe behavior during runtime, and software composition analysis can track vulnerable dependencies. No single tool sees the whole picture, but each has different strengths. When used together, they cover more ground and reduce the chances of something slipping through. Better CI/CD integration helps scanners operate with more context. Tools can deliver more accurate results when they can access complete configuration files, merged branches, build artifacts, and runtime variables. Many patterns that create false negatives stem from a lack of visibility into how the code is assembled and deployed. Integrating security checks earlier in the pipeline and giving them access to the real system state improves accuracy.

Scanners also need tuning over time. As applications change, so does the surface area that tools need to scan. Code that was safe last year might be risky because of dependency updates, new user inputs, or infrastructure changes. Treating security tools as static means accepting more false negatives. Ongoing tuning guided by factual findings and feedback helps keep detection relevant. Real-world testing methods like fuzzing and manual penetration testing still play an essential role. Automated tools are fast and scalable, but they tend to work off predefined rules or known patterns. Human testers and fuzzers introduce unpredictable behavior that mimics how actual attackers might probe the system, adding another layer of defense against missed issues.

No scanner will ever catch everything. Even the most advanced tools have blind spots due to unknown vulnerabilities, novel attack vectors, or complex code logic. AI and machine learning are starting to change how scanners find problems, but they also come with trade-offs like new types of false positives or gaps in explainability. The best results still come from combining strong tools, experienced people, and flexible processes. That blend is how teams can reduce the likelihood of false negatives, even if they can’t eliminate them.

Conclusion

False negatives continue to be a persistent and damaging issue in application security. No tool can detect every vulnerability, and the cost of a missed one can be high. Teams must combine automated tools, manual testing, and continuous refinement to reduce exposure. It’s not about what the tools are catching; it’s about staying sharp enough to ask what they might be missing.

Book a demo with Qwiet to see how we help reduce false negatives and improve security confidence across your pipeline.

FAQ

What is a false negative in application security?

A false negative in AppSec occurs when a scanner fails to detect a real vulnerability, incorrectly reporting that the code or configuration is safe when it’s not.

Why are false negatives dangerous in cybersecurity?

False negatives are dangerous because they give teams a false sense of security, allowing real vulnerabilities to reach production unnoticed and increasing the risk of exploitation.

How do false negatives happen in security scanning?

They result from limited scanner rules, incomplete environment replication, complex code paths, or attackers’ evasion techniques.

Can false negatives in AppSec be eliminated?

No. While they can be reduced with layered tools and continuous tuning, no security system can guarantee 100% detection due to evolving codebases and unknown attack vectors.

How can teams reduce false negatives in application security?

Use a multi-tool strategy that combines static and dynamic analysis, integrates security checks into CI/CD pipelines, and includes real-world testing like fuzzing and pen testing.