Key Takeaways

- AI-generated code is not inherently more or less secure than human-written code. The risks depend on how the code is reviewed, tested, and validated, not on who or what wrote it.

- Security scanners treat both AI and human code the same way. They analyze syntax, structure, dependencies, and behaviors without considering the source of the code.

- The best results come from combining AI coding tools with human oversight. AI’s speed and pattern-matching capabilities, paired with human judgment and robust AppSec practices, lead to safer code. Human oversight remains essential in code generation, even in the era of AI.

Introduction

As AI-generated code becomes more common in day-to-day development, teams are asking whether it’s fundamentally different from human-written code and whether security tools need to evolve. Do AppSec scanners need to treat AI output differently, or are the risks the same regardless of the author? In this article, we’ll cover how human and AI code differ in practice, which risks are unique (or not), how scanners interpret both, and why the focus should remain on validation, not origin. The core idea is simple: good code is good code, bad code is bad code, and both get scanned the same way.

What’s the Difference Between Human-Written and AI-Generated Code?

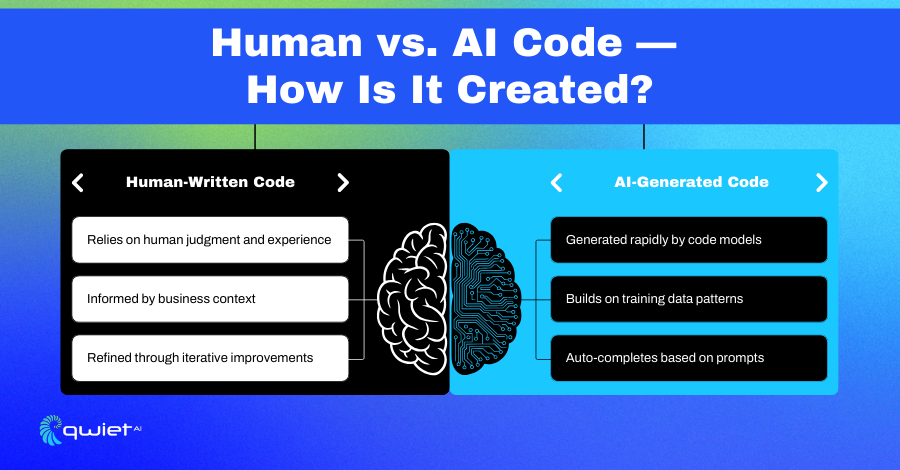

Human-written code is created through a hands-on, iterative process. Developers typically work with full knowledge of the project context, adjust based on feedback, and build solutions that align with architecture, business logic, and team conventions. Their decisions draw on experience, familiar patterns, and judgment calls that reflect how the broader system behaves.

AI-generated code is produced by prompt-driven models that generate solutions based on their training data. It’s often used for smaller, isolated tasks, such as writing a single function, script, or code block in response to a specific prompt. The model doesn’t know the larger context of the project unless that context is explicitly included, and even then, it may not fully grasp the surrounding intent or dependencies.

There are clear differences in how the two are produced. AI can generate code quickly, sometimes at a scale humans can’t match. It tends to repeat known patterns and, if not prompted carefully, may rely on outdated or insecure techniques drawn from its training data. It may also reproduce vulnerable code if that code was present in its training set. Human-written code may be slower to produce, but it’s shaped by reasoning, real-time awareness, and structured review cycles. Despite these differences, the result is code either way. Whether a person wrote it or a model generated it, the output must be tested, scanned, and validated.

The source of the code does not determine whether it is vulnerable. What truly matters is how the code is reviewed and secured before it runs in production. When every change goes through the same validation, responsibility for the safety and reliability of the codebase is shared across the team, whether a given change was written by hand or generated with AI.

What Risks Are Unique (or Not) to AI-Generated Code?

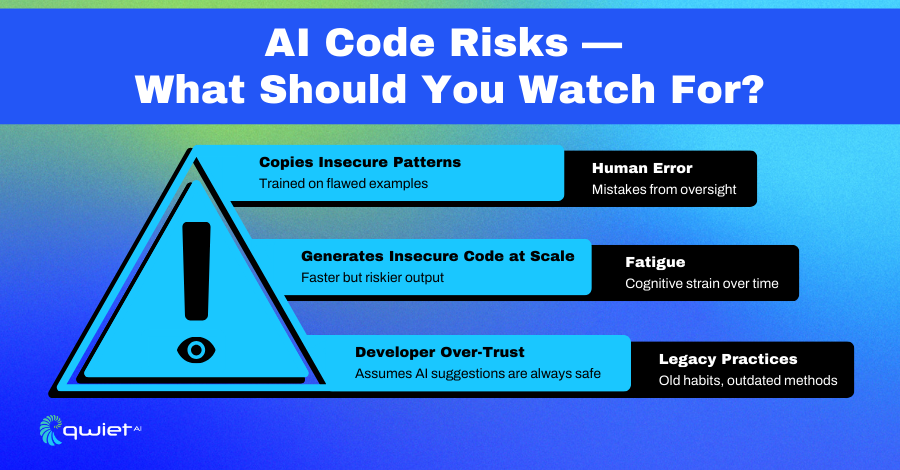

AI-generated code doesn’t automatically come with more vulnerabilities, but it introduces risk in different ways. One of the more common issues is that AI can repeat insecure patterns it saw during training. If the model was exposed to flawed or outdated examples, it may generate code that replicates those mistakes without signaling that anything is wrong. Scale is another factor: AI can produce a large amount of code quickly, so insecure code can be generated and copied faster than most teams can review it. Without strong guardrails or oversight, that volume makes it easier for weak or unsafe logic to reach pull requests or production branches.
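To make “insecure patterns” concrete, here is a minimal Python sketch of one flaw that is common in public code and can therefore surface in model output: building SQL queries by string concatenation. The `users` table and the function names are hypothetical, chosen only for illustration, and the standard-library `sqlite3` module stands in for whatever database a real project uses.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Insecure pattern: user input is concatenated into the SQL text,
    # so a crafted username can change the query's logic (SQL injection).
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # Safer pattern: a parameterized query keeps the input as data, not SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


if __name__ == "__main__":
    # Hypothetical in-memory table used only to demonstrate the difference.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row is returned
    print(len(find_user_safe(conn, payload)))    # 0: payload treated as a literal name
```

The unsafe version is equally dangerous whether a developer or a model wrote it, which is why review and scanning, not authorship, should decide whether it ships.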

There’s also the risk of over-trust. Developers may assume that code is correct or optimized simply because an AI produced it. That mindset can lead to skipped reviews, unchecked assumptions, and code that merges without the scrutiny applied to human-written submissions. If the team treats the output as authoritative, mistakes can slip through without proper checks.

Human-written code carries its own risks: fatigue, time pressure, and inconsistent standards can lead to oversights. Long-standing habits or outdated practices may persist if the team isn’t actively reviewing them. These risks don’t come from malice or carelessness; they are simply the reality of working at speed on complex systems.

The bottom line is that risk isn’t tied to who or what writes the code. What matters is how the code is verified before it runs. Whether the source is a person or a model, all code needs the same level of scrutiny to be considered safe, and scanning, testing, and review practices still carry the most weight in catching problems early.
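One way to put “the same level of scrutiny” into practice is a merge policy that never consults authorship. The sketch below is a hypothetical Python model of such a gate, not any particular CI product’s API; the `Change` record, its fields, and the three required checks are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Change:
    # Hypothetical record for a pull request. `origin` is kept for
    # traceability but is deliberately ignored by the gate below.
    origin: str  # e.g. "human" or "ai"
    scan_findings: list[str] = field(default_factory=list)
    tests_passed: bool = False
    reviewed: bool = False


def may_merge(change: Change) -> bool:
    # The same three checks apply to every change, whatever its origin:
    # no open scanner findings, passing tests, and a completed review.
    return not change.scan_findings and change.tests_passed and change.reviewed


if __name__ == "__main__":
    ai_change = Change(origin="ai", tests_passed=True, reviewed=True)
    human_change = Change(
        origin="human",
        scan_findings=["possible hardcoded secret"],
        tests_passed=True,
        reviewed=True,
    )
    print(may_merge(ai_change))     # True
    print(may_merge(human_change))  # False
```

Keeping `origin` on the record can still be useful for auditing or metrics; the point of this design is that the merge decision itself never branches on it.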

How Do Security Scanners Treat AI vs. Human Code?

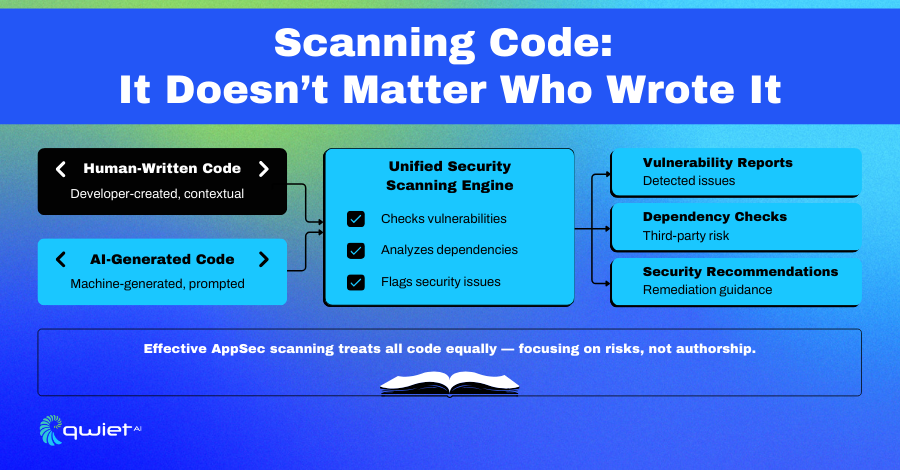

Security scanners do not discriminate based on the origin of the code. They treat all code equally, focusing on patterns, syntax, structure, dependencies, and behavior. Whether a function was typed by a developer or generated by a model, the scanner runs the same checks. Vulnerability detection depends on what the code does, not how it was created. Insecure APIs, hardcoded secrets, exposed tokens, and unsafe logic follow recognizable patterns, and those patterns are what scanners are built to catch. Whether the code came from a senior engineer or a prompt to a language model, the risks look the same to the tool doing the analysis.
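As a rough illustration of origin-agnostic scanning, the toy Python scanner below takes only source text as input, so it has no way to know who or what wrote the code. Its two rules (a regex for credential-like assignments and an `ast` walk for calls to `eval()`) are simplified assumptions for this sketch; production scanners use far larger rule sets and deeper analysis such as data-flow tracking.

```python
import ast
import re

# Deliberately simple heuristic: a credential-like name assigned a string
# literal. Real secret detection uses many more rules and entropy checks.
SECRET_PATTERN = re.compile(
    r"""(?i)(api_key|secret|password|token)\s*=\s*["'][^"']+["']"""
)


def scan(source: str) -> list[str]:
    """Return findings for `source`. The only input is the code text:
    there is no parameter for who or what wrote it."""
    findings = []
    # Rule 1: line-by-line pattern match for hardcoded secrets.
    for lineno, line in enumerate(source.splitlines(), start=1):
        if SECRET_PATTERN.search(line):
            findings.append(f"line {lineno}: possible hardcoded secret")
    # Rule 2: walk the syntax tree and flag direct calls to eval().
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "eval"
        ):
            findings.append(f"line {node.lineno}: use of eval()")
    return findings


if __name__ == "__main__":
    snippet = 'API_KEY = "sk-test-123"\nresult = eval(user_input)\n'
    print(scan(snippet))
    # ['line 1: possible hardcoded secret', 'line 2: use of eval()']
```

The same `snippet` produces the same findings whether it was pasted from a chat window or typed by hand, which is the property the paragraph above describes.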

That said, there may be adjustments ahead. As AI-generated code becomes more common, some scanners may need to get better at interpreting common generation quirks, such as repetitive structures, overly generic implementations, or missing edge-case handling. But those are refinements, not a complete rewrite of how scanning works. There’s a common belief that AI-generated code needs its own category of tools. In reality, what’s required are accurate, well-maintained scanners with strong coverage and good context. The quality of the scan matters more than the origin of the code, and good tools can handle both without being redesigned from scratch.
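“Missing edge-case handling” often looks like code that works for the obvious input and never considers the hostile one. The hypothetical Python helper below resolves an uploaded file name inside a base directory: the unsafe version handles `report.txt` correctly but lets `../` sequences escape the directory (path traversal). The function names, the `/srv/uploads` path, and the realpath-plus-`commonpath` check are illustrative choices for this sketch, not the only correct mitigation.

```python
import os


def resolve_upload_unsafe(base_dir: str, filename: str) -> str:
    # Happy-path version: fine for "report.txt", but it never considers
    # inputs like "../../etc/passwd" that escape base_dir.
    return os.path.join(base_dir, filename)


def resolve_upload_safe(base_dir: str, filename: str) -> str:
    # Edge case handled: resolve the final path (following symlinks and
    # "..") and confirm it still lives under base_dir before returning it.
    base = os.path.realpath(base_dir)
    target = os.path.realpath(os.path.join(base, filename))
    if os.path.commonpath([base, target]) != base:
        raise ValueError(f"path escapes upload directory: {filename!r}")
    return target


if __name__ == "__main__":
    base = "/srv/uploads"  # hypothetical upload directory
    print(resolve_upload_unsafe(base, "../../etc/passwd"))
    try:
        resolve_upload_safe(base, "../../etc/passwd")
    except ValueError as exc:
        print(exc)
```

A unit test that feeds in a `../` name, or a scanner rule for unvalidated path joins, catches the first version regardless of who wrote it.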

Which Is “Better”: Human or AI Code?

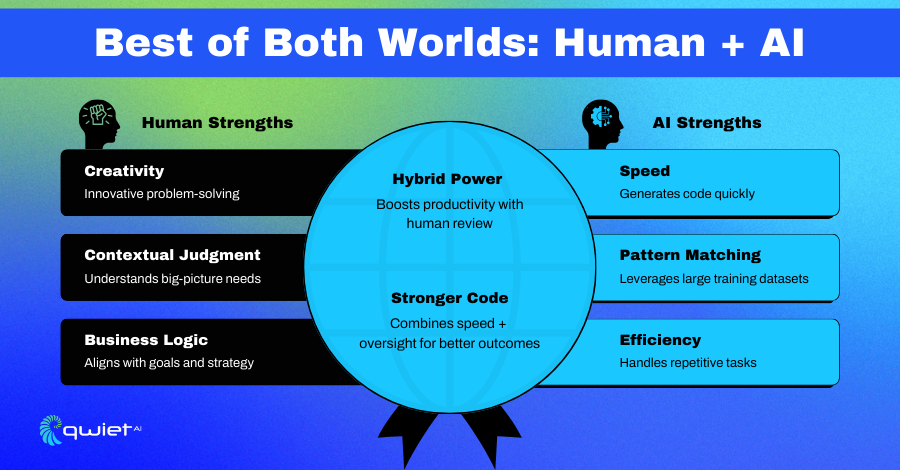

AI-generated code is useful when speed is the priority. It generates boilerplate, fills in repetitive logic, and surfaces common patterns quickly. In many cases, it can move a task forward faster than someone working from scratch. It also avoids certain human habits, like inconsistent naming or skipped structure.

Human-written code brings different strengths. Developers bring context, business logic, and experience that AI lacks. Their decisions are shaped by architecture, prior discussions, and long-term goals. That judgment is hard to replicate, especially when the code affects user experience, system integration, or performance trade-offs.

In practice, most teams see the best results when both are used together. AI tools can accelerate output and reduce repetitive work, while human developers ensure the output fits the project’s needs. That combination only works when there’s a review process in place to keep quality high.

Security doesn’t care where the code came from. Vulnerabilities follow the same patterns, whether generated or written by hand. Both need scanning, testing, and validation before they’re pushed forward. Skipping that step, in either case, opens the door to risk.

The better code is the one that’s been reviewed and secured. Whether it starts with a human or a model, code earns confidence only after it has been checked, tested, and verified to hold up under real-world use.

Conclusion

It doesn’t matter whether code is written by a developer or generated by an AI model; vulnerabilities can appear either way. What matters is how thoroughly that code is reviewed, tested, and secured before it reaches production. The most effective teams don’t debate who wrote the code; they build strong pipelines that catch issues early and reliably. Book a demo with Qwiet to see how we help bring that standard into your workflow.

FAQs

Is AI-generated code more vulnerable than human-written code?

No. AI-generated code is not inherently more vulnerable, but it can introduce risk quickly and at scale if not properly reviewed and tested.

Do security scanners treat AI-generated code differently?

No. Scanners evaluate code based on behavior, structure, and patterns regardless of whether it was written by a human or generated by AI.

Should AI-generated code go through security scans?

Yes. Like any code, AI-generated code should be scanned for vulnerabilities, misconfigurations, and dependency risks before it reaches production.

What are the main risks of using AI to generate code?

AI can replicate insecure patterns from training data, produce large volumes of unchecked code, and lead developers to over-trust the output without proper review.

What’s the best way to secure AI-generated code?

Integrate security scanning into your CI/CD pipeline, combine AI tools with human code reviews, and treat AI output with the same rigor as human-written code.