Shortcomings of static program analysis in practice

Creating programs that analyze other programs is a fascinating idea in itself. It hurts me to say that static code analysis has a remarkably bad reputation among practitioners. If you have performed security assessments and used these tools, you may agree that pinpointing their concrete shortcomings is not easy: you are left unsure whether they increase or decrease your productivity. It seems to me that the following problems are the most pronounced at the moment.

- Lack of adaptability. Static analysis tools operate as black boxes that eat code on one side and produce lists of findings on the other. As an analyst, you understand that this type of automation may be easy to buy and sell, but it does not help you in practice. It neither lets you contribute the knowledge you already have about an application to make the analysis more effective, nor exposes the powerful underlying analysis algorithms so that you can ask specific questions about the code base. You cannot ask the tool, and the tool cannot ask you.

- Painful generality. The tools are painfully generic and hard to adapt to the specifics of the code you are analyzing. For some reason, they never really handle the frameworks and libraries used in your particular application, so you find yourself working through a checklist that feels vaguely related to your code at best, with no good way to guide the analysis using what you know about the program.

- Lack of contextual understanding. The tools do not take context into account. Most go as far as checking for common anti-patterns in the use of widely used libraries. However, it is equally important to understand how the program communicates with the outside world in the first place, and where the attacker actually sits.

- Fire and forget. You can run the tool again in the future, but the knowledge you acquired in the current session is mostly lost. The next time you run it, you will wade through the same kinds of false positives again.

These are symptoms of the same problem: communication just doesn’t work, whether between you and the static analyzer, between you and the next person who will run it, or between you and the person who went through this exercise before you. Moreover, there is no way to build a knowledge base for code analysis that evolves as the code evolves.

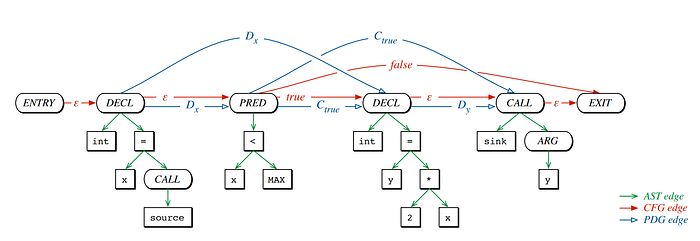

Background: Code Property Graphs

Before we delve into these new concepts, let me briefly talk about code property graphs (CPGs). The code property graph is based on a simple observation: there are many different graph representations of code, and patterns in code can often be expressed as patterns in these graphs. While these representations all describe the same code, some properties are easier to express in one representation than in another. So why not merge the representations to gain their joint power and, while we are at it, express the result as a property graph, the native storage format of graph databases, so that patterns can be expressed as graph-database queries?
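To make the idea concrete, here is a minimal sketch in Python, assuming a toy in-memory property graph rather than a real graph database. The node kinds (`ASSIGN`, `CALL`, `ARGUMENT`), the edge labels `AST`, `CFG`, and `REACHES` (data dependence), and the names `read_input`, `build_example`, and `input_controlled_memcpy_lengths` are hypothetical and do not reflect the schema of any particular CPG implementation. The query combines two representations: syntax tells us which argument of `memcpy` is the length, and data dependence tells us where that value was defined.

```python
from collections import defaultdict


class PropertyGraph:
    """Minimal property graph: nodes carry key/value properties, edges carry a label."""

    def __init__(self):
        self.props = {}                 # node id -> dict of properties
        self.edges = defaultdict(list)  # node id -> [(label, destination id)]

    def add_node(self, nid, **props):
        self.props[nid] = props

    def add_edge(self, src, dst, label):
        self.edges[src].append((label, dst))

    def succ(self, nid, label):
        """Destinations of outgoing edges with the given label."""
        return [dst for (lbl, dst) in self.edges.get(nid, []) if lbl == label]

    def pred(self, nid, label):
        """Sources of incoming edges with the given label."""
        return [src for src, out in self.edges.items()
                for (lbl, dst) in out if lbl == label and dst == nid]


def build_example():
    """Hand-built graph for: n = read_input(); if (n > 0) memcpy(buf, src, n); memcpy(buf, src, 16);"""
    g = PropertyGraph()
    g.add_node("s1", kind="ASSIGN", code="n = read_input()")
    g.add_node("c1", kind="CALL", name="read_input")
    g.add_node("s2", kind="CONDITION", code="n > 0")
    g.add_node("s3", kind="CALL", name="memcpy", code="memcpy(buf, src, n)")
    g.add_node("a3", kind="ARGUMENT", index=3, code="n")
    g.add_node("s4", kind="CALL", name="memcpy", code="memcpy(buf, src, 16)")
    g.add_node("a4", kind="ARGUMENT", index=3, code="16")
    # Syntax (AST), control flow (CFG), and data dependence (REACHES) in one graph.
    for src, dst in [("s1", "c1"), ("s3", "a3"), ("s4", "a4")]:
        g.add_edge(src, dst, "AST")
    for src, dst in [("s1", "s2"), ("s2", "s3"), ("s2", "s4"), ("s3", "s4")]:
        g.add_edge(src, dst, "CFG")
    for src, dst in [("s1", "s2"), ("s1", "a3")]:
        g.add_edge(src, dst, "REACHES")
    return g


def input_controlled_memcpy_lengths(g):
    """memcpy calls whose length argument (AST) is defined by a read_input call (REACHES)."""
    hits = []
    for nid, p in g.props.items():
        if p.get("kind") != "CALL" or p.get("name") != "memcpy":
            continue
        for arg in g.succ(nid, "AST"):
            if g.props[arg].get("index") != 3:
                continue
            for definer in g.pred(arg, "REACHES"):
                if any(g.props[c].get("name") == "read_input"
                       for c in g.succ(definer, "AST")):
                    hits.append(nid)
    return hits


print(input_controlled_memcpy_lengths(build_example()))  # ['s3']
```

The `CFG` edges are stored but unused by this query; a fuller query could also walk them, for instance to ask whether a bounds check precedes the call.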

This original idea was published by Nico Golde, Daniel Arp, Konrad Rieck, and myself at the IEEE Symposium on Security and Privacy in 2014 [1], and extended for inter-procedural analysis one year later [2]. The definition of the code property graph is very liberal: it asks only for certain structures to be merged and leaves the graph schema open. The work presents a concept and a data structure, along with basic querying algorithms to uncover flaws in programs, and assumes that someone, somehow, creates the graph for the target programming language. Consequently, concrete implementations of code property graphs differ substantially.

Semantic Code Property Graphs

With the semantic code property graph we created at Qwiet AI, we add detail to the original definition of the code property graph, specifying exactly how program semantics are integrated into the graph. This provides the basis for language-neutral analysis via CPGs and, at the same time, increases precision and scalability. To achieve this, we build on two well-understood concepts from compiler construction and the analysis of machine code: intermediate languages and function summaries.

Language-neutral analysis via intermediate languages

Looking at the way compilers implement optimization algorithms, you will see that they deal directly with neither the source language nor the resulting machine code. Instead, they operate on an intermediate language that may contain language-dependent or platform-dependent instructions but is mostly language-neutral. Transformations can thus be implemented once, against the intermediate language, and then work for all source languages. At the other end of the compilation pipeline, reverse engineers lift machine code back into intermediate languages for the same purpose: to obtain platform-independent analysis (see REIL [3]).

So why not build code property graphs on that idea, that is, over an intermediate language designed specifically for code querying, one that focuses on program semantics and abstracts away from the source language? The semantic code property graph is our implementation of this idea.

We constructed the semantic code property graph by iteratively defining the basic elements of an intermediate language. It differs from typical compiler intermediate languages in that it preserves high-level information wherever that information is useful for querying. For example, it is important for us to preserve variable names, and it matters whether we are dealing with an array access or an access to a structure.
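The following sketch illustrates the idea under simplifying assumptions: two toy front ends, one for Python-like and one for Java-like syntax trees encoded as tuples, lower into the same small intermediate representation. The IR classes, the operator names `assignment`, `fieldAccess`, and `indexAccess`, and the helper `writes_to_field` are hypothetical, not the actual semantic CPG schema. Variable names survive lowering, and field access and array access remain distinct operators, so a single query works across both languages without confusing `user.password` with `user["password"]`.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Ident:
    name: str      # source-level variable names are preserved


@dataclass(frozen=True)
class Lit:
    value: object


@dataclass(frozen=True)
class Call:
    op: str        # every operation is a call to a named operator
    args: tuple


Expr = Union[Ident, Lit, Call]

# Each front end only maps its own node kinds onto the shared IR kinds.
PY = {"Name": "ident", "Const": "lit", "Attribute": "fieldAccess",
      "Subscript": "indexAccess", "Assign": "assignment"}
JAVA = {"var": "ident", "literal": "lit", "field": "fieldAccess",
        "arrayRef": "indexAccess", "assign": "assignment"}


def lower(node, table) -> Expr:
    """Lower a tuple-encoded source AST into the shared IR."""
    kind = table[node[0]]
    if kind == "ident":
        return Ident(node[1])
    if kind == "lit":
        return Lit(node[1])
    if kind == "fieldAccess":
        # The field name is kept as a literal so that queries can match on it.
        return Call(kind, (lower(node[1], table), Lit(node[2])))
    return Call(kind, tuple(lower(child, table) for child in node[1:]))


def writes_to_field(stmt: Expr, field: str) -> bool:
    """Language-neutral query: does this statement assign to obj.<field>?"""
    return (isinstance(stmt, Call) and stmt.op == "assignment"
            and isinstance(stmt.args[0], Call)
            and stmt.args[0].op == "fieldAccess"
            and stmt.args[0].args[1] == Lit(field))


py_stmt = ("Assign", ("Attribute", ("Name", "user"), "password"), ("Name", "pw"))
java_stmt = ("assign", ("field", ("var", "user"), "password"), ("var", "pw"))
array_stmt = ("Assign", ("Subscript", ("Name", "user"), ("Const", "password")), ("Name", "pw"))

print(lower(py_stmt, PY) == lower(java_stmt, JAVA))        # True
print(writes_to_field(lower(array_stmt, PY), "password"))  # False
```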

Providing semantics via function summaries

In addition to focusing on program semantics in the design of the intermediate language, we provide a mechanism to easily integrate the semantics of language-specific operations, as well as the semantics of interfacing with the outside world via libraries. We achieve this by representing all operations of a program, both built-in operators and calls into libraries, as function calls. We can then introduce semantics for an operation simply by describing how its arguments affect each other and how they affect the return value.
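A minimal sketch of operators-as-calls, assuming a straight-line program in which every operation is a call `(result, operator, arguments)`. The operator names `op:assign`, `op:add`, and `op:index` and the position convention (0 for the return value, 1..n for arguments) are hypothetical. The propagation engine knows nothing about any operator; all semantics come from the summary table, and an operator without a summary simply propagates nothing.

```python
# Hypothetical operator names. In each summary, position 0 is the return value
# and positions 1..n are the call's arguments; (src, dst) means "data flows src -> dst".
SUMMARIES = {
    "op:assign": [(2, 1)],          # a = b: b flows into a
    "op:add":    [(1, 0), (2, 0)],  # a + b: both operands flow into the result
    "op:index":  [(1, 0)],          # a[i]: the array's contents flow into the result
}


def propagate(program, tainted):
    """Forward taint propagation over straight-line code, driven only by summaries."""
    tainted = set(tainted)
    for result, op, args in program:
        slots = [result] + list(args)            # slots[0] is the return value
        for src, dst in SUMMARIES.get(op, []):   # no summary: no flow
            if slots[src] in tainted and slots[dst] is not None:
                tainted.add(slots[dst])
    return tainted


# c = a[i] + b, with every operation represented as a call.
PROGRAM = [
    ("t1", "op:index", ["a", "i"]),
    ("t2", "op:add", ["t1", "b"]),
    (None, "op:assign", ["c", "t2"]),
]

print(sorted(propagate(PROGRAM, {"a"})))  # ['a', 'c', 't1', 't2']
print(sorted(propagate(PROGRAM, {"i"})))  # ['i']
```

With this summary for `op:index`, taint in the array `a` reaches `c`, while taint in the index `i` does not; changing that behavior means changing one table entry, not the engine.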

Using function summaries to describe the effects of function calls and external libraries is well understood, going as far back as the seminal work by Horwitz et al., where function summaries are used to increase the efficiency of interprocedural slicing [4]. That work makes it clear that function summaries can easily be added to graph-based program representations, so carrying the idea over to the code property graph is not problematic.

The concept has popped up here and there since [5, 6], and the message is simple: to adapt data-flow tracking to the libraries and frameworks an application actually uses, and to stop being painfully generic, we need a mechanism such as function summaries. The semantic code property graph combines function summaries with language neutrality. It not only integrates information about external libraries via function summaries, but also allows all operations of the programming language to be modeled the same way, since these are represented as function calls too.
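The following sketch, again with hypothetical names, shows why this matters for adaptability. Summary flows become edges in a small flow graph over variables, loosely in the spirit of summary edges in graph-based program representations, and reachability is a breadth-first search. The framework call `http_param`, the library call `url_decode`, and the sink `db_execute` are illustrative only. Without a summary for `url_decode`, the path from attacker-controlled `raw` to the SQL string `sql` is invisible; once an analyst supplies a one-line summary, it appears.

```python
from collections import defaultdict, deque

# Hypothetical summaries: position 0 is the return value, 1..n are arguments.
BUILTIN = {"op:assign": [(2, 1)], "op:concat": [(1, 0), (2, 0)]}


def flow_graph(program, summaries):
    """Variables become nodes; every applicable summary flow becomes an edge."""
    edges = defaultdict(set)
    for result, func, args in program:
        slots = [result] + list(args)   # slots[0] is the return value
        for src, dst in summaries.get(func, []):
            if slots[src] is not None and slots[dst] is not None:
                edges[slots[src]].add(slots[dst])
    return edges


def reaches(edges, source, target):
    """Breadth-first search from source along flow edges."""
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in edges.get(node, set()) - seen:
            seen.add(nxt)
            queue.append(nxt)
    return False


# Illustrative program: a framework source, a library call, string building, a sink.
PROGRAM = [
    ("raw", "http_param", ["req"]),
    ("q", "url_decode", ["raw"]),
    ("sql", "op:concat", ["select_prefix", "q"]),
    (None, "db_execute", ["sql"]),
]

print(reaches(flow_graph(PROGRAM, BUILTIN), "raw", "sql"))   # False: url_decode is opaque
with_lib = {**BUILTIN, "url_decode": [(1, 0)]}                # analyst-supplied summary
print(reaches(flow_graph(PROGRAM, with_lib), "raw", "sql"))  # True
```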

Security profiles and the shape of programs

The enhancements we have made to the code property graph provide a language-independent approach that is aware of libraries and frameworks. Building on this graph, let us return to the remaining problems of static analysis outlined previously: the lack of communication, and the lack of a way to preserve continuously evolving contextual knowledge. The harsh truth is that we cannot identify a flaw in a program unless we know where the attacker sits. We need to know how the program interfaces with the outside world, and which data it can trust and which it cannot. Unless we know this, the findings we produce will be dismissed as false positives.

The security profile is how we concentrate this information and allow it to be communicated between actors in the software development process. It provides an overview of an application’s role: the way it communicates with the outside world, the flows between these communication endpoints, the filtering of data along the way, and other properties of the code that are relevant to understanding the security of the code base in general. Rather than attempting to point to specific bugs, the security profile exposes the program’s role and shape. It shows what a program does and how it communicates, without judging. Based on the security profile, actors can define and enforce the communication policies that make sense for their platform, but only after seeing uncensored, summarized information about what their program does.
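As a rough illustration of the separation between describing and judging, the sketch below assumes that data flows between communication endpoints have already been extracted. `Flow`, `SecurityProfile`, `build_profile`, `violations`, and the example policy `http_to_sql_must_escape` are hypothetical names, not the format of the actual security profile. The profile only records entry points, exit points, and flows with the filters they pass through; the policy is a separate function supplied by whoever is responsible for the platform.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Flow:
    source: str          # where data enters, e.g. an HTTP endpoint
    sink: str            # where it leaves, e.g. a database query
    filters: tuple = ()  # sanitizers or validators on the path


@dataclass
class SecurityProfile:
    entry_points: set = field(default_factory=set)
    exit_points: set = field(default_factory=set)
    flows: list = field(default_factory=list)


def build_profile(flows):
    """Summarize how the program communicates; no verdicts are attached."""
    profile = SecurityProfile()
    for f in flows:
        profile.entry_points.add(f.source)
        profile.exit_points.add(f.sink)
        profile.flows.append(f)
    return profile


def violations(profile, policy):
    """Apply an actor-supplied policy on top of the neutral profile."""
    return [f for f in profile.flows if not policy(f)]


def http_to_sql_must_escape(flow):
    """Example policy: HTTP input reaching SQL must pass through sql_escape."""
    if flow.source.startswith("HTTP") and flow.sink == "SQL query":
        return "sql_escape" in flow.filters
    return True


FLOWS = [
    Flow("HTTP POST /login", "SQL query", ("sql_escape",)),
    Flow("HTTP GET /search", "SQL query"),
    Flow("config file", "log file"),
]

profile = build_profile(FLOWS)
print(sorted(profile.entry_points))
print(violations(profile, http_to_sql_must_escape))
```

A different actor could apply a stricter or looser policy to the same profile without regenerating it.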

The security profile not only provides insight into the code but also becomes the primary store for information added externally by any of the actors in the development process. If a finding is known to be a false positive, the profile records this and does not report the finding again upon regeneration. We no longer fire and forget, and we no longer perform an analysis that ignores information the analyst is eager to provide.
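One way such persistent knowledge could work is sketched below, assuming findings are plain dictionaries and annotations are stored as JSON. The `fingerprint` function, `AnnotationStore`, and `regenerate` are hypothetical. The design choice worth noting is that the fingerprint is derived from what a finding is about (rule, source, sink) rather than from its line number, so an analyst's false-positive verdict survives code that moves between runs.

```python
import hashlib
import json


def fingerprint(finding):
    """Stable ID based on what the finding is about, not on its (shifting) line number."""
    key = f"{finding['rule']}|{finding['source']}|{finding['sink']}"
    return hashlib.sha256(key.encode("utf-8")).hexdigest()[:16]


class AnnotationStore:
    """Analyst knowledge that outlives a single analysis run."""

    def __init__(self, data=None):
        self.false_positives = dict(data or {})  # fingerprint -> analyst's reason

    def mark_false_positive(self, finding, reason):
        self.false_positives[fingerprint(finding)] = reason

    def dumps(self):
        return json.dumps(self.false_positives, sort_keys=True)

    @classmethod
    def loads(cls, text):
        return cls(json.loads(text))


def regenerate(findings, store):
    """Drop findings the analyst has already classified as false positives."""
    return [f for f in findings if fingerprint(f) not in store.false_positives]


run1 = [
    {"rule": "sql-injection", "source": "HTTP GET /search", "sink": "db.query", "line": 42},
    {"rule": "xss", "source": "HTTP GET /profile", "sink": "render", "line": 88},
]
store = AnnotationStore()
store.mark_false_positive(run1[0], "parameter is validated against an allow-list upstream")
saved = store.dumps()  # persisted alongside the profile

# A later run: the code has moved, so every line number shifted by 10.
run2 = [dict(f, line=f["line"] + 10) for f in run1]
remaining = regenerate(run2, AnnotationStore.loads(saved))
print([f["rule"] for f in remaining])  # ['xss']
```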

We are currently eagerly working on the details of the security profile, and I am very much looking forward to sharing a whitepaper on the technical details with you soon.

References

(This blog post originally appeared on the ShiftLeft domain.)