Key Takeaways

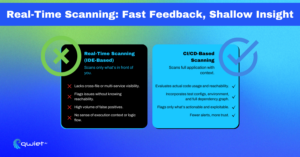

- Real-time scanning often creates noise without context: Tools running in the IDE or pre-save phase promise immediate feedback but can generate excessive, irrelevant alerts. They may flag unreachable or non-exploitable code, leading to alert fatigue and developer pushback.

- CI/CD scanning offers higher accuracy and better fixes: Scanning post-commit provides complete visibility into the codebase, data flows, and app structure, enabling more thoughtful prioritization and safer auto-fixing.

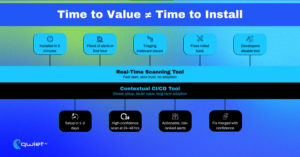

- Fast setup doesn’t equal long-term value: Tools that start quickly often require more tuning and suppression later. What matters is how well a tool fits into real workflows and drives trusted results over time.

Introduction

Real-time scanning and autofix features often promise faster feedback and shorter setup time, but that speed usually comes at the cost of accuracy and depth. Tools that scan in the IDE or pre-commit stage rarely have the full context to surface meaningful issues. Without visibility into the broader application, they generate alerts that don’t reflect actual risk. “Time to value” isn’t about how quickly a tool installs. It’s about how quickly it produces results you can trust without creating noise or slowing down development. This article breaks down the limits of real-time scanning, the downsides of shallow autofix, and why integrating security into CI/CD pipelines provides more meaningful outcomes.

Real-Time Scanning Sounds Great Until It Hits Reality

Real-time and pre-save scanning tools promise immediate feedback by scanning code as developers write it. The idea sounds useful: Get alerts in the IDE or on save and fix issues before the code hits a branch. But when applied to security, this model doesn’t hold up. These tools usually work off incomplete context. They can’t see how the code fits into the whole application, can’t follow inputs across services, and aren’t aware of how things behave outside the file currently being edited.

Without complete visibility, they tend to flag issues that aren’t exploitable. Something as simple as an eval() call in a test utility can trigger an alert, even if it’s never called or shipped. They can’t tell whether the flagged code is behind an auth layer, unused in production, or part of an unreachable legacy path. This leads to noisy findings that interrupt workflows without adding real value.

That kind of noise builds up quickly. When you get alerts that don’t reflect actual risk, trust in the tool starts to drop. Developers tune it out, alerts pile up, and the tool becomes something people click past rather than rely on. These tools are often great at catching style issues or basic code hygiene violations, but for deep application security, they tend to over-promise and under-deliver.
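As a rough illustration of the eval() example above, the sketch below compares a pattern-only check with one that also asks whether the flagged function is reachable from an entry point. The sample source, the function names, and the choice of `main` as the entry point are all hypothetical, and the name-based call graph is a deliberate simplification of what real reachability analysis involves.

```python
import ast

# Hypothetical module: eval() lives in a helper that nothing on the
# main() path ever calls.
SOURCE = '''
def run_expr(expr):
    return eval(expr)

def legacy_helper(expr):
    return run_expr(expr)

def handle_request(data):
    return data.strip()

def main():
    return handle_request(" hello ")
'''


def functions_calling_eval(tree):
    """Pattern-only view: names of functions whose body contains eval()."""
    flagged = set()
    for func in ast.walk(tree):
        if isinstance(func, ast.FunctionDef):
            for node in ast.walk(func):
                if (isinstance(node, ast.Call)
                        and isinstance(node.func, ast.Name)
                        and node.func.id == "eval"):
                    flagged.add(func.name)
    return flagged


def call_graph(tree):
    """Map each top-level function to the plain names it calls directly."""
    graph = {}
    for func in tree.body:
        if isinstance(func, ast.FunctionDef):
            graph[func.name] = {
                node.func.id
                for node in ast.walk(func)
                if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
            }
    return graph


def reachable_from(graph, entry):
    """Depth-first walk of the call graph starting at the entry point."""
    seen, stack = set(), [entry]
    while stack:
        name = stack.pop()
        if name in seen or name not in graph:
            continue
        seen.add(name)
        stack.extend(graph[name])
    return seen


tree = ast.parse(SOURCE)
pattern_findings = functions_calling_eval(tree)
reachable = reachable_from(call_graph(tree), "main")
contextual_findings = pattern_findings & reachable

print("pattern-only:", sorted(pattern_findings))
print("reachable from main:", sorted(contextual_findings))
```

The pattern-only pass reports `run_expr`, while the reachability-aware pass reports nothing, because no path from `main` leads to it. A scanner limited to the file being edited usually cannot make that second check.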

Autofixing in Real-Time? Not Without Context

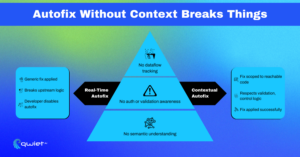

Real-time autofixes can look helpful on the surface, but the fixes they suggest are usually based on pattern-matching, with no actual understanding of how the application works. Most of the time, these tools apply a static rule (“sanitize this,” “escape that,” or “replace this method with a safer one”) without knowing what the code is doing in context. That might work for some surface-level problems, but these suggestions start falling apart once business logic or user-specific behavior is involved. Without visibility into where the data comes from, how it moves through the app, or what permissions should be in place, auto-fix suggestions quickly become unreliable. These tools don’t understand which inputs are already validated upstream or how a helper function might encapsulate access control. So when they recommend a fix, they might be patching something that isn’t vulnerable or, worse, introducing new problems into production code.

This leads to issues that are harder to clean up than the original alert. Fixes can break functionality, trigger side effects, or interfere with other logic. Teams often have to roll back these changes, manually rewrite the affected code, and waste time triaging something that should never have been flagged. It’s not that auto-fix can’t be helpful; it can, but the value only shows up when the tool understands what the code is doing, not just what it looks like. A good fix depends on knowing the purpose behind the code. For example, if a route is tenant-scoped, neutralizing the input is not enough; you need to understand what validation is already in place and whether the fix preserves the access model. If that part is missing, the fix might look clean, but it won’t be safe.
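To make the tenant-scoped example concrete, here is a minimal sketch of a lookup that a pattern-level fix "cleans up" without touching the access model, next to a version that also checks tenant ownership. The `Invoice` type, the in-memory `INVOICES` store, and every function name are hypothetical and only serve the illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    tenant_id: str
    total: int


# Hypothetical in-memory store standing in for a real database.
INVOICES = {
    "inv-1": Invoice("inv-1", "tenant-a", 120),
    "inv-2": Invoice("inv-2", "tenant-b", 300),
}


def neutralize(value: str) -> str:
    """Pattern-level fix: drop anything that is not alphanumeric or '-'."""
    return "".join(ch for ch in value if ch.isalnum() or ch == "-")


def get_invoice_pattern_fix(invoice_id: str) -> Optional[Invoice]:
    # The input is cleaned, but nothing ties the record to the caller's
    # tenant, so any tenant can read any invoice.
    return INVOICES.get(neutralize(invoice_id))


def get_invoice_scoped(invoice_id: str, caller_tenant: str) -> Optional[Invoice]:
    # Same input handling, plus the ownership check the access model needs.
    invoice = INVOICES.get(neutralize(invoice_id))
    if invoice is None or invoice.tenant_id != caller_tenant:
        return None
    return invoice


# A caller from tenant-a asks for tenant-b's invoice.
leaked = get_invoice_pattern_fix("inv-2")
blocked = get_invoice_scoped("inv-2", "tenant-a")
allowed = get_invoice_scoped("inv-1", "tenant-a")
```

Both functions pass a check that only asks whether the input is sanitized. Only the second preserves tenant isolation, and a tool cannot tell the two apart without knowing that the route is tenant-scoped.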

CI/CD Integration: The Real Sweet Spot for Time to Value

Security scanning inside the CI/CD pipeline gives you a clearer picture of what’s happening in your code. Instead of scanning isolated files or one-off snippets, the tool can analyze the entire codebase in context: dependencies, environment variables, middleware, data handling layers, and more. This is where findings start to reflect actual risk rather than surface-level code patterns. When you scan in CI/CD, the tool has access to everything it needs to evaluate reachability. It can tell whether user input flows into a vulnerable function or whether middleware, permissions, or data type constraints gate that path. This lets you focus on vulnerabilities that are exploitable, not just theoretically possible. That context also opens the door to batch-level autofixes tied to code that matters: code that is used, tested, and deployed.
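The reachability question described above can be sketched as a graph search: does any path lead from a user-controlled source to a sensitive sink without passing through a guard? The flow graph, node names, and guard set below are invented for illustration. Real tools derive this information from whole-program analysis rather than a hand-written dictionary.

```python
from collections import deque

# Hypothetical data-flow edges: each key passes data to the listed nodes.
FLOWS = {
    "http_param:q": ["search_handler"],
    "search_handler": ["build_query"],
    "build_query": ["db.execute"],
    "http_param:id": ["require_int"],
    "require_int": ["load_record"],
    "load_record": ["db.execute"],
}

# Nodes assumed to constrain or validate the data passing through them.
GUARDS = {"require_int"}


def unguarded_path(flows, guards, source, sink):
    """Breadth-first search for a source-to-sink path that avoids every guard.

    Returns the path as a list of node names, or None if every route
    to the sink is gated.
    """
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == sink:
            return path
        for nxt in flows.get(node, []):
            if nxt in guards or nxt in seen:
                continue
            seen.add(nxt)
            queue.append(path + [nxt])
    return None


exposed = unguarded_path(FLOWS, GUARDS, "http_param:q", "db.execute")
gated = unguarded_path(FLOWS, GUARDS, "http_param:id", "db.execute")

print("q  ->", exposed)
print("id ->", gated)
```

Both parameters end up at the same sink, so a pattern match on the sink alone would report them identically. With the flow graph available, only the `q` path is reported, because the `id` path is gated by a type check.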

The pipeline also introduces other dimensions that improve scan quality. You get complete visibility into dependency trees, internal service relationships, and even version control diffs. This makes it easier to focus scanning on what’s changed, deployed, or touched in a pull request. It reduces noise and makes it easier to align findings with business risk or engineering priorities. The result is fewer false positives, better-quality findings, and less disruption to dev workflows. Developers don’t need to wade through noise. They get context-aware alerts tied to real paths in the code, and security teams get a signal they can trust. It’s not just that CI/CD scanning catches more; it produces results people are more likely to act on.
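One way to picture the "touched in a pull request" idea is to keep only findings that land on lines a diff actually adds. The sketch below parses a small unified diff and filters a list of findings against it. The diff text, file paths, rule names, and the finding format are all made up for the example, and the parser ignores edge cases such as added lines that themselves begin with `++ `.

```python
import re

# Hypothetical pull-request diff in unified format.
DIFF = """\
diff --git a/app/views.py b/app/views.py
--- a/app/views.py
+++ b/app/views.py
@@ -10,3 +10,4 @@ def search(request):
     term = request.GET["q"]
+    query = build_query(term)
     rows = run(query)
     return rows
"""

# Captures the starting line number of the hunk in the new file.
HUNK = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


def added_lines(diff_text):
    """Return {path: set of new-file line numbers added by the diff}."""
    changed = {}
    path, lineno = None, 0
    for line in diff_text.splitlines():
        if line.startswith("+++ "):
            path = line[4:].removeprefix("b/")
            changed.setdefault(path, set())
        elif line.startswith("--- "):
            continue
        elif (match := HUNK.match(line)):
            lineno = int(match.group(1))
        elif path is not None and line.startswith("+"):
            changed[path].add(lineno)
            lineno += 1
        elif path is not None and line.startswith(" "):
            lineno += 1
        # Removed lines ("-") do not advance the new-file line counter.
    return changed


def in_pull_request(findings, changed):
    """Keep findings whose file and line were added by the diff."""
    return [f for f in findings if f["line"] in changed.get(f["path"], set())]


# Hypothetical scanner output.
findings = [
    {"path": "app/views.py", "line": 11, "rule": "sql-injection"},
    {"path": "app/views.py", "line": 42, "rule": "weak-hash"},
    {"path": "tests/util.py", "line": 7, "rule": "eval-use"},
]

changed = added_lines(DIFF)
relevant = in_pull_request(findings, changed)
print(relevant)
```

Filtering this way is a prioritization choice, not a verdict on the other findings. Issues outside the diff still exist and can be handled by scheduled full scans rather than pull-request checks.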

The Real Cost of “Fast” Setup Is Slower Dev Teams

It’s easy to be impressed by a tool that installs quickly and starts flagging issues within minutes. But speed at installation doesn’t always translate to value in practice. When tools start firing off alerts without context, they generate more noise than insight. That noise shows up as dozens or hundreds of issues that may not be relevant or exploitable, and that’s where things start to break down. When developers are flooded with alerts, especially ones that don’t reflect real risk, trust in the tool drops quickly. The issues pile up in the backlog, or they get ignored entirely. Teams stop triaging them, and security becomes something people work around instead of with. At that point, even valid findings are harder to act on because the signal is buried under too much irrelevant data.

The net result is friction in the engineering workflow. Pull requests slow down, security tickets stay open longer, and developers spend more time tuning or suppressing alerts than fixing anything. What looked like a fast setup turned into ongoing maintenance overhead, with security teams managing tool fatigue instead of improving coverage. This pattern shows up a lot. A team rolls out a scanner or IDE plugin that initially seems helpful until it starts flagging low-severity issues on nearly every file save. Within days, the team is overwhelmed. Within weeks, it’s disabled. Fast doesn’t matter if it doesn’t scale with how people build and ship code.

Conclusion

Speed means nothing if the findings aren’t relevant. Tools that scan without context generate false positives, introduce friction, and erode trust. Scanning in CI/CD, with full access to code, dependencies, and usage patterns, leads to better prioritization and cleaner fixes. If a tool slows teams down with noise, even if it starts fast, it’s not delivering value. The right setup gives developers accurate, context-aware feedback tied to actual behavior, not assumptions. That’s what moves the needle. Want to see how Qwiet delivers accurate, context-driven results without flooding your devs with noise? Book a demo and see how behavior-based scanning fits cleanly into your CI/CD pipeline.

FAQ

What is the downside of real-time security scanning in development?

Real-time security scanning often lacks context about how code is used across services or modules. It operates on incomplete information, leading to false positives and noisy alerts. These tools typically don’t account for reachability, authentication layers, or business logic, which limits their ability to surface meaningful vulnerabilities.

Why is CI/CD the best place for application security scanning?

CI/CD environments expose the whole codebase, configuration, and dependency graph, allowing security tools to analyze application behavior in context. Scanning at this stage makes it easier to identify reachable vulnerabilities and to prioritize issues based on what’s deployed or touched in a pull request.

Are real-time auto-fix suggestions reliable in secure coding?

Most real-time autofix tools use rule-based suggestions that do not understand data flow or business logic. Without proper context, these fixes can introduce regressions, break functionality, or patch the wrong issue. Reliable remediation requires understanding how inputs are validated and how permissions are enforced across the app.

What does “time to value” mean in application security?

In AppSec, “time to value” refers to how quickly a tool produces actionable, high-confidence findings without disrupting developer workflows. It’s not about how fast a tool installs but how well it integrates with CI/CD pipelines, reduces false positives, and aligns with how developers ship code.