Key Takeaways

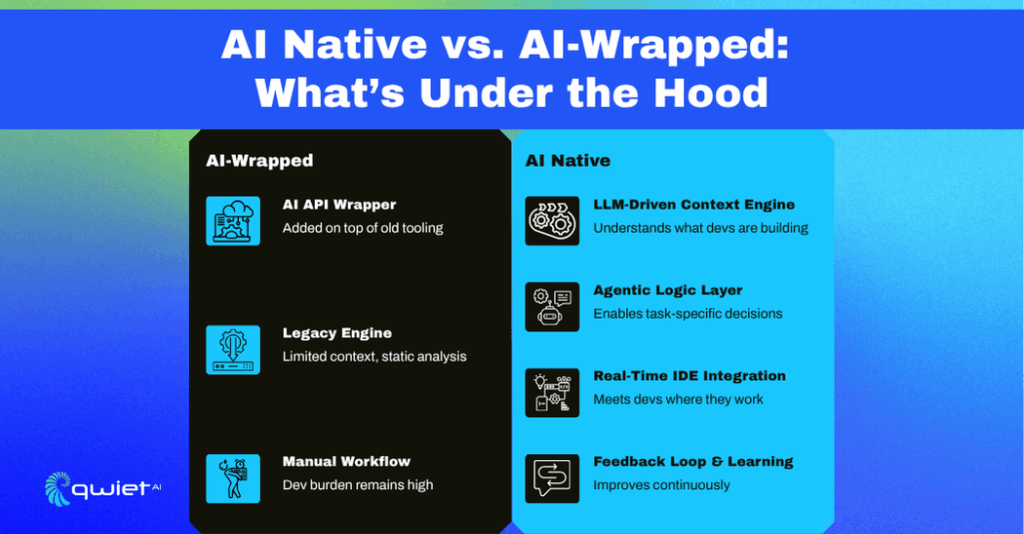

- AI Native means built, not bolted. It’s the difference between a platform that embeds AI into its architecture and one that adds it later as a feature. Only the former can deliver meaningful context, automation, and integration across the SDLC.

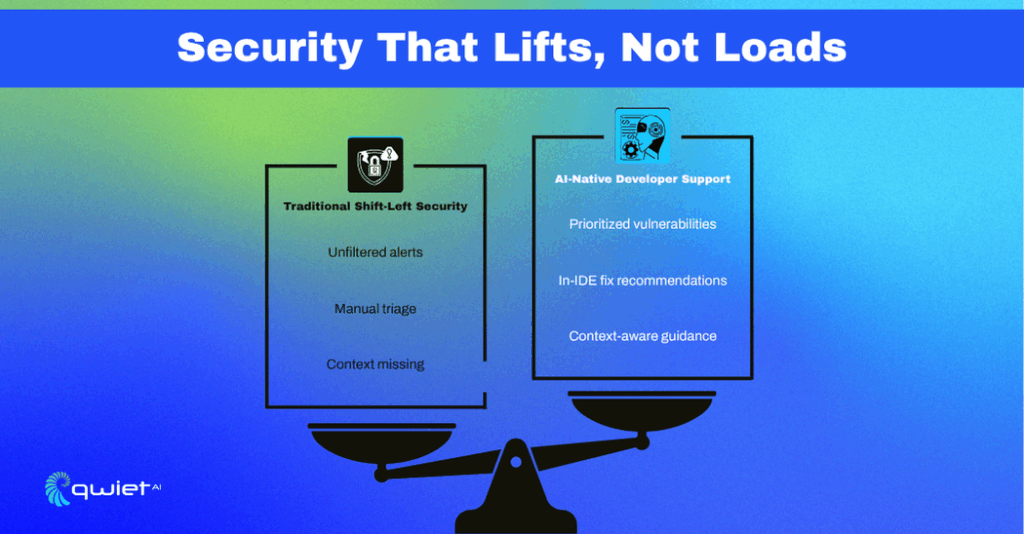

- Developers need signal, not noise. AI-native tools like Qwiet prioritize relevance, context, and timing to relieve alert fatigue. Developers can focus on real risks without interrupting their workflow or wading through a flood of alerts.

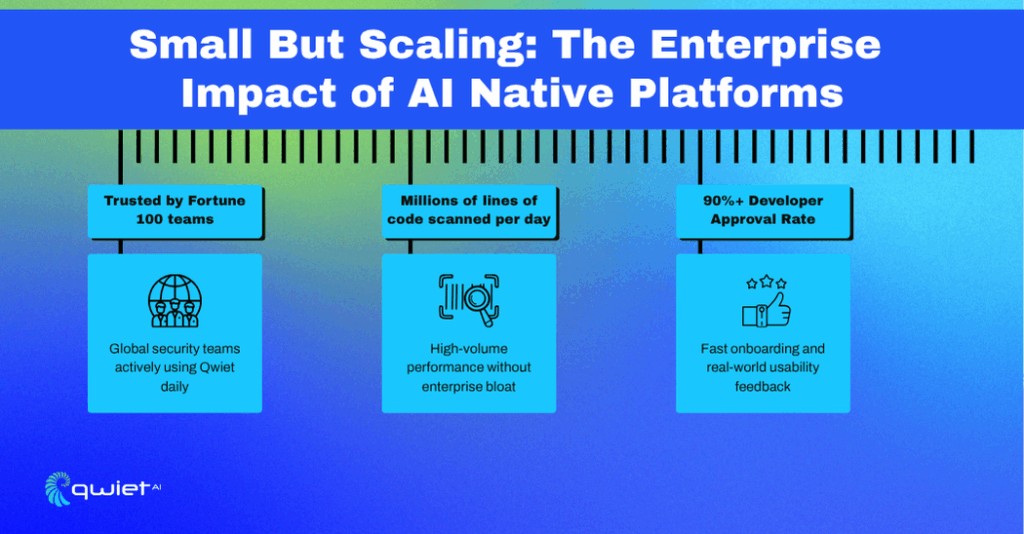

- Enterprise scale is earned through performance, not company size. We aren’t pitching potential; Qwiet has already been deployed at scale in complex enterprise environments, delivering consistent results under load. That proven performance should reassure you of its effectiveness.

AI in AppSec isn’t on the horizon; it’s already reshaping how teams evaluate and adopt tools. But with that shift comes noise. Every vendor claims its product is powered by AI, yet few explain what that means. Buzzwords are everywhere, making it harder for buyers to understand what’s real, what’s inflated, and what will actually help their teams. This article explains what it means to be “AI Native,” why that distinction matters for developers, and why performance, not company size, should guide enterprise decisions.

The Real Meaning of “AI Native”

Built with AI in its Bones, Not Bolted On

AI native isn’t a marketing term; it’s an architectural decision. It means the platform was written to operate with AI as part of every layer: not a model plugged into a pipeline, but a system that can continuously understand, act, and improve without being triggered manually. That design changes how the platform integrates with the development workflow.

In contrast, retrofitted tools tend to treat AI as an add-on. They wrap old scan engines with generative outputs or classification models. But the internal mechanics stay the same. You still get long, static lists of results with little understanding of which issues matter or how they relate to what’s changing in your codebase.

Why Context and Autonomy Matter

With an AI-native platform, signals don’t just flow into a model and wait for a label. They’re evaluated in context across syntax, control flow, data flow, dependencies, and execution paths. That means the system can differentiate between reachable risk and noise. It doesn’t flag something because it looks dangerous in isolation; it checks how and whether it’s being used.
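A common way to separate reachable risk from noise is to check whether the flagged code can be reached from an application entry point at all. The sketch below illustrates that idea with a breadth-first search over a call graph. It is a generic example under stated assumptions, not Qwiet’s implementation: the hand-written `CALL_GRAPH`, the `Finding` type, and names like `handle_request` and `legacy_export` are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str      # hypothetical rule identifier, e.g. "sql-injection"
    function: str  # function in which the risky pattern was detected


def reachable_functions(call_graph: dict[str, list[str]], entry_points: list[str]) -> set[str]:
    """Breadth-first search from the entry points over caller -> callee edges."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        for callee in call_graph.get(fn, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def filter_reachable(findings: list[Finding], call_graph: dict[str, list[str]],
                     entry_points: list[str]) -> list[Finding]:
    """Keep only findings located in code that an entry point can actually reach."""
    live = reachable_functions(call_graph, entry_points)
    return [f for f in findings if f.function in live]


# Hypothetical call graph: legacy_export exists but no entry point ever calls it.
CALL_GRAPH = {
    "handle_request": ["parse_params", "run_query"],
    "run_query": ["build_sql"],
    "legacy_export": ["build_csv"],
}

findings = [
    Finding("sql-injection", "build_sql"),
    Finding("csv-injection", "build_csv"),
]

print(filter_reachable(findings, CALL_GRAPH, ["handle_request"]))
# [Finding(rule='sql-injection', function='build_sql')]
```

The CSV finding drops out because `legacy_export` is never called from the entry point. A production analyzer would also have to account for dynamic dispatch, reflection, and framework-registered handlers, which this sketch ignores.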

Qwiet was built to run like that. It embeds large language models and autonomous agents across the SDLC, not just at scan time. Findings aren’t just dumped into a queue; they’re ranked, grouped, correlated, and explained based on real-world behavior. The system looks at your code like you do: not just what’s written, but how it behaves in the larger structure.
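The grouping and correlation step can be illustrated with a small, generic sketch: findings that share a rule and a dangerous operation (the “sink”) are collapsed into one issue that lists every place untrusted data enters. This is an assumption-laden example, not how Qwiet actually correlates findings; the `RawFinding` fields and the choice to group by `(rule, sink)` are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RawFinding:
    rule: str    # hypothetical rule identifier
    sink: str    # "file:line" of the dangerous operation
    source: str  # "file:line" where untrusted data entered


def correlate(findings: list[RawFinding]) -> dict[tuple[str, str], list[str]]:
    """Group findings that share a rule and a sink, collecting every source that reaches it."""
    groups = defaultdict(list)  # (rule, sink) -> list of sources
    for f in findings:
        groups[(f.rule, f.sink)].append(f.source)
    return dict(groups)


raw = [
    RawFinding("sql-injection", "db.py:42", "api/users.py:10"),
    RawFinding("sql-injection", "db.py:42", "api/orders.py:27"),
    RawFinding("path-traversal", "files.py:8", "api/upload.py:15"),
]

for (rule, sink), sources in correlate(raw).items():
    print(f"{rule} at {sink}: {len(sources)} source(s) {sources}")
# sql-injection at db.py:42: 2 source(s) ['api/users.py:10', 'api/orders.py:27']
# path-traversal at files.py:8: 1 source(s) ['api/upload.py:15']
```

Three raw alerts become two issues, and the SQL injection is reported once with both entry points attached, so a single fix at the sink addresses both.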

Being AI native allows the platform to support decisions, not just document problems. It also lets the system fit how modern teams ship code: quickly, iteratively, and with high awareness of context.

Empowering Developers, Not Overwhelming Them

Security That Works With Devs, Not Against Them

“Shift left” is a concept that promises earlier feedback in the software development process. However, in many organizations, implementing shift-left leaves developers inundated with alerts. Pushing security earlier without filtering or context didn’t make teams more secure; it just moved the noise closer to the code. That’s not helpful when trying to ship something on a deadline.

Security tools that drop unranked findings into pipelines or tickets without context slow teams down. Developers often get stuck trying to determine if an alert is relevant, how to reproduce it, or whether it even applies to the change they’re making. That gap between detection and action creates drag across the release process.

Context-Aware Feedback Developers Can Use

AI-native security platforms have an advantage here. They don’t rely solely on static rules; they evaluate how the code behaves, what paths are reachable, and how data flows through the application. That level of context lets them surface fewer, more relevant findings that developers can act on.
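Data-flow (taint) analysis is one standard way to capture how data moves through an application. The sketch below is a deliberately simplified, generic version, not a description of Qwiet’s engine: the flow graph is written by hand, and names such as `req.id`, `escape_html`, and `sql_query` are hypothetical stand-ins for what an analyzer would extract from real code.

```python
from collections import deque


def tainted_sinks(flows: dict[str, list[str]], sources: list[str],
                  sanitizers: set[str], sinks: list[str]) -> list[str]:
    """Propagate taint along data-flow edges and report the sinks that receive it.

    flows:      maps each value to the values it flows into
    sources:    values that carry untrusted input
    sanitizers: values whose output is treated as clean, so taint stops there
    sinks:      values consumed by a dangerous operation
    """
    tainted = set(sources)
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        for nxt in flows.get(node, []):
            if nxt in sanitizers or nxt in tainted:
                continue  # sanitizers block taint; each node is visited once
            tainted.add(nxt)
            queue.append(nxt)
    return sorted(s for s in sinks if s in tainted)


# Hypothetical data flows for two request parameters.
FLOWS = {
    "req.id": ["user_id"],
    "user_id": ["sql_query"],      # raw id is concatenated into SQL
    "req.name": ["name"],
    "name": ["escape_html"],       # name is escaped before rendering
    "escape_html": ["page_body"],
}

print(tainted_sinks(FLOWS, ["req.id", "req.name"], {"escape_html"}, ["sql_query", "page_body"]))
# ['sql_query']
```

Only the SQL sink is reported, because the HTML path passes through a sanitizer. A pattern-only scanner would likely flag both sinks; tracking the flow is what removes the second alert.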

Qwiet’s system filters out noise by analyzing code structure, usage, and relationships in real time. It can prioritize findings based on risk, clearly explain their impact, and link them to the relevant part of the code that requires attention. That reduces time spent triaging and helps teams stay focused.
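Risk-based prioritization can be sketched as a scoring function that combines severity with context, such as reachability and external exposure, and attaches an explanation that points at the code to fix. The weights, fields, and the `Issue` type below are hypothetical illustrations, not Qwiet’s scoring model.

```python
from dataclasses import dataclass

# Hypothetical severity weights, chosen only for illustration.
SEVERITY_WEIGHT = {"critical": 10, "high": 7, "medium": 4, "low": 1}


@dataclass(frozen=True)
class Issue:
    rule: str        # hypothetical rule identifier
    severity: str    # one of the SEVERITY_WEIGHT keys
    reachable: bool  # outcome of a reachability check
    exposed: bool    # True if the path starts at an internet-facing entry point
    location: str    # "file:line" the developer should open


def risk_score(issue: Issue) -> int:
    """Score an issue; unreachable code scores zero and is filtered out."""
    if not issue.reachable:
        return 0
    score = SEVERITY_WEIGHT[issue.severity]
    return score * 2 if issue.exposed else score


def explain(issue: Issue) -> str:
    """Build a one-line explanation that links the issue to its code location."""
    text = f"{issue.rule} ({issue.severity}) at {issue.location}"
    if issue.exposed:
        text += ", reachable from an external entry point"
    return text


def prioritize(issues: list[Issue]) -> list[tuple[int, str]]:
    """Return (score, explanation) pairs for actionable issues, highest risk first."""
    scored = [(risk_score(i), explain(i)) for i in issues]
    actionable = [pair for pair in scored if pair[0] > 0]
    return sorted(actionable, key=lambda pair: pair[0], reverse=True)


issues = [
    Issue("weak-hash", "medium", True, False, "auth/tokens.py:31"),
    Issue("sql-injection", "high", True, True, "db/users.py:42"),
    Issue("xss", "critical", False, True, "legacy/render.py:88"),
]

for score, why in prioritize(issues):
    print(score, why)
# 14 sql-injection (high) at db/users.py:42, reachable from an external entry point
# 4 weak-hash (medium) at auth/tokens.py:31
```

The unreachable critical XSS drops out entirely, while an exposed high-severity issue outranks a reachable medium one. The specific weights are a design choice; the point is that context, not severity alone, drives the order.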

The feedback doesn’t arrive days later as a separate report; it’s built into the workflow. Developers get meaningful security input while the context is still fresh, which makes it easier to fix issues before they ship without adding friction. Security tools are most effective when they respect developer time: fewer alerts, better context, and smooth integration into how software is built.

Addressing Industry FUD Around AI

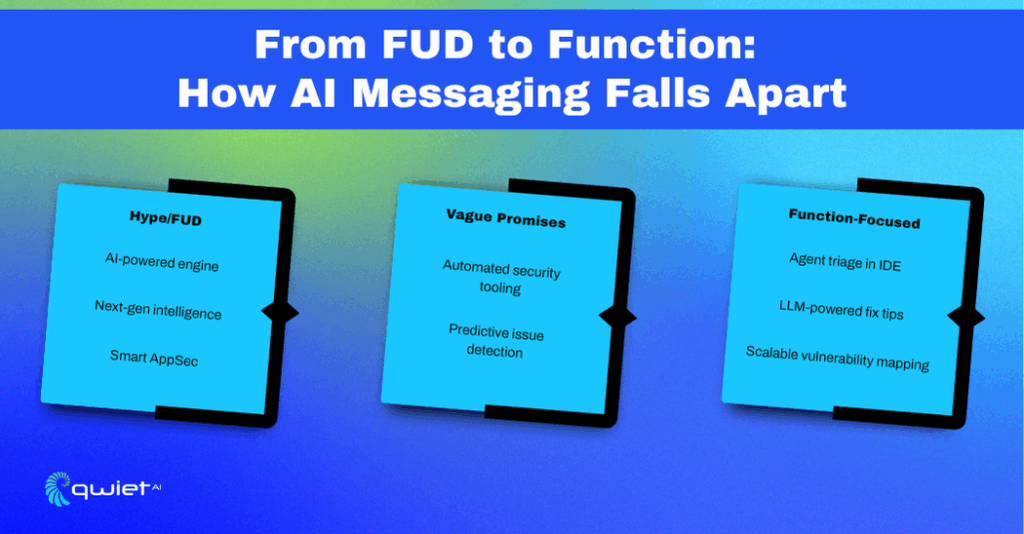

FUD vs. Function: Cutting Through the AI Hype

Every vendor now claims to use AI. But most of the time, there’s no detail, no architecture, and no explanation of what the models do, how they’re integrated, or what changes for the user. This makes it difficult for teams to distinguish marketing from actual capability. The result is a classic case of industry FUD: fear, uncertainty, and doubt.

When AI becomes a buzzword without substance, it creates more confusion than value. Expectations get inflated, but delivery rarely matches. That gap doesn’t just waste time; it damages trust. In security, trust is earned through clarity and repeatable outcomes, not grand promises.

How We Approach Transparency

We don’t treat AI like a black box; we’re transparent about how we use it, whether that’s analyzing control flow, supporting agent-driven decisions, or suggesting secure fixes. It’s all explainable, and it’s all designed to help real development workflows. If something is surfaced, there’s a reason behind it, and you can trace that logic.

That level of transparency helps teams evaluate us: no guessing games, no vague demos. We want technical buyers to see how we apply AI, how it fits into their environment, and how it enhances day-to-day work, not just check boxes on an audit.

The bottom line is: we built this platform to deliver results, not run on hype. If we say AI is doing something, we’ll show you where, how, and why. That’s the standard we hold ourselves to.

Validating the Model: AI Native at Enterprise Scale

Not Just Ready, Already Running at Scale

We’ve heard the sales objections before: “You’re too small to handle what we need.” That’s fair skepticism. But we’re not in the proof-of-concept stage. Our platform is already deployed in some of the world’s largest organizations, scanning millions of lines of code daily in production environments.

We’re handling deeply integrated CI/CD workflows, multi-repo monoliths, and fast-moving microservices under real-world load. That includes large, distributed engineering teams running multiple deployments a day. We’ve had to meet tight Service Level Agreements (SLAs), integrate into secure environments, and maintain consistent performance under pressure.

Scale Comes From Design, Not Headcount

We built our platform to operate under stress without breaking context. That means our analysis engine can process code at speed, maintain structural relationships across files and services, and return accurate and prioritized findings. We don’t dump raw outputs; we correlate data, evaluate reachability, and connect issues back to the specific code paths that matter.

That’s what allows us to support enterprise-scale usage without adding operational friction. You get visibility across massive projects without needing an entire team to manage the tool. Findings remain precise. Context stays intact. Updates to the system don’t interrupt your workflow.

What makes us ready isn’t company size; it’s how the system performs under actual load. The people using us aren’t scaling down expectations. They’re shipping real code, with real deadlines, and they’ve made us part of that loop.

We’re not interested in selling a vision. We’re focused on delivering the performance that developers and security teams can rely on today and scaling with them as their environments evolve.

Conclusion

AI native isn’t a label we apply; it’s how we’ve built everything. We’re not trying to win mindshare with vague claims or inflated promises. We focus on delivering systems that integrate seamlessly into real-world development workflows, scale efficiently, and produce value that teams can see immediately. We’re here to support the people writing and shipping code, not distract them. That’s how we prove that what we’ve built works. Want to see how an AI-native platform performs? We’d rather show than tell. If you’re interested in how this works in real-world pipelines, let’s talk: no fluff, just real code, context, and results.

FAQs

What does AI native mean in AppSec?

AI native in AppSec means the security platform was designed to operate with artificial intelligence embedded in its architecture, not as a plugin or later addition. It supports real-time analysis, contextual insights, and autonomous workflows.

How is AI-washing affecting the AppSec industry?

AI-washing creates confusion by flooding the market with vague claims about AI without technical transparency. This makes it harder for security teams to evaluate which tools deliver value and which are just using AI as a buzzword.

How does Qwiet AI differ from legacy AppSec tools?

Qwiet AI uses a fully AI-native architecture. It analyzes code contextually, prioritizes risks based on real-world behavior, and integrates seamlessly into existing development workflows. Legacy tools typically bolt AI onto older engines and often struggle to provide accurate, timely insights.

Why is context important in application security?

Context helps separate real threats from noise; without it, tools overwhelm developers with alerts that may not matter. Context-aware platforms can analyze code paths, data flow, and execution logic to flag only what’s relevant and actionable.

Can smaller vendors handle enterprise-scale AppSec needs?

Yes, when scale is baked into the design. Qwiet is already operating inside Fortune 100 environments, handling large codebases and CI/CD pipelines without slowing development or requiring massive operational overhead.