Key Takeaways

- All-in-one platforms trade depth for surface-level coverage: Bundling SAST, DAST, IAST, RAST, and ASPM into a single tool often leads to overlap in low-risk areas (e.g., basic code vulnerabilities) and blind spots in high-risk ones (e.g., complex business logic vulnerabilities).

- Context-aware tools outperform general-purpose scanners: Tools that can interpret an application’s unique behavior, logic, and structure provide more accurate and actionable security insights. Real insight comes from understanding those things, not just syntax or endpoint exposure.

- Specialization matters more than feature count: Purpose-built tools that go deep on specific layers of the application stack provide higher-quality findings and stronger security outcomes. The right tools, not the most tools, lead to better security.

Introduction

Security teams are constantly presented with platforms that promise to handle everything under one roof: SAST, DAST, IAST, RAST, and ASPM. The idea sounds good: fewer tools, more coverage. However, the result is often surface-level scanning across multiple areas without the depth needed to catch real issues. When a tool tries to do everything, it usually misses the vulnerabilities that matter, especially the ones tied to behavior, business logic, or application-specific context. This article breaks down where each type of tool does well, where it falls short, and why trying to combine them into a single “A-player” often leads to false confidence.

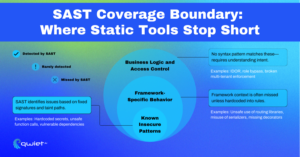

SAST: Best at Early Detection, Worst at Application Context

Strengths:

SAST works well to catch low-effort, high-signal issues early in development. It’s easy to wire into CI/CD pipelines, pre-commit hooks, and IDEs so teams can catch problems before the code leaves the developer’s laptop. Things like hardcoded credentials, use of known-dangerous functions (eval, exec, etc.), and dependency issues are the vulnerabilities SAST is built to find.

Because SAST analyzes source code statically, the app doesn’t need to run. That makes it fast, and it can operate on a pull request without a complete deployment pipeline. It is a good fit for shift-left workflows that aim to catch obvious, pattern-matchable issues before they make it into production. It’s also great when you want lightweight checks that don’t introduce runtime performance overhead.
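To make the idea of pattern-matchable checks concrete, here is a minimal sketch of a static rule written with Python’s standard `ast` module. The rule names, the `SECRET_NAME_HINTS` list, and the `SAMPLE` snippet are assumptions made up for illustration; they are not how any particular SAST product works, and real rule sets are far richer.

```python
import ast

# Hypothetical rule set for illustration only.
DANGEROUS_CALLS = {"eval", "exec"}
SECRET_NAME_HINTS = ("password", "secret", "api_key", "token")


def scan_source(source: str) -> list[tuple[int, str]]:
    """Return sorted (line, message) findings for a Python source string."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: direct calls to known-dangerous builtins.
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in DANGEROUS_CALLS
        ):
            findings.append((node.lineno, f"call to {node.func.id}()"))
        # Rule 2: a string literal assigned to a secret-looking name.
        if (
            isinstance(node, ast.Assign)
            and isinstance(node.value, ast.Constant)
            and isinstance(node.value.value, str)
        ):
            for target in node.targets:
                if isinstance(target, ast.Name) and any(
                    hint in target.id.lower() for hint in SECRET_NAME_HINTS
                ):
                    findings.append((node.lineno, f"hardcoded value in {target.id}"))
    return sorted(findings)


# The code under analysis is only parsed, never executed.
SAMPLE = '''
DB_PASSWORD = "hunter2"

def run(user_input):
    return eval(user_input)
'''

if __name__ == "__main__":
    for line, message in scan_source(SAMPLE):
        print(f"line {line}: {message}")
```

Nothing in `SAMPLE` ever runs, which is why a check like this can sit in a pre-commit hook or run against a pull request.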

Limitations:

SAST consistently fails to understand how code behaves once it’s executed. It can’t reason across service boundaries, follow dynamic control flow, or evaluate logic that depends on real application state. If you’re building multi-tenant systems, API-driven apps, or anything with complex permission layers, SAST often won’t flag the gaps that matter. For example, it won’t tell you if a user from Tenant A can access data belonging to Tenant B because that issue isn’t tied to a dangerous sink but to missing logic.
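The tenant example can be sketched in a few lines. Everything here (the `Invoice` type, the in-memory `INVOICES` store, and both function names) is hypothetical and exists only to show what a missing check looks like next to a present one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    tenant_id: str
    amount: float


# Hypothetical in-memory store standing in for a database.
INVOICES = {
    1: Invoice(1, "tenant-a", 120.0),
    2: Invoice(2, "tenant-b", 990.0),
}


def get_invoice_unsafe(caller_tenant: str, invoice_id: int) -> Invoice:
    # No eval, no string-built query, no dangerous sink: nothing for a
    # pattern rule to match. The bug is the ownership check that is absent.
    return INVOICES[invoice_id]


def get_invoice_safe(caller_tenant: str, invoice_id: int) -> Invoice:
    invoice = INVOICES[invoice_id]
    # The tenant-isolation rule lives in business logic, not in syntax.
    if invoice.tenant_id != caller_tenant:
        raise PermissionError("invoice belongs to another tenant")
    return invoice
```

Both functions look equally clean to a rule that searches for risky constructs. Telling them apart requires knowing that invoices are tenant-scoped, which is exactly the context a syntax-level scan lacks.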

You’ll also hit a ceiling with taint tracking in most static tools. Without full context, they often raise alarms on code paths that are unreachable or not exploitable. So, while you’re getting alerts, they don’t always point to real security problems, and that eats up time. And because these tools operate on syntax, not behavior, they can’t distinguish between a real vulnerability and a harmless pattern that looks risky out of context. That leads to noise, and when teams are drowning in false positives, they start ignoring the scanner altogether.

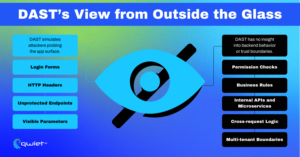

DAST: Best at Runtime Black-Box Testing, Worst at Internal Logic

Strengths:

DAST helps test an application like an external attacker would. It doesn’t rely on access to source code, so it can run against staging or production-like environments and scan live endpoints for vulnerabilities. It’s particularly good at identifying issues like missing authentication, weak input sanitization, and server misconfigurations, which often only appear once the app is deployed and running.

One benefit is that DAST interacts with the application in real-time. It sends requests, observes responses, and looks for behaviors that expose weaknesses. It’s effective at surfacing specific bugs that static tools miss, like reflected inputs in HTTP responses or error leakage from improperly handled exceptions. For public-facing surfaces, it gives you quick feedback on where you’re exposed.
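As a rough sketch of that request-and-observe loop, the probe below sends a unique marker and checks whether it comes back unescaped. The `send` callable is a hypothetical stand-in for an HTTP client call, and the two toy endpoints exist only to exercise the probe; a real scanner covers many more payloads and response contexts.

```python
import html
import uuid
from typing import Callable

# Hypothetical stand-in for an HTTP request: takes a query parameter value
# and returns the response body as text.
Send = Callable[[str], str]


def reflects_input_unescaped(send: Send) -> bool:
    """Probe one parameter and report whether markup comes back unescaped."""
    marker = uuid.uuid4().hex  # unique, so a match is not a coincidence
    probe = f"<i>{marker}</i>"
    body = send(probe)
    return probe in body


# Two toy endpoints used only to exercise the probe.
def vulnerable_search(q: str) -> str:
    return f"<p>No results for {q}</p>"


def escaped_search(q: str) -> str:
    return f"<p>No results for {html.escape(q)}</p>"
```

The probe needs no source access: it only compares what it sent with what it received, which is both the strength and the limit of black-box testing.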

Limitations:

DAST falls short when issues are buried inside internal logic, especially things that don’t have an external interface. It can’t test permissions embedded in service-to-service calls, business logic within background jobs, or data access paths that require authenticated state to reach. Internal APIs, GraphQL resolvers, CRON tasks, or anything behind the UI are usually invisible unless you manually script interaction or mimic internal flows, which most DAST setups don’t cover.

Because DAST has no visibility into the codebase, it also can’t differentiate between legitimate and unintended flows. It doesn’t know which data paths are supposed to be public and which are guarded by application logic. DAST won’t know if a permission check is missing or broken deep in the stack; it only sees the outcome, not the structure behind it. Without context around how data is supposed to flow, it is not uncommon for these tools to miss access control failures or internal API misuse entirely.

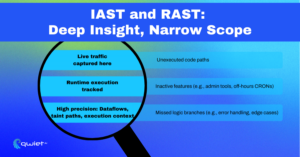

IAST/RAST: Best at Runtime Tracking, Worst at Coverage and Scalability

Strengths:

IAST and RAST tools have a strong advantage in observing how code behaves during execution. Since they hook into the running application, they can track real data flows and flag vulnerabilities based on what’s happening in the live environment. This gives them better accuracy than traditional static or black-box tools. When configured properly, they generate fewer false positives because they only flag issues that occur in real time under real conditions.

Limitations:

The tradeoff is that they only see what gets executed. A function, route, or permission check that isn’t triggered during a test session won’t be analyzed, making coverage dependent on test depth and traffic patterns. They also require staging or QA environments that closely match production, which can be hard to maintain. Deploying and tuning these tools often involves extra overhead (instrumentation, performance profiling, and agent configuration), and that complexity increases as applications scale or evolve.
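The coverage limit is easy to demonstrate with a toy instrumentation hook. The `instrumented` decorator below is a hypothetical stand-in for a runtime agent, and the handler names are invented; the sketch only shows that a handler nobody calls during the session is never observed.

```python
import functools

registered: set[str] = set()  # every handler the agent was attached to
executed: set[str] = set()    # handlers that actually ran in this session


def instrumented(func):
    """Hypothetical agent hook: record that a handler actually ran."""
    registered.add(func.__name__)

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        executed.add(func.__name__)
        return func(*args, **kwargs)

    return wrapper


@instrumented
def list_orders(user: str) -> list[str]:
    return [f"order-for-{user}"]


@instrumented
def export_all_orders(user: str) -> list[str]:
    # Imagine a missing admin check here; the agent sees it only if it runs.
    return ["order-for-everyone"]


def unobserved_handlers() -> set[str]:
    return registered - executed


# A test session that exercises only the common path.
list_orders("alice")
```

After this session, `export_all_orders` is still unobserved, so any flaw inside it stays invisible to the agent until a test or real traffic reaches it.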

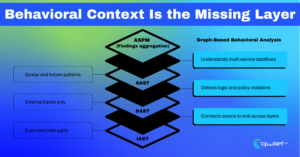

ASPM: Useful for Visibility, Not a Silver Bullet

Strengths:

ASPM platforms are helpful when dealing with multiple scanning tools across different stages of the SDLC, and a centralized view is needed to make sense of it all. They consolidate outputs from SAST, DAST, IAST, and CSPM into a single place, normalize the findings, and let you correlate risk across code, cloud, and runtime. For large organizations with many teams and pipelines, that kind of visibility helps reduce blind spots in reporting. It makes spotting duplication, coverage gaps, or inconsistent severity mapping easier. You also get some value in tying issues to systems of record, like assets, projects, owners, or business units, which helps prioritize.
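A minimal sketch of the consolidate, normalize, and correlate steps might look like the following. The severity vocabulary, the field names, and the assumption that every scanner reports a shared CWE identifier and code location are simplifications made for illustration; real scanner outputs differ far more than this.

```python
from collections import defaultdict

# Hypothetical mapping from each scanner's severity words to one scale.
SEVERITY_MAP = {
    "critical": 4, "high": 3, "error": 3,
    "medium": 2, "warning": 2, "low": 1, "info": 1,
}


def normalize(tool: str, raw: dict) -> dict:
    """Convert one scanner-specific finding into a common shape."""
    return {
        "tool": tool,
        "cwe": raw["cwe"].upper(),
        "location": raw["location"],
        "severity": SEVERITY_MAP[raw["severity"].lower()],
    }


def correlate(findings: list[dict]) -> list[dict]:
    """Merge findings that share a weakness and location across tools."""
    grouped = defaultdict(list)
    for finding in findings:
        grouped[(finding["cwe"], finding["location"])].append(finding)
    return [
        {
            "cwe": cwe,
            "location": location,
            "severity": max(f["severity"] for f in group),
            "tools": sorted({f["tool"] for f in group}),
        }
        for (cwe, location), group in grouped.items()
    ]


raw_findings = [
    ("sast", {"cwe": "CWE-89", "location": "orders.py:42", "severity": "ERROR"}),
    ("iast", {"cwe": "cwe-89", "location": "orders.py:42", "severity": "High"}),
    ("sast", {"cwe": "CWE-798", "location": "config.py:3", "severity": "warning"}),
]
merged = correlate([normalize(tool, raw) for tool, raw in raw_findings])
```

Note what the sketch cannot do: it only reorganizes findings it is given, so an issue that no upstream scanner reported never appears in `merged`.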

Limitations:

ASPM doesn’t analyze code or behavior, and it doesn’t scan anything independently; it depends entirely on the output of the connected tools. If a scanner misses a privilege escalation path, a broken access control condition, or an internal API exposure, ASPM has no way of knowing. It simply reflects the absence of data. It also doesn’t validate reachability, confirm exploitability, or explain the app’s structure. So, if the tools feeding into it generate false positives or surface low-fidelity issues, you’re organizing noise. You’re not improving detection coverage or getting deeper insight into the application’s behavior.

Bottom Line:

ASPM is useful for orchestration and reporting, especially in environments with multiple scanners and teams. However, it doesn’t close detection gaps or replace the need for tools that understand application logic, execution paths, or business rules. If your upstream tools miss something important, ASPM will miss it too, and it won’t tell you that anything is missing. You still need behavioral analysis and context-aware detection upstream if you want meaningful visibility downstream.

The Problem with “All-in-One” AppSec Platforms

Platforms that try to bundle SAST, DAST, IAST, and RAST into a single offering tend to spread themselves too thin. They cover the basics, usually the easy stuff like input validation or outdated libraries, but fall short on more complex, higher-impact issues. On paper, these tools look like they’re doing a lot. Once you dig into the findings, though, you’ll notice repeated alerts across engines for the same low-risk problems and very little visibility into deeper application behavior. The overlap looks like coverage, but it doesn’t translate into better detection.

Under the hood, these platforms usually rely on static scans that don’t account for data flow, execution context, or how logic plays out across services. Their DAST modules scan public routes without simulating real-world abuse scenarios or chaining attack vectors. Their instrumentation is usually shallow; it doesn’t model how authorization decisions are made or how internal APIs are structured. That leaves wide gaps in the places that matter most: authentication paths, privilege enforcement, tenant isolation, and other business logic boundaries that require context to analyze correctly. These areas are often where the real risk lives, and they are exactly what these tools miss.

What Does “A-Player” Look Like? Purpose-Built, Context-Aware, Behavior-Driven

A tool that moves the needle doesn’t try to do everything; it goes deep where it matters. A real SAST tool should understand code structure and semantics, not just match patterns. A proper DAST tool should behave like an attacker would, with logic-aware probes instead of basic fuzzing. Runtime tools need to do more than observe function calls; they should track execution paths, model user roles, enforce policy expectations, and highlight violations when behavior drifts from what’s expected.

The most valuable tools can connect intent, behavior, and technical implementation into a single view. Purpose-built systems that can reason about the application as a whole, and surface issues that reflect actual risk, are more effective than shallow results stitched together from multiple engines.

Conclusion

You don’t get better security by stacking features into a single product; you get it by using tools that understand the problem they’re solving. SAST, DAST, IAST, and RAST all have value in the right places, but no single tool can be the best at all of them. The tradeoffs are real, and when platforms try to blur them, they usually leave detection gaps in the areas with the highest risk. Qwiet was built to focus on what static and dynamic tools routinely miss: code behavior, business logic, and how user input moves across services, helpers, and permission layers. It doesn’t try to replace every tool in your pipeline. It’s built to go deep where others can’t. Book a demo to see it for yourself.

Qwiet AI has built upon the Code Property Graph (CPG) work presented at https://joern.io/impact.

FAQ

Can a single AppSec tool handle SAST, DAST, IAST, and RAST?

Not effectively. Platforms that claim to support all four often spread themselves too thin. While they may offer surface-level scanning across multiple areas, they usually miss deeper, high-impact vulnerabilities, such as broken access control, internal API misuse, or business logic flaws. These gaps happen because each testing method requires a different context and analysis depth, which most bundled tools can’t provide at the same level as focused, purpose-built solutions.

What’s the main limitation of all-in-one application security platforms?

All-in-one platforms often generate overlapping findings from multiple engines while missing the complex issues that matter most. They rely on generic scanning methods, offer limited behavior modeling, and can’t comprehensively analyze how application logic, roles, and data flows work in production environments.

How does Qwiet AI differ from traditional AppSec tools?

Qwiet focuses on behavior-based detection using graph analysis and AI. Instead of relying on syntax or static rules, it maps how data flows through the code, models execution paths, and flags real risks based on context. This allows it to catch business logic issues and policy violations that other tools often overlook.

What should you look for in an effective AppSec tool?

Look for tools that understand code behavior, not just surface patterns. A strong AppSec tool should model application logic, track actual execution paths, simulate attacker behavior, and support policy enforcement tied to your actual architecture, not just static code signatures or fuzzed input.