Key Takeaways

- Improved Decision-Making: Explainable AI (XAI) helps security teams understand AI-driven decisions.

- Enhanced Trust: Provides clear, human-readable explanations for security alerts and risk assessments.

- Regulatory Compliance: Supports compliance with regulations requiring transparency in AI-based security solutions.

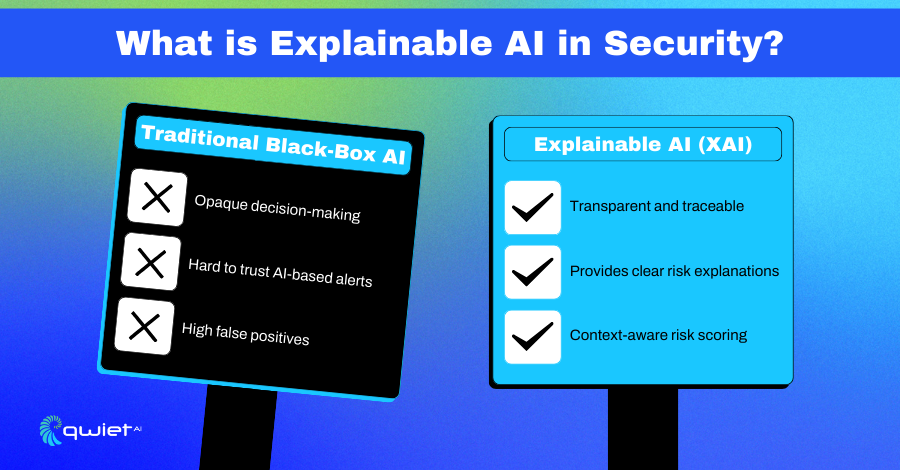

What is Explainable AI in Security?

Explainable AI (XAI) helps security teams understand how AI-driven decisions are made. Instead of accepting alerts or risk scores without context, XAI clearly explains why something was flagged, what data influenced the decision, and how the AI reached its conclusion.

Many AI models, especially complex ones such as deep neural networks, operate like black boxes, making it hard to know whether their decisions are accurate or based on flawed logic. XAI changes that by offering transparency, so security teams can verify AI-driven insights before acting on them. This helps analysts respond faster, dismiss false positives with confidence, and rely on AI-powered security tools. As more regulations require transparency in AI decision-making, XAI also helps organizations stay compliant by creating clear audit trails for security decisions.

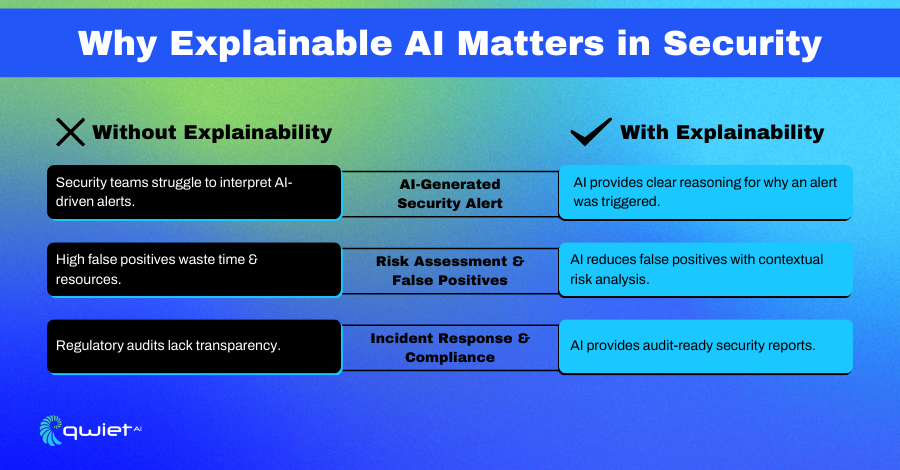

Why Does Explainable AI Matter in Security?

Security Benefits

AI-powered security tools are great at detecting threats, but if teams don’t understand why something was flagged, the result is confusion and wasted time. Explainable AI helps by showing why an alert was triggered and which factors contributed to the decision. This makes it easier to separate real threats from false alarms and speeds up incident response. Instead of guessing why the AI made a call, security teams get the full picture upfront.

Operational Benefits

When security teams can see how AI makes its decisions, they trust the system more. Explainable AI makes AI-driven security tools easier to work with by providing clear insights that analysts can validate. It also improves collaboration between security, compliance, and development teams by making AI-generated alerts more transparent. Instead of dealing with a black box, teams can quickly understand risks and work together to strengthen security.

Compliance and Governance

Regulations and frameworks such as the EU’s GDPR and the NIST AI Risk Management Framework call for transparency in automated decision-making, so organizations increasingly need to explain how AI-based security decisions are made. Explainable AI makes compliance more manageable by providing clear, audit-ready justifications for security actions. Instead of scrambling to document decisions, teams have a transparent record of why certain risks were flagged and what actions were taken. This helps with audits and makes AI-driven security more accountable and reliable.
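
To make the idea of an audit-ready record concrete, here is a minimal Python sketch of a single log entry that captures what was flagged, why, and what was done about it. The `AuditRecord` class, its field names, and the example values are all hypothetical; a real schema would follow your own logging and retention requirements.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditRecord:
    """Hypothetical audit entry for one AI-driven security decision."""

    alert_id: str
    model_version: str                    # which model produced the decision
    risk_score: float
    decision: str                         # e.g. "flagged" or "allowed"
    top_factors: list[tuple[str, float]]  # (feature, contribution) pairs
    action_taken: str
    reviewed_by: Optional[str] = None     # analyst who validated the alert
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # asdict() recurses into nested lists/tuples; json.dumps writes tuples as arrays
        return json.dumps(asdict(self), sort_keys=True)


record = AuditRecord(
    alert_id="ALERT-0001",
    model_version="login-risk-v3",
    risk_score=0.87,
    decision="flagged",
    top_factors=[("failed_logins_last_hour", 0.41), ("new_geolocation", 0.29)],
    action_taken="session terminated; ticket opened",
)
print(record.to_json())
```

Storing the model version and the top contributing factors next to the action taken is what lets an auditor later reconstruct why a given alert fired, even after the model has been retrained.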

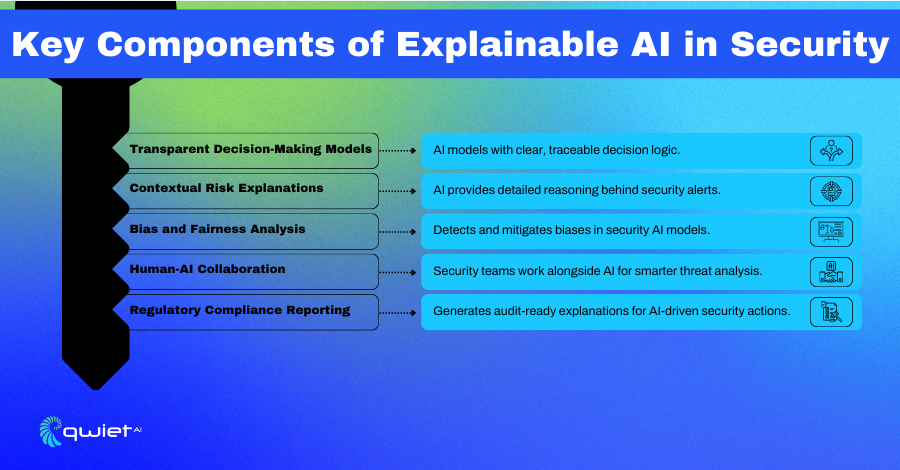

Key Components of Explainable AI in Security

Transparent Decision-Making Models

AI security models need to be more than just accurate; they need to be understandable. Transparent decision-making models use interpretable algorithms, such as decision trees or rule-based systems, so security teams can see how the AI reaches its conclusions. Every step in the decision process is traceable, making it easier to verify findings, troubleshoot issues, and trust AI-driven security assessments.
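
A decision tree is one common example of an interpretable model: every prediction follows a path of simple threshold tests that can be logged. The minimal Python sketch below walks a hand-built tree and records each test it applies. The `Node` and `Leaf` classes, feature names, and thresholds are hypothetical illustrations, not taken from any specific product.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str  # final verdict, e.g. "benign" or "suspicious"


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # followed when event[feature] <= threshold
    right: "Tree"  # followed when event[feature] > threshold


Tree = Union[Node, Leaf]


def classify_with_trace(tree: Tree, event: dict[str, float]) -> tuple[str, list[str]]:
    """Return the verdict plus a human-readable trace of every test applied."""
    trace: list[str] = []
    node = tree
    while isinstance(node, Node):
        value = event[node.feature]
        if value <= node.threshold:
            trace.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            trace.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, trace


# Hypothetical login-risk tree with illustrative thresholds.
login_tree = Node(
    feature="failed_logins_last_hour",
    threshold=5,
    left=Leaf("benign"),
    right=Node(
        feature="new_geolocation",
        threshold=0.5,
        left=Leaf("review"),
        right=Leaf("suspicious"),
    ),
)

verdict, steps = classify_with_trace(
    login_tree, {"failed_logins_last_hour": 12, "new_geolocation": 1}
)
print(verdict)
for step in steps:
    print("  " + step)
```

Because the trace is generated from the same tests that produced the verdict, an analyst can check each step directly against the raw event data.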

Contextual Risk Explanations

Not all security threats are the same, and AI should explain why something is considered a risk. Contextual risk explanations break down the data points that led an event to be flagged as a threat. Instead of just presenting a score or alert, explainable AI shows which factors contributed most to the risk assessment, making it easier for teams to respond appropriately.
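
For a linear risk model (for example, the log-odds score of a logistic regression), each feature’s contribution is simply its weight times its value, so the explanation falls directly out of the model. The Python sketch below computes and ranks those contributions. The feature names, weights, and bias are hypothetical; non-linear models need attribution techniques such as SHAP to produce a comparable breakdown.

```python
def explain_linear_score(
    weights: dict[str, float], bias: float, event: dict[str, float]
) -> tuple[float, list[tuple[str, float]]]:
    """Return the raw score and per-feature contributions, largest impact first."""
    # Each feature contributes weight * value; missing features count as 0.
    contributions = {name: w * event.get(name, 0.0) for name, w in weights.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights for a login-risk model (positive weights raise risk).
weights = {
    "failed_logins_last_hour": 0.3,
    "new_geolocation": 1.5,
    "off_hours_access": 0.8,
    "mfa_passed": -2.0,
}
event = {
    "failed_logins_last_hour": 4,
    "new_geolocation": 1,
    "off_hours_access": 1,
    "mfa_passed": 0,
}

score, factors = explain_linear_score(weights, bias=-1.0, event=event)
print(f"risk score (log-odds): {score:.2f}")
for name, contribution in factors:
    print(f"  {name}: {contribution:+.2f}")
```

Ranking by absolute contribution surfaces both the factors that pushed the score up and any that pulled it down, such as a passed MFA check.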

Bias and Fairness Analysis

AI models can inherit biases from the data they are trained on, leading to unfair or inaccurate risk assessments. Bias and fairness analysis helps identify and correct these issues so that security decisions are based on real risks rather than flawed data. This keeps AI from disproportionately flagging certain users or behaviors while overlooking real threats, improving both accuracy and ethical accountability.
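
One simple starting point for bias analysis is to compare, across groups such as departments, regions, or device types, how often the model flags events and how often it flags benign activity (the false positive rate). The Python sketch below computes both per group, plus the largest gap between groups. The group labels and sample records are hypothetical, and deciding which groups and metrics matter is a policy question the code does not answer.

```python
from collections import defaultdict
from typing import Iterable, Optional


def rates_by_group(
    records: Iterable[tuple[str, bool, bool]],
) -> dict[str, dict[str, Optional[float]]]:
    """records: (group, was_flagged, actually_malicious) for each reviewed event."""
    total, flagged = defaultdict(int), defaultdict(int)
    benign, false_pos = defaultdict(int), defaultdict(int)
    for group, was_flagged, malicious in records:
        total[group] += 1
        flagged[group] += int(was_flagged)
        if not malicious:
            benign[group] += 1
            false_pos[group] += int(was_flagged)  # benign event that was flagged
    return {
        g: {
            "flag_rate": flagged[g] / total[g],
            # None when a group has no benign events to measure against
            "false_positive_rate": false_pos[g] / benign[g] if benign[g] else None,
        }
        for g in total
    }


def max_gap(report: dict[str, dict[str, Optional[float]]], metric: str) -> float:
    """Largest difference in `metric` between any two groups (ignores None)."""
    values = [r[metric] for r in report.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


# Hypothetical reviewed alerts: (team, flagged by model, confirmed malicious)
records = [
    ("team_a", True, True), ("team_a", False, False),
    ("team_a", False, False), ("team_a", True, False),
    ("team_b", True, True), ("team_b", True, False),
    ("team_b", True, False), ("team_b", False, False),
]
report = rates_by_group(records)
print(report)
print("false positive rate gap:", round(max_gap(report, "false_positive_rate"), 3))
```

A large gap is a signal to investigate the training data or features, not proof of bias on its own; small samples in particular can produce misleading rates.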

Human-AI Collaboration

AI should work alongside security teams, not replace them. Explainable AI allows analysts to review, refine, and validate AI-driven insights, making threat investigations more effective. AI speeds up detection and analysis, while human expertise adds critical thinking and context. This balance helps security teams respond faster and with more confidence.

Conclusion

Opaque AI security models often leave teams unsure why specific threats were flagged, making it harder to trust and act on AI-driven decisions. Without transparency, security teams waste time sorting through alerts without understanding the reasoning behind them. Explainable AI changes that by providing clear insights into how security decisions are made. It helps teams validate detections, builds trust, and lets them respond to threats with confidence. By making AI-driven security more transparent, organizations can reduce risk, strengthen compliance, and optimize their security operations. Want to see how Explainable AI can improve your security strategy? Book a demo today.

FAQs

What is Explainable AI in Security?

Explainable AI (XAI) provides clear reasoning behind AI-driven security decisions. Instead of only flagging threats or assigning risk scores, it also breaks down the logic behind each alert, helping security teams understand why something was detected and what factors influenced the decision.

Why is explainability important in cybersecurity?

Without transparency, AI-generated alerts can be difficult to trust. Explainability makes it easier for teams to verify AI-driven insights and identify false positives, which improves response times. When security professionals understand why AI made a decision, they can act faster and more confidently.

Can Explainable AI be integrated into existing security tools?

In most cases, yes. XAI techniques can be applied to existing AI-driven security solutions to make their decision-making processes more transparent. They help organizations refine their security models, improve detection accuracy, and provide human-readable explanations for automated threat assessments.

How does Explainable AI reduce risk?

XAI reduces guesswork by clarifying why security actions were taken. This improves decision-making, prevents unnecessary disruptions, and helps organizations maintain accountability. With clearer insights, security teams can focus on real threats while avoiding false alarms.

About Qwiet AI

Qwiet AI empowers developers and AppSec teams to dramatically reduce risk by quickly finding and fixing the vulnerabilities most likely to reach their applications and ignoring reported vulnerabilities that pose little risk. Industry-leading accuracy allows developers to focus on security fixes that matter and improve code velocity while enabling AppSec engineers to shift security left.

A unified code security platform, Qwiet AI scans for attack context across custom code, APIs, OSS, containers, internal microservices, and first-party business logic by combining results of the company’s and Intelligent Software Composition Analysis (SCA). Using its unique graph database that combines code attributes and analyzes actual attack paths based on real application architecture, Qwiet AI then provides detailed guidance on risk remediation within existing development workflows and tooling. Teams that use Qwiet AI ship more secure code, faster. Backed by SYN Ventures, Bain Capital Ventures, Blackstone, Mayfield, Thomvest Ventures, and SineWave Ventures, Qwiet AI is based in Santa Clara, California. For information, visit: https://qwietdev.wpengine.com