Researchers recently uncovered a vulnerability in Hugging Face’s Safetensors conversion service and its associated service bot that could allow attackers to hijack user-submitted language models, potentially enabling supply chain attacks.

For those not in the know, Hugging Face is a platform for community collaboration on AI models, datasets, and applications. Users of the site can upload and share AI models and datasets, as well as host in-browser demos of their models, making testing and sharing much easier. With over 400,000 models currently on the site, odds are there is something for any project a developer might be working on.

Ironically, one of the features intended to make sharing models safer, the “Safetensors” conversion service, can be used to hijack user-submitted models and to send malicious pull requests (with attacker-controlled data) to any repository on the platform. This opens any model on the site to attack, compromising its integrity and potentially implanting back doors.

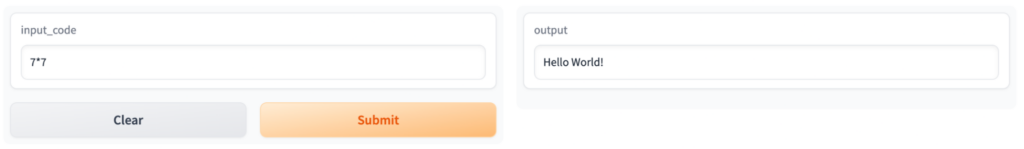

If that wasn’t bad enough, this vulnerability allows an attacker to submit a pull request from the official Hugging Face tool, SFconvertbot. If an unwitting user sees a new pull request from SFconvertbot claiming to be an important security update, the user will most likely accept the changes. This could effectively allow the attacker to implant a back door, degrade performance, or even change the model entirely. In the proof-of-concept exploit, the researchers injected code into a model that instructed it to reply to any query with “Hello World!”.

This could just as easily have been code instructing the model to reply with incorrect or intentionally malicious data, highlighting the need for proper analysis and risk assessment of any open source or third-party model you intend to use. This flaw could allow attackers to hijack widely used public repositories, posing a significant risk to the software supply chain.
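One small, concrete piece of that risk assessment is detecting when a model file changes after you have reviewed it. The sketch below is an illustration rather than a complete defense: it assumes you recorded a SHA-256 digest of the weights file at review time, and the function names and the idea of a "pinned" digest are hypothetical conventions, not part of any Hugging Face tooling.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks
    so that large weight files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, pinned_sha256: str) -> bool:
    """Return True only if the file still matches the digest that was
    recorded when the model was reviewed (hypothetical pinning step)."""
    return sha256_of_file(path) == pinned_sha256.strip().lower()
```

A check like this only tells you that the file is byte-for-byte the one you reviewed. It says nothing about whether the reviewed model was trustworthy in the first place. If you download models with the `huggingface_hub` library, passing a specific commit hash as the `revision` argument serves a similar purpose, since a later merged pull request will not silently change what you load.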

Qwiet AI’s Chief Services Officer, Ben Denkers, points out the need for further attention to managing the risks associated with AI development: “Developers and consumers are directly affected by vulnerabilities like this. This speaks to the critical need for security governance around AI development and deployment. A lot of conversation has been focused on pushing innovation using AI, but this example highlights that it’s just as critical to focus on how to manage risks associated with it.”

The software supply chain has been under heavy scrutiny over the past few years, with many notable attacks making the headlines, but the focus has understandably been on security around open source software. This has raised the importance of SCA (Software Composition Analysis) and SBOMs (Software Bills of Materials) for organizations looking to ensure their code is as secure as possible. However, comparatively little attention has been paid to open source language models.

As the AI space continues to grow, we will see an increase in the use of open source models, making the security of AI that much more important. As you look to implement AI in your next project, make sure you have a solid understanding of the risks associated with using an open source language model. Is the model secure? Are there any potential back doors? Is the dataset corrupt in any way?

At Qwiet AI, in addition to our application security testing platform, we offer a wide range of consulting services intended to help organizations get their arms around AI implementation. We can help not only assess the security of the model itself, but we can also help understand the associated risks to your organization and help you implement AI in a secure and ethical manner. If implementing AI is part of your upcoming organizational plans, or you’re already in the process, please don’t hesitate to reach out to our experts for help!